Your phone knows what you’re about to type before you do. Netflix knows what you’ll binge next. Banks know if you’ll repay a loan. Spotify knows the songs you’ll play on repeat.

But how? Who’s secretly watching all of us?

Psychic pigeons? A secret society of mind-reading people? Or your mom – because she somehow always knows?

Nope. It’s AI algorithms.

These systems make eerily accurate predictions by reading tons of data and analyzing patterns.

But what exactly is an AI algorithm? How does it work?

What are its different types, and how is it being used in real-world applications? We’re covering all of that, and much more, in today’s blog.

Let’s start from the beginning.

What Are AI Algorithms?

AI algorithms are used in everyday technology – Google Search, Siri, Netflix recommendations – but they’re also used in fraud detection using transaction monitoring software, self-driving cars, and medical diagnostics.

The roots of AI go back to 1936, when Alan Turing described the Turing machine, an abstract device that showed how machines could follow logical steps to solve problems.

In 1950, he asked the question, “Can machines think?” and proposed what became known as the Turing Test as a way to answer it.

Back in the 1950s and 60s, some programs, such as the Logic Theorist, could prove math theorems.

But there was one problem – they couldn’t learn. Every single rule had to be manually programmed.

At its core, AI is just a set of instructions—an algorithm—that helps machines make decisions.

Some are simple, like filtering spam emails. Others are more complex, like predicting disease risks based on medical records.

But let’s be clear: AI doesn’t think for itself. It depends on human programming and continuous learning to get better at what it does.

Let’s understand this with an example.

AI also helps with bigger decisions. Take a bank approving a loan. It might use a Decision Tree (a simple AI model that works like a flowchart):

- Does the applicant have a stable income? No → Deny the loan. Yes → Check credit score.

- Good credit score? No → Reconsider. Yes → Check existing loans.

- Too many loans? High risk. Few loans? Lower risk.

At the end of the process, the AI either approves or denies the loan based on structured logic.
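To make the flowchart concrete, here is a minimal sketch of the same three checks written as plain Python. The `Applicant` fields and the cutoff values (650 for a “good” credit score, 2 for “too many” loans) are assumptions made up for illustration. A real bank would learn or tune them from its own data.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    stable_income: bool
    credit_score: int
    existing_loans: int


def loan_decision(applicant, min_credit_score=650, max_existing_loans=2):
    """Walk the flowchart top to bottom and return the outcome.

    Both cutoffs are hypothetical values chosen for this example.
    """
    if not applicant.stable_income:
        return "deny"
    if applicant.credit_score < min_credit_score:
        return "reconsider"
    if applicant.existing_loans > max_existing_loans:
        return "high risk"
    return "lower risk"


print(loan_decision(Applicant(stable_income=True, credit_score=720, existing_loans=1)))
```

Each `if` is one diamond in the flowchart. A learned decision tree has the same shape, except the questions and cutoffs are picked automatically from past data.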

Now comes the next part…

How AI Algorithms Enable Machine Learning & Automation

Think of it like this.

Imagine teaching a child how to recognize dogs.

A teacher would show them pictures, point out key features, and over time, they would get better at spotting one in real life.

AI algorithms learn the same way – learning from massive amounts of data to make predictions and automate tasks.

1 – Prediction

A prediction algorithm studies past information to make automated, real-time predictions. Regression is one common example.

For example, Netflix recommends shows based on predictions about what you’ll enjoy. If you liked Stranger Things, it might suggest Dark or The Umbrella Academy because others who liked Stranger Things watched those, too.
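The “others who liked this also watched that” idea can be sketched with a simple count. This is only a toy stand-in, not how Netflix actually works. The watch histories below are invented, and `recommend` is a hypothetical helper name.

```python
from collections import Counter

# Hypothetical watch histories, one set of titles per viewer.
watch_histories = [
    {"Stranger Things", "Dark", "The Umbrella Academy"},
    {"Stranger Things", "Dark"},
    {"Stranger Things", "Dark", "The Umbrella Academy"},
    {"Bridgerton", "The Crown"},
]


def recommend(liked_title, histories, top_n=2):
    """Suggest the titles most often watched alongside liked_title."""
    counts = Counter()
    for history in histories:
        if liked_title in history:
            # Count every other title this viewer also watched.
            counts.update(history - {liked_title})
    return [title for title, _ in counts.most_common(top_n)]


print(recommend("Stranger Things", watch_histories))
```

In this made-up data, Dark was watched by three Stranger Things fans and The Umbrella Academy by two, so those are the two suggestions.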

2 – Machine Learning

But AI isn’t just about predictions – it adapts.

Machine learning enables computers to learn and improve from experience without being explicitly programmed.

For example, what if you only liked Stranger Things for its sci-fi elements but hated horror? What if you preferred short, fast-paced shows over slow-burn dramas? Netflix’s algorithm analyzes your viewing activity in detail and adjusts its recommendations accordingly.

3 – Automation

Then there’s automation.

Automation is the process of using technology to perform tasks with minimal human intervention.

For example, self-driving cars use computer vision to “see” the road, recognize stop signs, and learn from every mile they drive.

The more data they process, the smarter they get.

How AI Algorithms Work (Step-by-Step)

Just like a person learning a new skill, AI also picks things up step-by-step.

Let’s break it down using the image recognition feature in Google Search.

Step # 1 – Data Collection

Everything starts with data. AI needs a huge number of examples to learn from, often hundreds of thousands or more. In image recognition, that includes:

- Millions of labeled images (e.g., pictures of cats labeled “cat,” pictures of dogs labeled “dog”).

- Variation in lighting, angles, and quality.

- Different sizes, colors, and shapes of the same object.

- Edge cases (blurry images, objects partially hidden, low contrast).

Step # 2 – Preprocessing

Raw image datasets contain a lot of unhelpful material, such as blurry or low-quality images, unrelated objects, and cluttered scenes.

Before training AI, the data must be cleaned and standardized. This includes:

- Resizing images to a uniform size so they can be processed consistently.

- Grayscale or color normalization to ensure brightness and contrast don’t mislead the AI.

- Removing noise like unnecessary background elements that don’t contribute to object identification.
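Here is a rough sketch of the resizing and normalization steps using plain Python lists, so nothing needs to be installed. The function names are hypothetical, and an image is assumed to be a list of rows of pixel values. Real pipelines would use an image library instead.

```python
def to_grayscale(image):
    """Turn rows of (r, g, b) pixels into rows of brightness values."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in image]


def resize_nearest(image, new_height, new_width):
    """Resize by picking the nearest source pixel for each output pixel."""
    old_height, old_width = len(image), len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


def normalize(image):
    """Rescale values to the 0..1 range so brightness is comparable."""
    flat = [value for row in image for value in row]
    low, high = min(flat), max(flat)
    span = (high - low) or 1.0  # avoid dividing by zero on flat images
    return [[(value - low) / span for value in row] for row in image]


tiny = [[(255, 255, 255), (0, 0, 0)], [(0, 0, 0), (255, 255, 255)]]
print(normalize(to_grayscale(tiny)))
```

Noise removal is left out here because it depends heavily on the dataset.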

Step # 3 – Training

AI doesn’t “see” images the way humans do. It sees them as numbers—thousands of pixels, each with a value representing brightness and color.

To make sense of this, AI uses a Convolutional Neural Network (CNN), a special type of deep learning model built for image recognition.

Here’s how CNNs break down an image:

- Convolution Layers: AI scans the image in parts, first detecting simple shapes (lines, curves) and later recognizing complex features (eyes, ears, whiskers).

- Pooling Layers: These shrink the image, keeping essential details while discarding unnecessary pixels.

- Fully Connected Layers: AI links detected features to make a final prediction—if it sees pointed ears and whiskers, it identifies a cat.
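To give a feel for the first two layer types, here is a small sketch of one convolution and one pooling step on a 5×5 grid of numbers. The vertical-edge kernel is hand-picked for illustration. In a real CNN, the kernel values are learned during training, and a deep learning library does this work.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    k_height, k_width = len(kernel), len(kernel[0])
    out_height = len(image) - k_height + 1
    out_width = len(image[0]) - k_width + 1
    return [
        [sum(image[y + i][x + j] * kernel[i][j]
             for i in range(k_height) for j in range(k_width))
         for x in range(out_width)]
        for y in range(out_height)
    ]


def max_pool(feature_map, size=2):
    """Shrink the map by keeping the largest value in each size x size block."""
    return [
        [max(feature_map[y + i][x + j]
             for i in range(size) for j in range(size))
         for x in range(0, len(feature_map[0]) - size + 1, size)]
        for y in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 "image": dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds where brightness jumps left to right.
edge_kernel = [[-1, 1], [-1, 1]]

features = convolve2d(image, edge_kernel)
print(features)            # strongest response where the edge sits
print(max_pool(features))  # smaller map that still marks the edge
```

The convolution output is only non-zero in the column where dark meets bright, and pooling halves the map while keeping that signal.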

This process involves epochs.

Imagine you’re learning to recognize different bird species. The first time you see a sparrow and a pigeon, you might mix them up.

But after looking at pictures, studying their features, and getting feedback, you improve.

AI learns the same way.

An epoch is one complete cycle where the AI looks at all the training data, makes predictions, checks for mistakes, and adjusts.

It does this over and over—just like how you’d practice multiple times to get better at a skill.
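The loop below sketches what “epoch after epoch” looks like in code, using a perceptron, one of the simplest learning algorithms. It is far smaller than a CNN, and the four training examples are toy data invented for this sketch, but the predict, check, adjust cycle is the same idea.

```python
def train_perceptron(examples, epochs=10, learning_rate=1.0):
    """Train on (features, target) pairs and record mistakes per epoch."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    mistakes_per_epoch = []
    for _ in range(epochs):  # one epoch = one full pass over the data
        mistakes = 0
        for features, target in examples:
            score = sum(w * x for w, x in zip(weights, features)) + bias
            prediction = 1 if score > 0 else 0
            error = target - prediction
            if error != 0:
                # Wrong answer: nudge the weights toward the right one.
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        mistakes_per_epoch.append(mistakes)
    return weights, bias, mistakes_per_epoch


# Toy data: the label is 1 if either feature is present.
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias, history = train_perceptron(examples)
print(history)
```

The printed list shows how many mistakes were made in each pass. On this toy data it drops to zero after a few epochs and stays there.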

Step # 4 – Testing

Before AI is ready for real-world use, it needs to be tested. This involves:

- Feeding it images it has never seen before.

- Measuring its accuracy—does it correctly label a cat as a cat?

- Checking for overfitting, where AI memorizes training data but struggles with new images.

If AI fails too often, it goes back for more training until it can reliably identify images it’s never encountered.
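A minimal sketch of the two checks above: accuracy on unseen images, and a rough overfitting warning when training accuracy is much higher than test accuracy. The 10-point gap used as the warning threshold is an assumption for illustration, not a standard value.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def looks_overfit(train_accuracy, test_accuracy, max_gap=0.10):
    """Flag a model that does much better on data it has already seen."""
    return (train_accuracy - test_accuracy) > max_gap


test_predictions = ["cat", "dog", "cat", "dog"]
test_labels = ["cat", "dog", "dog", "dog"]

test_accuracy = accuracy(test_predictions, test_labels)
print(test_accuracy)
print(looks_overfit(train_accuracy=0.99, test_accuracy=test_accuracy))
```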

Step # 5 – Deployment

Once trained and tested, the AI model is deployed. When we feed it an image, it will:

- Break it down into pixel values

- Run it through all learned layers

- Generate a probability score for each possible label

- Choose the most likely classification

A typical result might look like:

- Cat: 99.7% probability

- Dog: 0.2% probability

- Other: 0.1% probability
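Those percentages typically come from a function called softmax, which turns the model’s raw scores into probabilities that add up to 100%. Here is a small sketch. The raw scores are made-up numbers, so the output will not match the example above exactly.

```python
import math


def softmax(scores):
    """Convert raw label scores into probabilities that sum to 1."""
    largest = max(scores.values())  # subtracting it keeps exp() stable
    exps = {label: math.exp(s - largest) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def classify(scores):
    """Return the most likely label along with all probabilities."""
    probabilities = softmax(scores)
    best_label = max(probabilities, key=probabilities.get)
    return best_label, probabilities


# Hypothetical raw scores from the final layer of a model.
label, probabilities = classify({"cat": 8.0, "dog": 2.0, "other": 1.0})
print(label)
for name, p in probabilities.items():
    print(f"{name}: {p:.1%}")
```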

If you want to see how similar algorithmic thinking applies to math, Undetectable AI’s Math Solver breaks down equations step by step using the same logic-driven approach.

It shows every stage of the solution clearly, which makes it easier to understand how algorithms process and reason through structured problems.

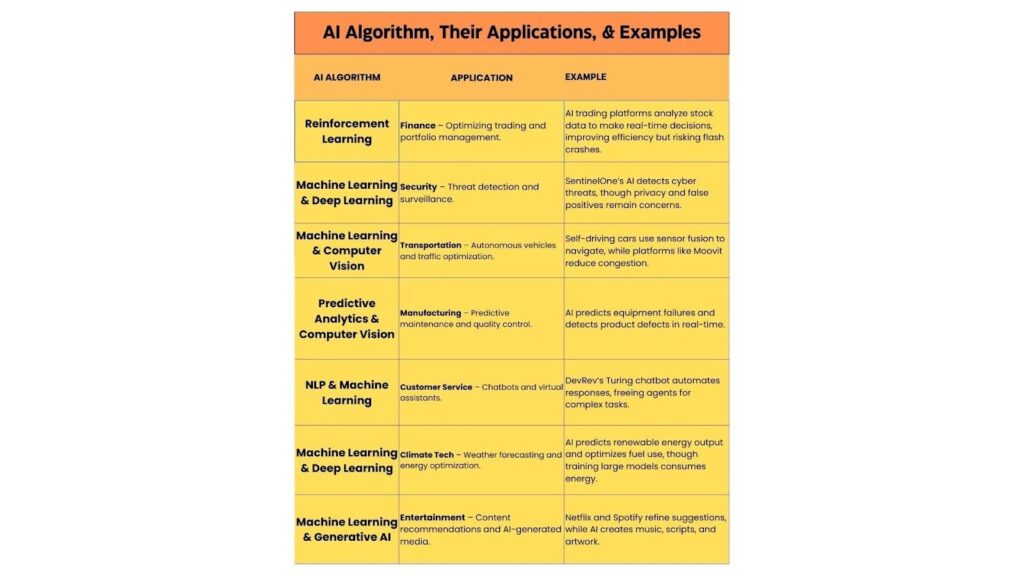

Types of AI Algorithms & How They Are Used

Just like people have different ways of learning—some by reading, some by doing—AI has different types of algorithms, each suited for specific tasks.

1 – Supervised Learning

Imagine a kid learning how to recognize apples and oranges. The teacher labels the pictures as:

“This is an apple.”

“This is an orange.”

Over time, they learn to tell the difference. That’s supervised learning—AI is trained on labeled data and learns to make predictions.

For example,

The AI algorithm of spam filters scans thousands of emails labeled “spam” or “not spam” and learns patterns.

- Does the email contain certain keywords?

- Is it from a suspicious sender?

Over time, it gets better at catching spam before it hits your inbox.

Supervised learning powers regression models, which predict things like housing prices, and classification models, which decide whether an email belongs in spam or your main inbox.
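As a sketch of supervised learning in action, here is a tiny spam classifier built on word counts (a bare-bones Naive Bayes). The four training emails are invented, and a real filter would train on far more data and look at many more signals than words alone.

```python
import math
from collections import Counter


def train(labeled_emails):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    email_counts = Counter()
    for text, label in labeled_emails:
        word_counts[label].update(text.lower().split())
        email_counts[label] += 1
    return word_counts, email_counts


def predict(text, model):
    """Pick the label whose word patterns best match the email."""
    word_counts, email_counts = model
    vocabulary = set(word_counts["spam"]) | set(word_counts["not spam"])
    total_emails = sum(email_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(email_counts[label] / total_emails)
        for word in text.lower().split():
            # Add 1 so unseen words do not zero out the whole score.
            score += math.log((counts[word] + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical labeled training emails.
model = train([
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting notes for monday", "not spam"),
    ("lunch on monday with the team", "not spam"),
])
print(predict("claim your free prize", model))
print(predict("team meeting on monday", model))
```

The labels are what make this supervised: the model only knows “free” is a spam word because people marked those emails as spam first.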

2 – Unsupervised Learning

Now imagine you give that same kid a basket of fruit but don’t tell them which ones are apples or oranges.

Instead, they group them based on similarities—color, shape, texture.

That’s unsupervised learning—AI finds patterns in data without labels.

For example,

Banks don’t always know instantly whether a transaction is fraudulent, but AI can help prevent fraud.

It scans millions of purchases, learning what’s “normal” for each customer and what isn’t.

Let’s say you buy groceries and gas every week. Then suddenly, your card is used for a $5,000 luxury purchase in another country.

AI will flag it as suspicious, and it might freeze your card or send you a quick “Was this you?” message.
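One simple way to capture “normal for this customer” without any labels is to measure how far a new purchase sits from the customer’s usual spending. The sketch below uses a z-score. The past amounts and the threshold of 3 standard deviations are assumptions for illustration, and real fraud systems look at far more than the amount.

```python
from statistics import mean, stdev


def is_unusual(amount, past_amounts, threshold=3.0):
    """Flag a purchase far outside the customer's usual spending range."""
    typical = mean(past_amounts)
    spread = stdev(past_amounts)
    if spread == 0:
        # Every past purchase was identical, so any change stands out.
        return amount != typical
    return abs(amount - typical) / spread > threshold


# Hypothetical weekly grocery and gas purchases.
past_purchases = [62.0, 48.5, 55.0, 70.2, 51.3, 66.8]

print(is_unusual(5000.0, past_purchases))
print(is_unusual(60.0, past_purchases))
```

Nobody told the code which purchases were fraud. It only learned what typical looks like and flags what does not fit.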

3 – Reinforcement Learning

Now let’s say you give the kid a challenge—every time they correctly pick an apple, they get a candy. If they pick the wrong fruit, they lose one.

Over time, they learn the best way to get the most candy. That’s reinforcement learning.

AI does the same thing—it tests different actions, learns from mistakes, and adjusts based on rewards and penalties.

For example,

Self-driving cars don’t start out knowing how to drive.

But after analyzing millions of miles of road data, they get better at braking, merging into traffic, and avoiding obstacles.

Every mistake is a lesson. Every success makes them smarter.
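The candy game can be sketched in a few lines. The learner below keeps a running estimate of how much reward each choice brings, mostly picks the best one, and occasionally tries something else (a strategy known as epsilon-greedy). The reward values and settings are assumptions for illustration. Self-driving systems are vastly more complex.

```python
import random


def learn_fruit_picking(steps=200, epsilon=0.1, step_size=0.1, seed=0):
    """Learn by trial and error which choice earns candy."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    rewards = {"orange": -1.0, "apple": 1.0}  # lose a candy / win a candy
    value = {action: 0.0 for action in rewards}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(list(value))   # explore: try anything
        else:
            action = max(value, key=value.get)  # exploit: best so far
        reward = rewards[action]
        # Move the estimate a little toward the reward just received.
        value[action] += step_size * (reward - value[action])
    return value


values = learn_fruit_picking()
print(max(values, key=values.get))
```

The learner is never told that apples are the right answer. It works that out from rewards and penalties alone.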

4 – Neural Networks & Deep Learning

Some problems are just too complicated for simple rules. That’s where neural networks come in.

They’re designed to work like the human brain, recognizing patterns and making decisions without needing every little instruction spelled out.

For example,

A traditional computer program might struggle to recognize the same face across different angles, lighting, or expressions.

But a deep learning model (a neural network with multiple layers) can learn to recognize faces, no matter the conditions.

AI Algorithms in Real-World Applications

How AI Image Detector Uses Algorithms to Spot AI-Created Images

AI-generated images are now so realistic that people can barely tell them apart from real photos.

But AI image detectors are trained to see beyond the surface.

Technique # 1 – Anomaly Detection

The process begins with anomaly detection, which looks for anything that doesn’t belong.

If an image has unnatural textures, inconsistent lighting, or blurred edges, the AI Image Detector raises a red flag.

Technique # 2 – Generative Adversarial Networks

One way to detect AI-generated images is by looking at the hidden patterns left by the technology that creates them.

These patterns come from image generators such as Generative Adversarial Networks (GANs), which power many AI images.

Just like every artist has a unique style, GANs create patterns that aren’t present in real-world photos.

The AI Image Detector is trained to recognize these patterns, which helps it determine whether an image was generated by AI.

Technique # 3 – Metadata

Beyond just looking at the pixels, an AI Image Detector also examines metadata, which acts like an image’s digital fingerprint.

This data includes details like when and where a photo was taken and which device captured it.

If an image claims to be from 2010 but was actually created by an AI tool last week, AI Image Detector will flag it as suspicious.
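A metadata check like this can be sketched as a few consistency rules. Everything here is hypothetical: the field names, the generator name `ExampleImageGen`, and its release date are invented for illustration, and this is not how any particular detector is implemented.

```python
from datetime import date

# Hypothetical generator name and release date, for illustration only.
KNOWN_GENERATORS = {"ExampleImageGen": date(2023, 1, 1)}


def metadata_red_flags(metadata):
    """Return a list of reasons the metadata looks suspicious."""
    flags = []
    software = metadata.get("software")
    taken = metadata.get("date_taken")
    if software in KNOWN_GENERATORS:
        flags.append("software field names an AI generator")
        if taken is not None and taken < KNOWN_GENERATORS[software]:
            flags.append("claimed date is earlier than the generator's release")
    if not metadata.get("camera_model"):
        flags.append("no camera model recorded")
    return flags


suspicious = {"software": "ExampleImageGen", "date_taken": date(2010, 6, 1)}
print(metadata_red_flags(suspicious))
```

Metadata is easy to edit or strip, so a clean result here would only be one signal among several.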

Bias in AI Algorithms & How to Reduce It

AI is supposed to be fair, but sometimes it isn’t. AI bias can happen in two ways:

- Data Bias – This happens when certain groups are underrepresented in the training data.

- Model Bias – This occurs when the AI makes more mistakes for one group than another, reinforcing unfair outcomes.
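Model bias can be measured directly by comparing error rates across groups. Here is a minimal sketch. The group names and records are invented, and each record is assumed to be a (group, predicted, actual) triple.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the share of wrong predictions for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical model outputs: (group, predicted, actual).
records = [
    ("group A", "hire", "hire"), ("group A", "reject", "reject"),
    ("group A", "hire", "hire"), ("group A", "reject", "hire"),
    ("group B", "reject", "hire"), ("group B", "reject", "reject"),
    ("group B", "reject", "hire"), ("group B", "hire", "hire"),
]
print(error_rate_by_group(records))
```

A large gap between groups, like the one in this toy data, is the kind of signal a bias audit looks for.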

Amazon’s Biased Hiring Tool

Amazon began building an AI hiring tool in 2014 but later had to scrap it because it was biased against women.

The system learned from past hiring data, where more men had been hired for tech roles, so it started favoring male candidates and penalizing resumes that included words like “women’s” (as in “women’s chess club”).

The AI wasn’t trying to be unfair, but it learned from biased data and carried that bias forward.

Privacy Concerns in AI Data Collection

Every time you use an app, browse the web, or make a purchase, data is being collected.

Some of it is obvious – like your name, email, or payment details.

But there’s hidden data like GPS location, purchase history, typing behavior, and browsing habits.

Companies use this information to personalize experiences, recommend products, and improve services.

With so much data floating around, risks are inevitable:

- Data breaches – Hackers can steal user information.

- Re-identification – Even anonymized data can be linked back to individuals.

- Unauthorized use – Companies might misuse data for profit or influence.

Even when companies claim to anonymize data, studies have shown that patterns can reveal user identities with enough information.

To protect user privacy, companies are using:

- Anonymization – Removes personal details from datasets.

- Federated Learning – AI models train on your device without sending raw data to a central server. (e.g., Google’s Gboard).

- Differential Privacy – Adds random noise to data before it is collected, so individual users can’t be singled out (e.g., Apple’s iOS system).
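To show how adding noise can protect individuals while keeping the big picture, here is a sketch of randomized response, a classic technique in the differential privacy family. It is not a description of Apple’s or Google’s actual systems. The 75% truth probability and the simulated users are assumptions for illustration.

```python
import random


def randomized_response(true_answer, rng, p_truth=0.75):
    """Report the truth most of the time, otherwise a coin flip."""
    if rng.random() < p_truth:
        return true_answer
    return rng.random() < 0.5


def estimate_true_rate(reported, p_truth=0.75):
    """Undo the noise on average to recover the overall rate."""
    observed = sum(reported) / len(reported)
    # observed = p_truth * true_rate + (1 - p_truth) * 0.5
    return (observed - (1 - p_truth) * 0.5) / p_truth


rng = random.Random(42)  # fixed seed so the sketch is repeatable
# Simulated users: 30% truly have the sensitive attribute.
true_answers = [i < 6000 for i in range(20000)]
reported = [randomized_response(answer, rng) for answer in true_answers]

print(round(estimate_true_rate(reported), 2))
```

No single reported answer can be trusted, which protects each user, yet the estimate across everyone lands close to the real 30%.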

Can AI Algorithms Be Completely Neutral?

AI isn’t created in a vacuum.

It’s built by humans, trained on human data, and used in human society. So can it ever truly be neutral?

Short answer: No. At least, not yet.

AI learns from real-world data, and that data comes with all the biases, assumptions, and imperfections of the humans who created it.

Take the COMPAS recidivism tool, for example.

It was designed to predict which defendants were most likely to reoffend.

Sounds straightforward, right?

But a 2016 ProPublica analysis found that the algorithm disproportionately flagged Black defendants as high-risk compared to white defendants.

It wasn’t biased because someone programmed it to be, but because it inherited patterns from a flawed criminal justice system.

So, can AI ever be made fair?

Some experts think so.

Researchers have developed fairness constraints—mathematical techniques designed to force AI models to treat different groups more equally.

Bias audits and diverse training datasets also help reduce skewed outcomes.

But even with all these safeguards, true neutrality is tricky.

And even if we could make AI completely “neutral,” should we?

AI doesn’t make decisions in a bubble. It affects real people in real ways.

The reality is, AI reflects the world we feed into it.

If we want unbiased AI, we must first tackle the biases in our systems.

Otherwise, we’re just teaching machines to mirror our flaws—only faster and at scale.

FAQs About AI Algorithms

What Is the Most Common AI Algorithm?

Neural networks—especially deep learning—are at the heart of most AI applications today.

They’re what power tools like ChatGPT, facial recognition software, and the recommendation systems that suggest what to watch or buy next.

Are AI Algorithms the Same as Machine Learning?

Not exactly. AI is the big umbrella that covers many different technologies, and machine learning is just one piece of it.

Machine learning specifically refers to AI systems that learn patterns from data rather than following strict, pre-programmed rules.

But not all AI relies on machine learning—some use other methods like rule-based systems.

How Do AI Algorithms Improve Over Time?

AI improves through experience—kind of like humans do.

The more data an algorithm processes, the better it gets at spotting patterns and making accurate predictions.

Fine-tuning its parameters, using techniques like reinforcement learning, and continuously updating its training data all help refine its performance.

Final Thoughts: The Future of AI Algorithms

So what does all this mean for us?

AI is influencing our decisions on a daily basis. It shapes what we watch, what we buy, and even how safe our bank account is.

But here’s the question…

If AI is learning from us, what are we teaching it?

Are we making sure it’s fair, unbiased, and helpful? Or are we letting it pick up the same mistakes humans make?

And if AI keeps getting smarter, what happens next? Will it always be a tool we control, or could it one day start making choices we don’t fully understand?

Maybe the biggest question isn’t what AI can do, but what we should let it do.

What do you think?