Look around the internet today and you’ll almost certainly find AI in every shape and form.

It’s no longer something out of a sci-fi flick.

It powers your phone’s autocorrect, your Netflix recommendations, and the ChatGPT tab you probably have open right now. But it didn’t appear overnight like a viral TikTok sensation; it has been evolving before our very eyes for decades.

It’s amazing how far we’ve come from “what if machines were able to think” to “ChatGPT, outline me a year-long business strategy.”

Along the way, AI has gone through plenty of failures and breakthroughs, and it has attracted many brilliant thinkers.

In this post, we won’t just walk down memory lane; we’ll also find out how AI became part of everyday conversation.

Spoiler alert: it involves way more math anxiety and existential dread than you’d expect.

Key Takeaways

- AI research began in the 1940s with early computer pioneers dreaming of thinking machines.

- The field officially launched in 1956 at the Dartmouth Conference, whose organizers coined the term “artificial intelligence.”

- AI experienced multiple “winters” where funding dried up and the spotlight faded.

- Modern AI breakthroughs came from combining massive datasets with powerful computing.

- Today’s generative AI represents the latest chapter in a 70-year story of human ambition.

How AI Evolved From Theory to Reality

Think of AI’s history like your favorite band’s career.

It started with the underground years when only real fans paid attention.

Then came the mainstream breakthrough that everyone claims they saw coming.

A few flops and comebacks later, they’re suddenly everywhere, and your parents are asking about them.

AI followed this exact trajectory. Early researchers weren’t trying to build ChatGPT.

They were asking fundamental questions: Can machines think? Can they learn? Can they solve problems like humans do?

The answer turned out to be “sort of, but it’s complicated.”

Origins of AI (Pre-1950s)

Before we had computers, we had dreamers. Ancient myths told stories of artificial beings brought to life.

Greek mythology gave us Talos, the bronze giant who protected Crete. Jewish folklore had golems, which are clay creatures animated by mystical words.

But the real AI origin story starts with Alan Turing, the mathematician who helped break Nazi codes during World War II and laid the groundwork for modern computing. Talk about multitasking.

In 1936, Turing introduced the concept of a universal computing machine.

This theoretical device could perform any calculation if given the right instructions.

It sounds a little boring until you realize this idea became the foundation for every computer you’ve ever used, including the one you’re reading this article on.
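Turing’s idea that one machine can carry out any computation, given the right instructions, can be sketched as a small interpreter. The `run` function below knows nothing about arithmetic; it simply follows whatever transition table it is handed. The table format, the state names, and the binary-increment machine `INCREMENT` are illustrative assumptions, and this is a simplified modern take in the same spirit, not a literal universal Turing machine or Turing’s 1936 construction.

```python
# Minimal Turing machine interpreter: one generic program that runs any
# machine described by a transition table. A simplified modern sketch,
# not Turing's original 1936 formulation.
from typing import Dict, Tuple

BLANK = "_"

# (current state, symbol read) -> (symbol to write, head move, next state)
# Head moves are -1 (left), +1 (right), or 0 (stay).
Table = Dict[Tuple[str, str], Tuple[str, int, str]]


def run(table: Table, tape: str, state: str = "start", max_steps: int = 10_000) -> str:
    """Follow the table until the machine enters 'halt'; return the tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, BLANK)
        write, move, state = table[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    left, right = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(left, right + 1)).strip(BLANK)


# Hypothetical example machine: add 1 to a binary number.
INCREMENT: Table = {
    ("start", "0"): ("0", +1, "start"),      # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # past the end: step back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", 0, "halt"),        # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left edge: new leading 1
}

print(run(INCREMENT, "1011"))  # 1100
```

Swapping in a different table gives you a different machine without touching `run`, which is the core of the “right instructions” idea.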

The war accelerated everything and created a need for innovation. Suddenly, governments were pouring money into any technology that might give them an edge.

The first electronic computers emerged from this pressure cooker environment.

Machines like ENIAC filled entire rooms and needed teams of engineers to operate, but they could calculate in seconds what took humans hours.

By the late 1940s, researchers began wondering: if these machines can calculate, can they think?

1950s: The Birth of Artificial Intelligence

The year 1956 was AI’s main character moment. A group of researchers gathered at Dartmouth College in New Hampshire for a summer workshop that would change everything.

John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon basically locked themselves in a room and decided to create thinking machines.

They had coined the term “artificial intelligence” in their 1955 proposal for the workshop, and they laid out an ambitious roadmap.

These researchers believed that within a generation, machines would be able to solve any problem humans could solve.

Ultimately, they were off by several decades, but their confidence was admirable.

The Dartmouth Conference launched AI as a legitimate field of study.

Suddenly, universities were creating AI labs, governments were writing checks, and researchers were making bold predictions about the future.

Alan Turing had already given them a head start with his famous test, proposed in his 1950 paper “Computing Machinery and Intelligence.”

The Turing Test asked a simple question: if you’re having a conversation with something and can’t tell if it’s human or machine, does it matter?

It’s the ultimate “fake it till you make it” philosophy, and it’s still relevant today.

1960s–1970s: Early Optimism and First Models

The 1960s started with incredible momentum. Researchers had funding, media attention, and a clear mission. What could go wrong?

Everything, as it turned out.

Early AI programs worked well in controlled environments but fell apart when faced with real-world complexity.

It’s like being amazing at playing basketball in your driveway but completely shutting down during an actual game.

ELIZA, created by Joseph Weizenbaum in 1964, could carry on conversations by recognizing keywords and responding with pre-programmed phrases.

It was a more sophisticated version of the Magic 8-Ball toy, and people loved it.

ELIZA worked by pattern matching and substitution. If you said “I am sad,” it might respond with “Why are you sad?”

It was simple but effective enough to fool some users into thinking they were talking to a real therapist. Weizenbaum was horrified when people started forming emotional attachments to his program.
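The pattern matching and substitution described above can be sketched in a few lines of Python. The rules, the pronoun “reflection” table, the fallback reply, and the function names `respond` and `reflect` are made-up examples in the spirit of ELIZA, not Weizenbaum’s original DOCTOR script.

```python
# Toy ELIZA-style responder: keyword patterns plus pronoun "reflection".
# The rules below are hypothetical examples, not Weizenbaum's DOCTOR script.
import re

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "are": "am",
}

# Ordered (pattern, response template) pairs; the first match wins.
RULES = [
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "Why are you {0}?"),
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

FALLBACK = "Please, go on."


def reflect(fragment: str) -> str:
    """Replace each word with its reflected form, if it has one."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence: str) -> str:
    """Return the template of the first matching rule, filled with reflected text."""
    text = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK


print(respond("I am sad"))  # Why are you sad?
```

There is no understanding involved: the program never models what “sad” means, which is why this approach fell apart outside short, therapist-style exchanges.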

Undetectable AI’s Ask AI can help you explore this history. You can use it to simulate or explain how early AI models like ELIZA worked compared to modern language models.

The difference is staggering. ELIZA was playing word association, while today’s AI can track context across a conversation and generate coherent responses.

Meanwhile, researchers were tackling more ambitious projects. Terry Winograd’s SHRDLU could understand and manipulate objects in a virtual world made of blocks.

It could follow complex instructions like “Put the red block on top of the green one, but first move the blue block out of the way.”

SHRDLU was impressive, but it only worked in its tiny block world. Try to expand it to the real world, and it would crash harder than your laptop during finals week.

The problem wasn’t just technical. Researchers were discovering that intelligence is way more complicated than they thought.

Things humans do effortlessly, like recognizing a face or understanding sarcasm, turned out to be incredibly difficult for machines.

1980s: Expert Systems and Commercial AI

Just when everyone thought AI was dead after the funding cuts of the 1970s, it came back with a vengeance. The 1980s brought expert systems, and suddenly, AI was making real money.

Expert systems were different from earlier AI approaches. Instead of trying to replicate general intelligence, they focused on specific domains where human experts had deep knowledge.

Think of them as really smart, really specialized consultants.

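- MYCIN, developed at Stanford in the 1970s, diagnosed bacterial blood infections and recommended antibiotics.

- DENDRAL, which dates back to the 1960s, inferred the structure of chemical compounds from mass spectrometry data.

- XCON, used by Digital Equipment Corporation in the 1980s, configured orders for VAX computer systems.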

These programs captured the knowledge of human experts and made it available to others.

The key insight was that you didn’t need general intelligence to be useful.

You just needed to be really good at one thing. It’s like that person who knows everything about Marvel movies but can’t remember where they left their keys.

Companies started paying attention. Expert systems could solve real problems and save real money. Medical diagnosis, financial planning, equipment troubleshooting—AI wasn’t just an academic curiosity anymore.

The Japanese government launched the Fifth Generation Computer Systems project in 1982, aiming to build intelligent computers by the 1990s. Other countries panicked and started their own AI initiatives.

The space race was over, so why not have an AI race instead?

Expert systems had limitations, though. They required extensive knowledge engineering: manually encoding human expertise into computer-readable rules.

It was like trying to teach someone to ride a bike by writing down every possible scenario they might encounter.
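Knowledge engineering in miniature looks like this: expertise becomes if-then rules, and an inference engine chains them together. The sketch below uses forward chaining, one common strategy (MYCIN itself reasoned backward from hypotheses), and its equipment-troubleshooting rules, fact names, and the `infer` function are invented for illustration.

```python
# Toy forward-chaining rule engine in the style of an expert system.
# The rules are hypothetical, made-up examples, not real engineering knowledge.
from typing import FrozenSet, List, Set, Tuple

# A rule fires when all of its conditions are known facts,
# adding its conclusion to the set of known facts.
Rule = Tuple[FrozenSet[str], str]

RULES: List[Rule] = [
    (frozenset({"no_power_light", "plugged_in"}), "faulty_power_supply"),
    (frozenset({"power_light", "no_display"}), "check_monitor_cable"),
    (frozenset({"faulty_power_supply"}), "replace_power_supply"),
]


def infer(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(infer({"no_power_light", "plugged_in"}, RULES)))
# ['faulty_power_supply', 'no_power_light', 'plugged_in', 'replace_power_supply']
```

Every situation the rules don’t cover needs another hand-written rule, which is exactly the scaling problem that made these systems expensive to maintain.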

1990s: AI Goes Mainstream (Quietly)

The 1990s were AI’s awkward teenage years. The field was going through changes, finding its identity, and definitely not talking about its feelings.

The expert systems boom had cooled off. These systems were expensive to maintain and couldn’t adapt to new situations. Companies started looking for alternatives.

But AI didn’t disappear. It just stopped calling itself AI.

Machine learning techniques that had been brewing in academic labs started finding practical applications.

AI was everywhere in the form of email spam filters, credit card fraud detection, and recommendation systems, but nobody was bragging about it.

This was smart marketing. The term “artificial intelligence” carried too much baggage from previous cycles. People found it better to talk about “statistical analysis,” “pattern recognition,” or “decision support systems.”

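The real breakthrough came from a shift in approach. Instead of hand-coding rules the way expert systems did, researchers increasingly let programs learn patterns from data using statistical machine learning.

Still, nobody called it AI. That would have been way too obvious.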
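The move from hand-written rules to learning from data is easy to see in a spam filter. The sketch below is a minimal naive Bayes classifier, a classic statistical technique for this task; the training messages, the labels, and the `train` and `classify` functions are made up for illustration, and real filters use far more data and features.

```python
# Toy naive Bayes spam filter: it learns word statistics from labeled
# examples instead of relying on hand-written rules.
# The training messages are invented for illustration.
import math
from collections import Counter
from typing import Dict, List, Set, Tuple

Model = Tuple[Dict[str, Counter], Counter, Set[str]]


def train(examples: List[Tuple[str, str]]) -> Model:
    """Count words per label and how often each label occurs."""
    word_counts: Dict[str, Counter] = {}
    label_counts: Counter = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab


def classify(text: str, model: Model) -> str:
    """Pick the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = "", -math.inf
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total)  # prior
        denom = sum(counts.values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / denom)  # smoothed likelihood
        if score > best_score:
            best_label, best_score = label, score
    return best_label


TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch with the team tomorrow", "ham"),
]

model = train(TRAINING)
print(classify("free prize money", model))  # spam
```

Nobody wrote a rule saying “free” is suspicious; the filter picked that up from the examples, and feeding it new mail updates its behavior without any re-engineering.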

2000s: Foundations of Modern AI

The 2000s laid the groundwork for everything happening in AI today.

It’s like the training montage in a sports movie, except it lasted a decade and involved way more math.

Several factors converged to create perfect conditions for AI advancement. Computers were getting cheaper and more powerful.

The internet had created massive datasets. And researchers were starting to figure out how to train deep neural networks effectively.

Meanwhile, tech companies were quietly building AI into everything.

Google used statistical models trained on web-scale data for features like spelling suggestions and Google Translate. Amazon’s recommendation engine drove billions in sales. Facebook’s news feed algorithm determined what millions of people saw every day.

The iPhone launched in 2007, putting powerful computers in everyone’s pocket and generating unprecedented amounts of personal data.

Every tap, swipe, and search became a data point that could train better AI systems.

By the end of the decade, AI was embedded in the digital infrastructure of modern life.

Most people didn’t realize it, but they were interacting with AI systems dozens of times per day.

2010s: Deep Learning and Big Data

The 2010s were when AI went from “neat technical trick” to “holy crap, this changes everything.”

Deep learning kicked off the decade with a bang. In 2012, a neural network called AlexNet crushed the competition in the ImageNet image recognition contest.

It didn’t just edge out other AI systems: it got about 15% of images wrong by the contest’s top-5 measure, versus 26% for the runner-up.

This wasn’t supposed to happen yet.

The secret ingredients were bigger datasets, more powerful computers, and better training techniques.

Graphics processing units (GPUs), originally designed for video games, turned out to be perfect for training neural networks. Gamers accidentally created the hardware that would power the AI revolution.

The media couldn’t get enough. Every AI breakthrough made headlines. Deep Blue beating Kasparov at chess in 1997 was impressive, but AlphaGo beating Lee Sedol, one of the world’s top Go players, in 2016 was mind-blowing.

Go was supposed to be too complex for computers to master.

Feeling in over your head with these advanced technologies? Modern AI tools like Undetectable AI’s AI Chat can explain complex AI concepts like convolutional neural networks or reinforcement learning to non-technical audiences.

The same deep learning techniques that power image recognition also power today’s language models.

Autonomous vehicles captured everyone’s imagination. Self-driving cars went from science fiction to “coming next year” (a promise that’s still being made, but with more caution these days).

Virtual assistants became mainstream. Siri, Alexa, and Google Assistant brought AI into millions of homes.

Everyone was now having conversations with their devices, even if those conversations were mostly “play my music” and “what’s the weather?”

In 2017, Google researchers introduced the transformer, a neural network architecture built around a mechanism called attention.

These innovations would prove crucial for the next phase of AI development, even though most people had never heard of them.

2020s: Generative AI and Large Language Models

The 2020s started with a pandemic, but AI researchers were too busy changing the world to notice.

OpenAI’s GPT models went from interesting research projects to cultural phenomena. GPT-3 launched in 2020 and blew everyone’s minds with its ability to write coherent text on almost any topic.

Then ChatGPT arrived in late 2022 and broke the internet. Within five days it had more than a million users, many of them talking to an AI for the first time.

Students were using it for homework. Workers were automating parts of their jobs. Content creators were generating ideas faster than ever.

The response was immediate and intense. Some people were amazed. Others were terrified. Most were somewhere in between, trying to figure out what this meant for their careers and their kids’ futures.

Generative AI became the biggest tech story since the iPhone.

Every company started adding AI features. Every startup claimed to be “AI-powered.”

Every conference had at least twelve panels about the future of artificial intelligence.

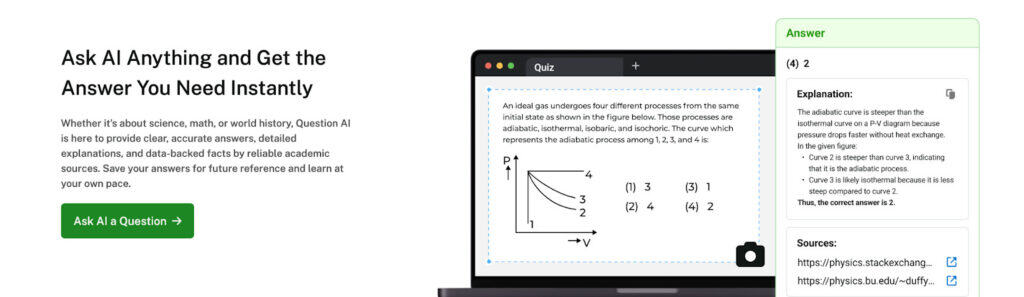

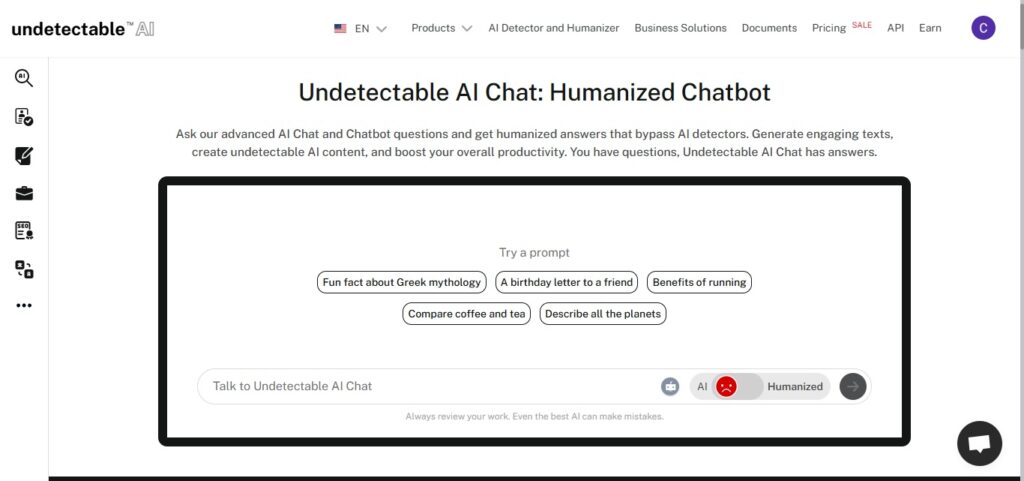

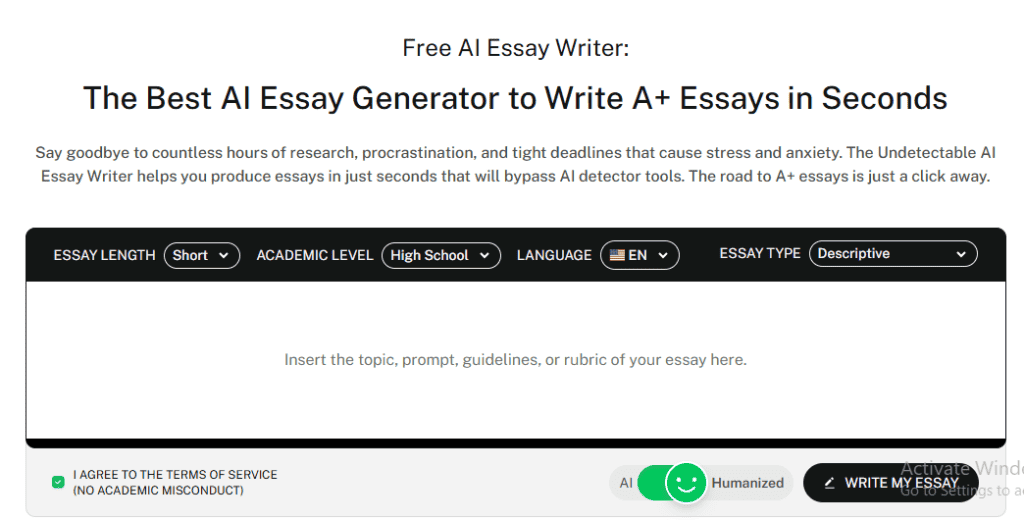

This is where tools like Undetectable AI’s AI SEO Writer, AI Essay Writer, and AI Humanizer fit into the story.

These modern applications represent the practical evolution of generative AI technology. They apply the same kind of large language model technology behind ChatGPT to specific use cases.

Image generation followed a similar trajectory. DALL-E, Midjourney, and Stable Diffusion could create photorealistic images from text descriptions. Artists were excited and worried in equal measure.

The technology improved at breakneck speed. Models got bigger, smarter, and more capable. GPT-4 could pass professional exams and write code.

Anthropic’s Claude could hold nuanced conversations about complex topics. Google’s Bard (since renamed Gemini) could search the web and provide current information.

Major Milestones in AI History

Some moments in AI history deserve special recognition.

These aren’t just technical achievements but cultural turning points that changed how we think about artificial intelligence.

- The Dartmouth Conference (1956) officially launched the field and gave AI its name. Without this gathering, we might be calling it “machine intelligence” or “computational thinking” or something equally boring.

- Deep Blue defeating Garry Kasparov at chess (1997) was AI’s first mainstream moment. Millions watched a computer outthink one of humanity’s greatest strategic minds. The future suddenly felt very real and slightly scary.

- IBM Watson winning at Jeopardy! (2011) showed that AI could handle natural language and general knowledge. Watching a computer nail the Daily Double was both impressive and unsettling.

- The ImageNet breakthrough (2012) kicked off the deep learning revolution. AlexNet’s victory in the image recognition contest proved that neural networks were ready for prime time.

- AlphaGo beating Lee Sedol at Go (2016) was a technical masterpiece. Go has more possible board positions than atoms in the observable universe, yet DeepMind’s system found winning strategies that human experts had never considered.

- GPT-3’s release (2020) opened the door to AI content generation. Through OpenAI’s API, developers could build a powerful language model into their own apps and websites.

- ChatGPT’s launch (2022) brought AI to the masses. Within two months, it reportedly reached 100 million users, which made it the fastest-growing consumer application in history at the time.
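The Go comparison in the milestones list can be checked with back-of-the-envelope arithmetic. Each of the 361 points on a 19 × 19 board is empty, black, or white, which gives a simple upper bound on board configurations. The atom count uses the commonly cited estimate of about 10^80, and only a fraction of these configurations are legal positions, though even that fraction is on the order of 10^170.

```latex
3^{361} = 10^{361 \log_{10} 3} \approx 10^{361 \times 0.4771} \approx 10^{172.2} \approx 1.7 \times 10^{172}
\qquad \text{vs.} \qquad N_{\text{atoms}} \approx 10^{80}
```

Even the legal positions outnumber the atoms by a factor of roughly 10^90, which is why brute-force search was never an option for Go.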

Each milestone built on previous work while opening new possibilities.

That’s how progress works: gradual improvements punctuated by moments of breakthrough that make everyone reconsider what’s possible.

AI Winters and Comebacks

AI’s history isn’t a straight line of progress. It’s more like a roller coaster designed by someone with commitment issues.

The field has experienced several “AI winters”: periods when funding dried up, researchers switched fields, and the media declared AI dead.

These weren’t just minor setbacks but existential crises that nearly killed AI research entirely.

What Caused the AI Winters?

The first AI winter hit in the mid-1970s. Early researchers had made bold predictions about achieving human-level intelligence within decades. When those predictions didn’t pan out, disappointment set in.

Government funding agencies started asking uncomfortable questions. Where were the thinking machines they’d been promised?

Why were AI systems still so limited? What exactly were researchers doing with all that money?

The British government commissioned the Lighthill Report in 1973, which deemed AI research overhyped and underdelivering.

Funding was cut dramatically. Similar reviews in other countries reached similar conclusions.

The second AI winter arrived in the late 1980s after the expert systems bubble burst. Companies had invested heavily in AI technology but found it difficult to maintain and scale.

The market collapsed, taking many AI startups with it.

Both winters shared common themes. Unrealistic expectations led to oversized promises. When reality didn’t match the hype, backlash was inevitable.

Researchers learned valuable lessons about managing expectations and focusing on practical applications.

The Future of AI: What’s Next?

Predicting AI’s future is like trying to forecast the weather using a Magic 8-Ball. Possible, but your accuracy rate probably won’t impress anyone.

Still, some trends seem likely to continue. AI systems will get more capable, more efficient, and more integrated into daily life.

The question isn’t whether AI will become more powerful – it’s how society will adapt to that power.

- Generative AI will probably get better at creating content that’s indistinguishable from human work. Artists, writers, and content creators will need to figure out how to compete with or collaborate with AI systems.

- Autonomous systems will become more common. Self-driving cars might finally live up to their promises. Delivery drones could fill the skies. Robot workers might handle dangerous or repetitive jobs.

- AI safety research will become increasingly important as systems grow more powerful. We’ll need better ways to ensure AI systems behave as intended and don’t cause unintended harm.

- The economic implications are staggering. Some jobs will disappear. New jobs will emerge. The transition could be smooth or chaotic, depending on how well we prepare.

- Regulation will play a bigger role. Governments are already working on AI governance frameworks. The challenge is creating rules that protect people without stifling innovation.

- The democratization of AI will continue. Tools that once required PhD-level expertise are becoming accessible to everyone. This could unleash tremendous creativity and innovation, or it could create new problems we haven’t anticipated yet.

This Story Writes Itself… Almost

AI’s history is a testament to human ambition, turning impossible dreams into reality.

From Turing’s theories to today’s generative models, progress came from attacking seemingly unsolvable problems with persistence.

Each era felt revolutionary, but today’s rapid pace and scale are unprecedented.

Today’s AI is the product of decades of work by brilliant minds. The story is far from over. The next breakthrough could come from anywhere, and its impact will depend on the choices we make now.

We’ve imagined artificial minds for millennia, and today we’re building them.

With Undetectable AI’s AI SEO Writer, AI Chat, AI Essay Writer, and AI Humanizer, you can create high-quality, natural-sounding content that’s optimized, engaging, and uniquely yours.

Try Undetectable AI and take your AI-powered writing to the next level.