If you go digging around the internet for an answer to how much energy ChatGPT uses, you’ll probably find something like this:

“ChatGPT uses 10 times more electricity than a Google Search.”

If you follow the trail, this claim leads to a 170-page IEA report, which links to a paper quoting a likely estimate from an Alphabet chairman… based on data from 2009. Yikes!

In reality, Google’s newer search operations are about 10x more efficient than in 2009, and ChatGPT’s actual energy use per request today is way lower than those early alarm bells suggested.

Newer models like GPT-4o have made major strides in efficiency, meaning the headline numbers floating around are outdated and, honestly, a bit misleading.

So, what’s the real picture? That’s exactly what we’re diving into here.

Your takeaways will be:

- What powers ChatGPT under the hood?

- How much energy does one ChatGPT query use?

- How does its energy use compare to Google Search and other AI models?

- What is OpenAI doing to reduce its impact?

- What steps can you take as a user to make your AI use more responsible?

Let’s get into it.

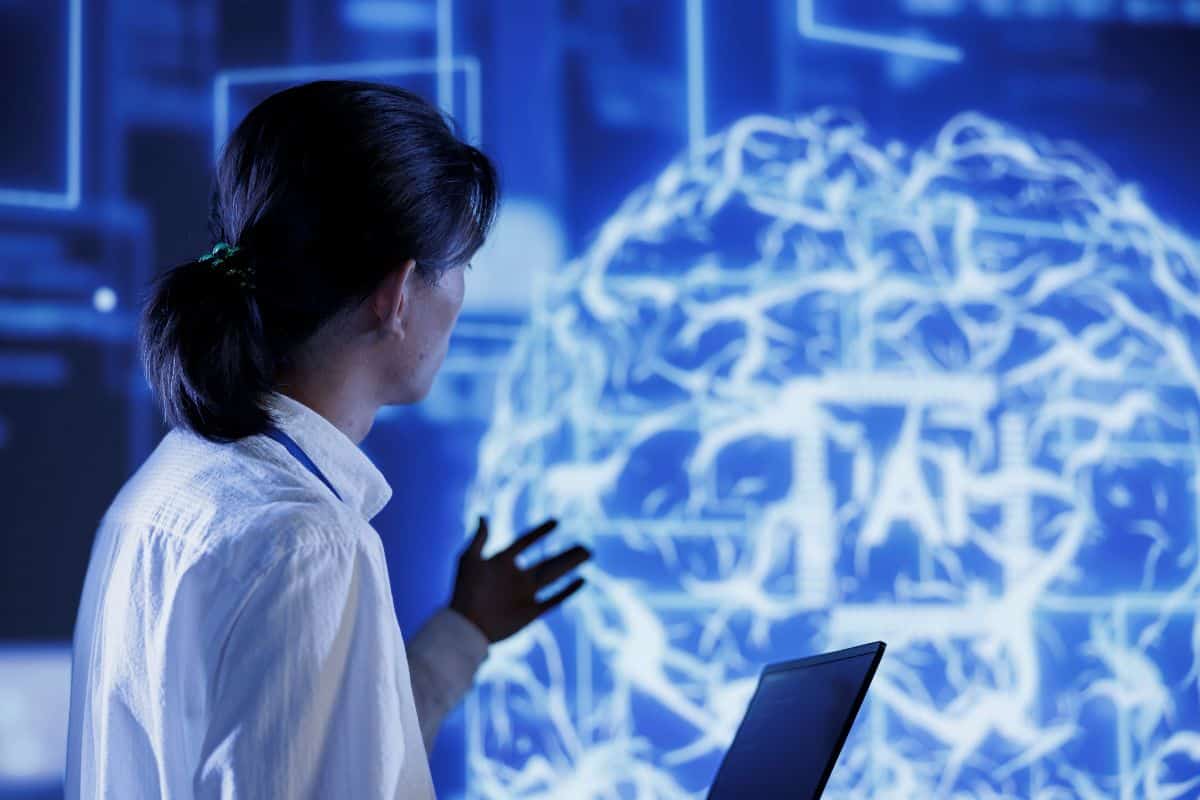

What Powers ChatGPT?

Mark Russinovich, CTO of Microsoft Azure, gave us a peek behind the curtain in a podcast that Microsoft published in 2023.

According to him, Microsoft built an infrastructure capable of training models with hundreds of billions of parameters.

GPT-3, for example, had 175 billion parameters, which already sounds like a lot until you hear that Microsoft and NVIDIA’s Megatron-Turing NLG model flexed 530 billion.

Hardware-wise, the original 2020 OpenAI supercomputer stitched together over 285,000 AMD InfiniBand-connected CPU cores plus 10,000 NVIDIA V100 Tensor Core GPUs.

The newer H100 virtual machine series packs up to eight NVIDIA H100 Tensor Core GPUs into each VM and scales out to thousands of GPUs: a far bigger version of the low-latency, high-uptime infrastructure used to host game servers like Minecraft.

In short, if you’re wondering what powers ChatGPT, the answer is basically: all of it.

All the cores, all the GPUs, all the network bandwidth you could ever hoard in your dreams.

Estimated Energy Use of ChatGPT

Now, onto the real question: how much energy does ChatGPT use per question?

You might expect it to be apocalyptic, like it melts glaciers with every prompt you type.

But actually, recent estimates say your typical ChatGPT query on GPT-4o clocks in at about 0.3 watt-hours (Wh).

That’s about ten times less than older (and likely inaccurate) estimates from early 2023 that pegged it at 3 watt-hours.

The leap in efficiency comes down to better models, more efficient hardware, and the realization that older estimates assumed far more tokens per query than is typical.

For scale, 0.3 watt-hours is less energy than what your laptop uses while you reheat your coffee.
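To sanity-check the coffee comparison, here’s a back-of-the-envelope sketch. The laptop power draw (45 W) and the reheat time (2 minutes) are illustrative assumptions, not measurements, so plug in your own numbers.

```python
# Back-of-the-envelope check: energy (Wh) = power (W) x time (h).
# LAPTOP_WATTS and REHEAT_MINUTES are illustrative assumptions, not measurements.
LAPTOP_WATTS = 45.0      # assumed average laptop draw during light use
REHEAT_MINUTES = 2.0     # assumed time to reheat a cup of coffee
CHATGPT_QUERY_WH = 0.3   # per-query estimate for GPT-4o cited above


def energy_wh(watts: float, minutes: float) -> float:
    """Convert a constant power draw over a duration into watt-hours."""
    return watts * minutes / 60.0


laptop_wh = energy_wh(LAPTOP_WATTS, REHEAT_MINUTES)
print(f"Laptop during reheat: {laptop_wh:.2f} Wh")   # 1.50 Wh
print(f"One ChatGPT query:    {CHATGPT_QUERY_WH:.2f} Wh")
print(f"Ratio: {laptop_wh / CHATGPT_QUERY_WH:.1f}x")  # 5.0x
```

Under these assumptions, the laptop burns several queries’ worth of energy in the time it takes the microwave to beep.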

Even if you’re slamming out heavy queries all day, your daily ChatGPT energy use is still pretty tame compared to, say, running your AC or gaming PC. But that’s just your usage.

OpenAI had over 400 million weekly users as of February 2025, according to what an OpenAI spokesperson told Reuters. That adds up to a lot of watt-hours, even if everyone is being efficient about it.
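Here’s a rough sketch of how the per-query figure scales from one person to the whole user base. The 0.3 Wh and 400 million weekly users come from the text; the query counts (50 a day for a heavy user, 10 a week for the average weekly user) are made-up assumptions for illustration, since OpenAI doesn’t publish usage distributions.

```python
# Scale the per-query estimate up to one user's day and the whole user base's week.
# QUERY_WH and WEEKLY_USERS come from the article; query counts are assumptions.
QUERY_WH = 0.3                 # estimated energy per GPT-4o query (Wh)
WEEKLY_USERS = 400_000_000     # weekly users cited above

HEAVY_USER_QUERIES_PER_DAY = 50   # assumption: a heavy individual user
AVG_QUERIES_PER_USER_WEEK = 10    # assumption: purely illustrative average


def daily_wh(queries_per_day: int, wh_per_query: float = QUERY_WH) -> float:
    """Energy one user consumes per day, in watt-hours."""
    return queries_per_day * wh_per_query


def fleet_mwh_per_week(users: int, queries_per_user: int,
                       wh_per_query: float = QUERY_WH) -> float:
    """Aggregate weekly energy across all users, in megawatt-hours."""
    return users * queries_per_user * wh_per_query / 1_000_000


print(f"Heavy user: {daily_wh(HEAVY_USER_QUERIES_PER_DAY):.0f} Wh/day")  # 15
print(f"All users:  {fleet_mwh_per_week(WEEKLY_USERS, AVG_QUERIES_PER_USER_WEEK):,.0f} MWh/week")  # 1,200
```

Fifteen watt-hours a day is trivial for one person; the same per-query number multiplied across hundreds of millions of users lands in the megawatt-hour range.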

It’s important to note that this 0.3 watt-hour figure is on the cautious side, meaning it’s more likely to overstate than understate a typical query.

Many everyday queries are probably cheaper than that.

But queries that involve massive amounts of input, extensive outputs, or heavy-duty reasoning models can still push consumption much higher.
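A crude way to see why long replies and reasoning models push the number up is to assume energy grows linearly with the number of tokens generated. That’s a simplification (input length, model size, and hardware all matter too), and the 500-token baseline below is a hypothetical reference point for the 0.3 Wh figure, not a published parameter.

```python
# Toy model: energy grows linearly with tokens generated.
# BASELINE_TOKENS is a hypothetical reply length assumed to match the 0.3 Wh figure;
# real costs also depend on input length, model size, and hardware.
BASELINE_WH = 0.3
BASELINE_TOKENS = 500  # hypothetical "typical" reply length


def estimate_wh(output_tokens: int) -> float:
    """Scale the baseline estimate linearly by reply length (a simplification)."""
    return BASELINE_WH * output_tokens / BASELINE_TOKENS


for tokens in (100, 500, 5_000, 20_000):  # short answer ... long reasoning trace
    print(f"{tokens:>6} tokens -> ~{estimate_wh(tokens):.2f} Wh")
```

Under this toy model, a reasoning model that writes thousands of intermediate tokens before answering lands well above the headline figure.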

Energy Use: GPT-4 vs GPT-3.5

Even though GPT-3.5 has been sunsetted, its legacy still lingers, especially when comparing ChatGPT’s energy use across versions.

Here’s what the data says about its energy use compared to GPT-4.

For a GPT-3 style model with about 175 billion parameters, emissions were already intense, but moving to a GPT-4 style model with its heavier architecture could jack emissions up by around 200 times.

According to George Hotz, GPT-4 is eight instances of a 220B-parameter GPT-3-ish model stitched together.

But importantly, only two of these models are reportedly routed to during inference, which means the real action happens with about 280B effective parameters once you account for some parameter sharing (~55B for attention mechanisms).

Compare that to GPT-3.5’s 175B setup, and you start to understand why GPT-4’s inference costs are up to three times higher.

The growth in energy use outstrips the mere increase in parameter count, which should have suggested only a 1.6x rise.

That’s not what happened: GPT-4’s queries are more expensive than parameter count alone would predict.
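The arithmetic behind the 1.6x figure is simple: divide GPT-4’s reported effective parameters by GPT-3.5’s parameter count, then compare that against the reported cost increase. All three inputs are the unconfirmed estimates quoted above; OpenAI hasn’t published GPT-4’s architecture.

```python
# Compare the parameter-count ratio with the reported inference-cost ratio.
# All figures are unconfirmed third-party estimates quoted in the text.
GPT35_PARAMS_B = 175         # GPT-3.5 parameters (billions)
GPT4_ACTIVE_PARAMS_B = 280   # GPT-4 effective parameters per query (billions, rumored)
REPORTED_COST_RATIO = 3.0    # "up to three times higher" inference cost

param_ratio = GPT4_ACTIVE_PARAMS_B / GPT35_PARAMS_B
extra_factor = REPORTED_COST_RATIO / param_ratio  # cost not explained by size alone

print(f"Parameter ratio: {param_ratio:.1f}x")  # 1.6x
print(f"Extra factor beyond parameter count: {extra_factor:.1f}x")
```

The leftover factor is the part the paragraph attributes to GPT-4’s queries simply costing more than their size suggests.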

The Carbon Footprint of AI Models

Running large AI models isn’t cheap, for the planet or the power bill.

These models require serious computing muscle, which means lots of electricity and, in many cases, lots of emissions.

For example, GPT-3 reportedly required around 1,287 MWh of electricity to train, producing roughly 552 metric tons of CO2-equivalent (well over a million pounds).
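Dividing emissions by energy gives the average carbon intensity of the electricity used, which is exactly the number a cleaner power supply can lower. Here’s a quick sketch using the GPT-3 training estimates from Patterson et al. (2021), about 1,287 MWh and about 552 tCO2e; treat both as published estimates, not measurements you can reproduce.

```python
# Implied grid carbon intensity = emissions / energy.
# Inputs are published estimates for GPT-3's training run.
TRAINING_MWH = 1_287        # estimated training energy (MWh)
TRAINING_TCO2E = 552        # estimated emissions (metric tons CO2-equivalent)
LB_PER_METRIC_TON = 2_204.62

kwh = TRAINING_MWH * 1_000        # 1 MWh = 1,000 kWh
kg_co2e = TRAINING_TCO2E * 1_000  # 1 metric ton = 1,000 kg

intensity = kg_co2e / kwh         # kg CO2e per kWh
print(f"Implied intensity: {intensity:.3f} kg CO2e/kWh")  # 0.429
print(f"Total in pounds: {TRAINING_TCO2E * LB_PER_METRIC_TON:,.0f} lb CO2e")
```

The pounds line makes the unit question concrete: hundreds of metric tons works out to more than a million pounds.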

Newer models like GPT-4o may well be bigger, but OpenAI hasn’t released their parameter counts or exact footprint.

Beyond OpenAI’s ChatGPT, you’ve got Meta’s AI assistant, which is likely running on Llama 3.2 models (either 11B or 90B parameters).

Again, exact figures of CO2 emissions are not available.

Anthropic’s Claude 3.5 Sonnet weighs in much heavier, estimated at around 400 billion parameters with no disclosure of its exact carbon footprint.

And Google’s Gemini? It comes in “Flash” and “Pro” variants, but Google hasn’t revealed their parameter counts.

It’s reasonable to guess they’re in the same ballpark as GPT-4o or its mini variants.

And speaking of streamlined models, DeepSeek-V3 is out here flexing with just 37 billion active parameters (out of 671B total).

Its R1 reasoning variant shows strong performance and, with so few active parameters, likely sips energy more delicately per token than GPT-4o.
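Mixture-of-experts models like DeepSeek-V3 only run a fraction of their weights for each token. A common rule of thumb (an approximation, not an exact cost model) is that a forward pass costs about 2 FLOPs per active parameter per token, so per-token compute tracks active rather than total parameters. The sketch below applies that rule to parameter counts quoted in this article; it ignores long-context attention, hardware efficiency, and everything else that separates FLOPs from watt-hours.

```python
# Rule of thumb: forward-pass compute ~ 2 FLOPs per active parameter per token.
# This approximates compute only; it is not a measurement of energy.
MODELS = {
    # name: (total parameters, active parameters per token), in billions
    "GPT-3 (dense)": (175, 175),
    "DeepSeek-V3 (MoE)": (671, 37),
}


def flops_per_token(active_params_b: float) -> float:
    """Approximate forward-pass FLOPs per generated token."""
    return 2 * active_params_b * 1e9


for name, (total_b, active_b) in MODELS.items():
    share = active_b / total_b
    print(f"{name}: {share:.1%} of weights active, "
          f"~{flops_per_token(active_b):.1e} FLOPs/token")
```

By this crude measure, DeepSeek-V3 does under a quarter of GPT-3’s per-token compute despite having nearly four times as many total parameters.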

How Does ChatGPT Compare to Other Tools?

At this point, you should know that how much energy ChatGPT uses depends on the version you’re interacting with, the length of your prompts, and the mechanics under the hood.

Compared to the larger universe of AI tools, ChatGPT’s energy demands are about average for a high-end model.

But when it comes to sheer market share and frequency of use, even “average” translates into a massive energy footprint at scale.

Energy use vs Google Search

Back in 2009, Google estimated the energy cost of a single search query to be 0.3 Wh.

Fast-forward to 2024, and that estimate has become dusty.

It turns out that today’s Google searches actually use about 10x less energy than those early estimates.

Meanwhile, earlier energy estimates for an average LLM request, including ChatGPT’s, were also about 10x too high (2.9 Wh).

With the latest research putting a Google search at 0.04 Wh and a ChatGPT query at 0.3 Wh, these two mistakes cancel each other out. The old line that “LLMs use about 10x more energy per query than Google Search” still roughly holds (0.3 / 0.04 = 7.5x)… but only because everyone was wrong in just the right way.
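The “errors cancel out” point is easy to check with the figures in this section: the old pairing (2.9 Wh vs 0.3 Wh) and the new one (0.3 Wh vs 0.04 Wh) give similar ratios.

```python
# Ratio of LLM energy per request to Google energy per search, old vs. new estimates.
OLD = {"llm_wh": 2.9, "google_wh": 0.3}   # early LLM estimate vs. Google's 2009 figure
NEW = {"llm_wh": 0.3, "google_wh": 0.04}  # recent estimates cited in this section


def ratio(estimates: dict[str, float]) -> float:
    """How many Google searches' worth of energy one LLM request uses."""
    return estimates["llm_wh"] / estimates["google_wh"]


print(f"Old estimates: {ratio(OLD):.1f}x")  # 9.7x
print(f"New estimates: {ratio(NEW):.1f}x")  # 7.5x
```

Both numerators and both denominators dropped by roughly the same factor, so the ratio barely moved.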

What Is OpenAI Doing to Reduce Impact?

OpenAI is well aware that training and running models like ChatGPT consumes a great deal of energy.

Which brings us to the question: what’s being done about it?

First off, OpenAI has been pushing efficiency upgrades.

Over time, newer models like GPT-4o and, more recently, GPT-4.1 have been optimized to be faster and cheaper to run, which generally means lower energy consumption during inference.

Advances in model architecture, token handling, and server-level hardware mean that the energy a ChatGPT query uses today is already far lower than it would have been even a year ago for the same task.

OpenAI also partners with Microsoft Azure, and Microsoft has committed to powering its data centers with 100% renewable energy by 2025.

That’s important because when you send queries to ChatGPT, you’re pinging the Azure supercomputers we talked about earlier.

Shifting the energy source away from fossil fuels to renewables doesn’t directly shrink the energy (in watt-hours) a query uses, but it radically lowers the carbon footprint attached to it.
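Here’s a sketch of why the energy source matters: emissions per query are the query’s energy multiplied by the carbon intensity of the electricity behind it. The two intensities below are hypothetical round numbers for a fossil-heavy grid and a mostly renewable supply, not figures for any real Azure data center.

```python
# Emissions per query = energy per query x grid carbon intensity.
# The intensities are hypothetical round numbers, not measured Azure values.
QUERY_WH = 0.3

GRID_G_PER_KWH = {
    "fossil-heavy grid (hypothetical)": 700,
    "mostly renewable supply (hypothetical)": 30,
}


def grams_co2_per_query(wh: float, g_per_kwh: float) -> float:
    """Convert Wh to kWh, then multiply by grams of CO2 per kWh."""
    return wh / 1_000 * g_per_kwh


for label, intensity in GRID_G_PER_KWH.items():
    print(f"{label}: {grams_co2_per_query(QUERY_WH, intensity):.3f} g CO2 per query")
```

The watt-hours are identical in both rows; only the emissions change, which is the distinction drawn above.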

Beyond infrastructure, there’s some more future-facing stuff happening. OpenAI is actively researching ways to make models smaller without sacrificing performance.

Model compression, distillation, and smarter routing (like dynamic token processing) are all very live topics in AI efficiency circles.

What Can You Do as a User?

While OpenAI handles the big structural shifts, users still have a role to play in minimizing waste and using ChatGPT responsibly.

Here’s what you can do:

- Be concise: Frame your prompts clearly and tightly. Every extra token processed costs a bit of energy.

- Avoid spamming prompts: Resist the temptation to submit 15 slightly reworded versions of the same question.

- Use appropriate models: When possible, pick lighter, cheaper models (like GPT-4o-mini, if offered) for casual or lightweight tasks.

- Batch your requests: Instead of a bunch of fragmented questions, consolidate them into a single, well-thought-out prompt.
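Why does batching help? If each separate prompt has to restate the same background, the model processes that shared context again every time. The sketch below counts input tokens under that assumption; the helper names and token counts are hypothetical, chosen only for illustration.

```python
# Input tokens processed when the same background is re-sent with every question
# versus sent once alongside all questions. Token counts are hypothetical.
def tokens_separate(context: int, questions: list[int]) -> int:
    """Each question is a fresh prompt that repeats the shared context."""
    return sum(context + q for q in questions)


def tokens_batched(context: int, questions: list[int]) -> int:
    """One prompt: the shared context once, followed by every question."""
    return context + sum(questions)


CONTEXT_TOKENS = 800                # hypothetical: pasted document or background
QUESTION_TOKENS = [40, 60, 30, 50]  # hypothetical: four short follow-ups

print("Separate prompts:", tokens_separate(CONTEXT_TOKENS, QUESTION_TOKENS))   # 3380
print("One batched prompt:", tokens_batched(CONTEXT_TOKENS, QUESTION_TOKENS))  # 980
```

This only counts input tokens; the answers themselves cost roughly the same either way, so the savings come from not repeating the background.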

If you want to cut down on endless re-prompts and re-generations, it’s smart to use dedicated tools that generate cleaner, publish-ready content from the first few tries.

This is where Undetectable AI tools like Stealth Writer or Paraphraser come in.

Instead of asking ChatGPT for that perfect version of your text through multiple edits and retries (each costing more energy), you can simply use our specialized tools built for precision.

Tools designed for specific actions generally perform more efficiently, and all our tools fit right into this model of smart, energy-conscious usage.

In other words, cleaner output in fewer tries = less energy burned = happier servers = you saving the world, one crisp paragraph at a time.

FAQs: ChatGPT and Energy Use

How much energy does one ChatGPT request use?

A typical ChatGPT query, especially when using GPT-4o, consumes about 0.3 watt-hours.

This is based on newer and more accurate estimates that reflect improvements in hardware efficiency and model architecture.

Earlier estimates were about 3 watt-hours per query, but those numbers were based on older technology and assumptions.

So the energy one ChatGPT request uses today is much lower than it used to be.

Is training more energy-intensive than using it?

Yes, absolutely. Training a model like ChatGPT uses a massive amount of energy, far more than running it for day-to-day queries.

Training involves processing enormous datasets over weeks or months on thousands of GPUs, which results in a very large upfront carbon footprint.

By contrast, using the model (inference) consumes relatively small amounts of energy per request.

Does OpenAI publish sustainability data?

No, OpenAI does not currently release detailed public sustainability reports or full energy usage statistics.

While they work closely with Microsoft Azure, which has its own sustainability goals and reports, OpenAI itself has not made comprehensive disclosures about the energy consumption or carbon footprint of its models.

Final Thoughts: The Energy Cost of AI

In conclusion, how much energy ChatGPT uses depends heavily on which model you’re accessing and how you use it.

But overall, it’s clear that newer models are getting more efficient, and the industry is making real efforts to reduce the environmental impact.

That said, choosing the right tool for the right task can make a meaningful difference in your digital footprint.

Smarter workflows, fewer retries, and clearer prompts all add up to lower energy use.

And when you combine ChatGPT with purpose-built tools like Undetectable AI, you get the best of both worlds.

You generate clean, polished, and ready-to-publish content with less trial-and-error. That means saving time, compute, and yes, a little bit of the planet too.

If you want to work smarter and greener, sign up for Undetectable AI today.