The term deepfake first popped up on Reddit in 2017, when a user with the same screen name started sharing altered videos that used AI to swap faces, initially in adult content.

Reddit banned the original subreddit in early 2018, but by then the idea had already taken off.

But the roots of this tech go back even further.

In 1997, researchers had already started experimenting with video editing through machine learning.

Their early lip-syncing tool, called Video Rewrite, could make someone appear to say something they hadn’t.

It wasn’t technically a deepfake by today’s standards, but it laid the groundwork for what would come next.

Today’s blog covers how this evolving technology works, where it shows up in everyday life, and the risks and benefits it brings.

Let’s begin by understanding what a deepfake actually is.

Key Takeaways:

- Deepfakes are AI-generated media that make people appear to say or do things they never actually did.

- With free tools and basic skills, almost anyone can now create convincing deepfake videos, images, or audio clips.

- While deepfakes can be creative or entertaining, they also pose serious risks like scams, defamation, and misinformation.

- You can spot most deepfakes by using detection tools or watching closely for visual and behavioral inconsistencies.

What Is a Deepfake?

A deepfake is fake media, usually a video, audio clip, or image, that’s been altered using artificial intelligence to make someone look or sound like they’re doing or saying something they never actually did.

Now, don’t mistake this for just a bad Photoshop job or a sketchy voiceover.

We’re talking high-level manipulation using a technology called deep learning, which is a subset of AI.

That’s what the term “deepfake” implies: deep learning and fake content.

The goal of a deepfake is usually to create something that looks real enough to fool people.

Maybe you’ve seen those viral videos of celebrities doing bizarre things or politicians making outrageous statements they’d never actually say.

Those are classic deepfake use cases. What you’re seeing isn’t real, but the tech behind it has gotten good enough that your brain doesn’t immediately pick up on the fakery, unless you’re really looking.

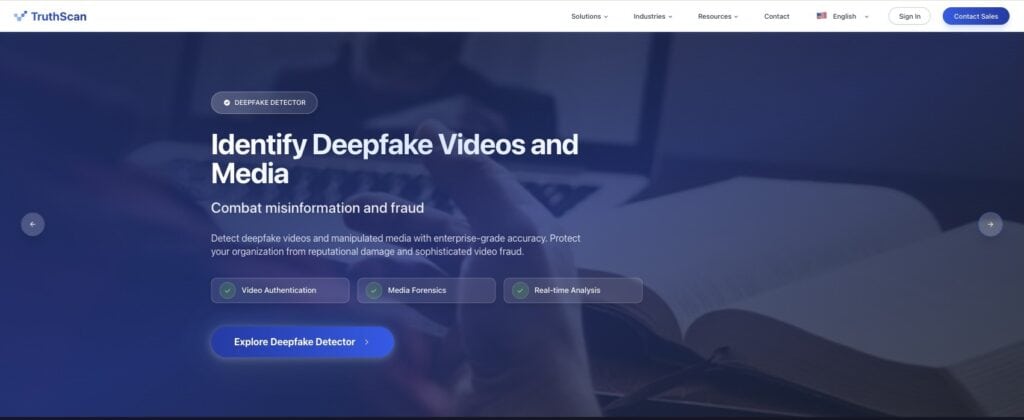

That’s where tools like TruthScan step in.

TruthScan is built for organizations that need to verify media at scale—companies, newsrooms, universities, and public agencies. It helps teams spot manipulation before false content damages trust or reputation.

In fact, according to Undetectable AI’s research team, 85% of Americans say deepfakes have eroded their trust in online information.

Now that you know what a deepfake is, let’s look at how people make them.

How Deepfakes Are Made

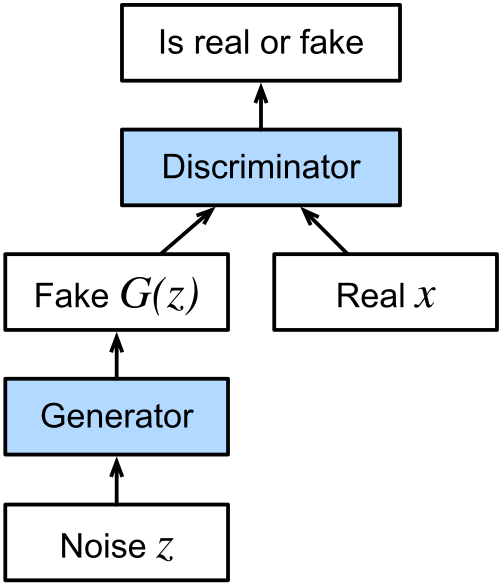

One of the key technologies behind deepfakes is a category of machine learning models called generative adversarial networks, or GANs.

GANs are made up of two parts: a generator and a discriminator.

The generator creates fake media based on what it’s been trained to replicate, and the discriminator’s job is to call out what looks fake.

They keep going back and forth until the generator gets better at making fake content that can pass the discriminator’s test.

Over time, this back-and-forth leads to media that looks shockingly realistic.
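
To make the generator/discriminator loop concrete, here is a minimal sketch in plain Python. It is a toy, not how production deepfake models are built: the “real data” is just numbers drawn from a normal distribution with mean 4, the generator is a hypothetical linear function `a * z + b` of random noise, and the discriminator is a one-feature logistic regression. The function names, learning rate, and step count are illustrative assumptions; real deepfake GANs use deep convolutional networks on images.

```python
import math
import random


def sigmoid(s: float) -> float:
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=4.0, real_std=1.0, steps=3000, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b, with noise z ~ N(0, 1)
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c), "probability x is real"

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # 1) Discriminator turn: minimise -log D(real) - log(1 - D(fake)),
        #    i.e. learn to score real samples high and generated ones low.
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (d - 1.0) * x
            grad_c += d - 1.0
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w += d * x
            grad_c += d
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # 2) Generator turn: minimise -log D(fake) against the updated
        #    discriminator, i.e. nudge a and b so fakes get scored as real.
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (d - 1.0) * w * z
            grad_b += (d - 1.0) * w
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch

    return a, b
```

Calling `train_toy_gan()` returns the learned `(a, b)`. Because this discriminator is linear, it can only compare averages, so the generator’s offset `b` should drift toward the real mean of 4 while the spread `a` is not reliably learned. Giving the discriminator far more capacity is what lets real GANs push generators to match fine detail such as skin texture and lighting.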

The Deepfake Video Generation Process

When building a deepfake video, the system analyzes footage of the person from multiple angles, studying how they talk, how their face moves, and how their body shifts.

All of that information gets fed into the generator so it can create content that mimics those behaviors.

Then, the discriminator helps fine-tune it by pointing out what’s off until the end result looks just right.

That’s how the illusion holds up so well in motion.

There are a few different techniques used depending on the purpose of the deepfake video.

If the idea is to make someone appear to say or do something in a video they never actually said or did, then we’re dealing with what’s called a source video deepfake.

A deepfake autoencoder, made up of an encoder and a decoder, studies footage of the target to learn their expressions, gestures, and subtle head movements, then applies those characteristics onto the original video.
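
The classic face-swap version of this idea pairs one shared encoder with a separate decoder for each person. The sketch below shows only that wiring, under loud assumptions: faces are tiny flattened vectors of 64 numbers, every weight is random and untrained, and the class and method names are hypothetical. Training (not shown) would teach each decoder to rebuild its own person’s face from the shared code; the swap then feeds person A’s frame through person B’s decoder.

```python
import random


def matvec(matrix, vector):
    # Plain matrix-vector product: one output value per matrix row.
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    # Small random weights; a real system would learn these by training.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class ToyFaceSwapAutoencoder:
    """Shared encoder plus one decoder per identity (hypothetical, untrained)."""

    def __init__(self, pixels=64, latent=8, seed=0):
        rng = random.Random(seed)
        # One encoder for both people, so its compact code has to capture
        # what their faces share: pose, expression, lighting.
        self.encoder = random_matrix(latent, pixels, rng)
        # One decoder per person; during training, decoder "A" would only
        # see A's faces and decoder "B" would only see B's faces.
        self.decoders = {
            "A": random_matrix(pixels, latent, rng),
            "B": random_matrix(pixels, latent, rng),
        }

    def encode(self, face):
        return matvec(self.encoder, face)

    def reconstruct(self, face, identity):
        # Training goal (not shown): reconstruct(face_of_X, "X") close to face_of_X.
        return matvec(self.decoders[identity], self.encode(face))

    def swap(self, face_of_a):
        # Keep A's pose and expression code, but render it with B's decoder.
        return self.reconstruct(face_of_a, "B")
```

For example, `ToyFaceSwapAutoencoder().swap([0.5] * 64)` returns a 64-value “face” built by B’s decoder from A’s frame. Sharing the encoder is the key design choice: it forces both identities into the same expression space, which is what makes the decoder swap meaningful once trained.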

Audio Deepfakes

An audio deepfake works by cloning someone’s voice, often with GANs or related deep learning models, based on recordings of how they naturally speak.

Once trained, the model can generate new speech in that voice, even if the person never actually said those words.

Another layer often added is lip syncing.

Here, the system maps generated or pre-recorded audio onto a video.

The goal is to make the lips in the video match the words in the audio.
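
A simplified way to picture that mapping: split the audio into timed speech sounds (phonemes), then pick a target mouth shape (a “viseme”) for every video frame. The sketch below is a hypothetical, rule-based stand-in; the lookup table is heavily simplified, the timed phonemes are assumed to come from a forced aligner, and real lip-sync models learn the audio-to-mouth mapping from footage with neural networks rather than a fixed table.

```python
# Hypothetical, heavily simplified phoneme -> mouth-shape ("viseme") table.
PHONEME_TO_VISEME = {
    "M": "closed", "B": "closed", "P": "closed",
    "F": "lip-teeth", "V": "lip-teeth",
    "AA": "open", "AE": "open",
    "OW": "rounded", "UW": "rounded",
    "SIL": "rest",
}


def visemes_per_frame(phonemes, fps=25):
    """Turn timed phonemes into one target mouth shape per video frame.

    phonemes: list of (symbol, start_sec, end_sec) tuples, e.g. from a
    forced aligner run on the cloned or recorded audio track.
    """
    if not phonemes:
        return []
    duration = max(end for _, _, end in phonemes)
    n_frames = round(duration * fps)
    frames = []
    for i in range(n_frames):
        t = (i + 0.5) / fps  # sample the middle of each frame
        shape = "rest"       # default when no phoneme covers this instant
        for symbol, start, end in phonemes:
            if start <= t < end:
                shape = PHONEME_TO_VISEME.get(symbol, "neutral")
                break
        frames.append(shape)
    return frames
```

For a short “ma” followed by silence, `visemes_per_frame([("M", 0.0, 0.08), ("AA", 0.08, 0.24), ("SIL", 0.24, 0.32)])` yields two closed-mouth frames, four open-mouth frames, and two resting frames at 25 fps. A generator would then render each frame’s mouth region to match its target shape.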

Real-World Examples of Deepfakes

Deepfakes are showing up everywhere these days.

Here are some well-known deepfakes that show how far the technology has come.

The Deepfake of President Zelenskyy Asking Ukrainian Troops to Surrender

In March 2022, during the early stages of the Russia-Ukraine war, a video surfaced online showing Ukrainian President Volodymyr Zelenskyy urging his soldiers to surrender.

It looked like a national address, complete with Zelenskyy’s voice and mannerisms.

The video spread across social media platforms and was even posted on a compromised Ukrainian news website.

The president acted fast and released an official statement through his verified channels to debunk the footage.

The Viral Deepfake Image of Pope Francis in a Designer Puffer Jacket

Sometimes, a deepfake image doesn’t need to be malicious to cause confusion.

In 2023, a viral photo of Pope Francis walking around in a stylish white Balenciaga puffer jacket took the internet by storm.

It racked up tens of millions of views and was widely shared across platforms.

The image was created by an anonymous user in Chicago with Midjourney, an AI image generator.

Elon Musk Deepfakes Used in Online Scams

In 2024, deepfakes featuring Elon Musk became a central part of several large-scale online scams.

AI-generated videos of Musk started popping up on Facebook, TikTok, and other platforms, promoting fake cryptocurrency giveaways and investment schemes.

These clips looked and sounded remarkably real.

The damage wasn’t just theoretical. An elderly retiree reportedly lost nearly $700,000 after being convinced by one of these videos.

The Joe Biden Deepfake Robocall That Targeted U.S. Voters

In early 2024, just before the New Hampshire primary, voters received robocalls that sounded exactly like U.S. President Joe Biden.

In the call, a cloned voice imitating Biden urged voters to stay home and “save” their vote for the general election in November. The intention was to confuse and mislead voters into skipping the primary.

The incident triggered calls for regulation. Advocacy groups urged the U.S. Federal Election Commission to step in, but the FEC ultimately declined, citing limitations in its authority.

Meanwhile, the telecom company responsible for distributing the robocall agreed to pay a $1 million fine.

Political Deepfake Use in the 2020 Indian Elections

Not all uses of deepfake technology are harmful or deceptive in intent (more on this later on in the blog).

During the 2020 Delhi Legislative Assembly election, the Bharatiya Janata Party used AI to tailor a campaign ad for different linguistic audiences.

The party took a video of its leader Manoj Tiwari speaking in English and used deepfake lip-syncing to produce a version in Haryanvi, a regional dialect.

While the voiceover came from an actor, the visuals were modified to match the new audio using AI trained on real footage of Tiwari.

Party members saw it as a positive application of the tech that allowed them to connect with voters in a language they understood, even if the candidate didn’t speak it fluently.

How to Verify Deepfakes in the Real World

With deepfakes spreading across politics, entertainment, and everyday communication, knowing how to confirm what’s real has never been more important.

Despite increasingly sophisticated examples, reliable tools exist to counter the threat of deepfake technology.

One of them is Undetectable AI’s AI Video Detector, whose Deepfake Detection is built for exactly this job.

It’s designed to verify whether audio or video content has been artificially generated, using advanced forensic models that analyze frame-by-frame motion, pixel-level consistency, and vocal fingerprinting.

In seconds, users can see if a video has been digitally altered—or if a voice has been cloned by AI.

Just upload the file, and the tool provides a detailed authenticity report with a visual breakdown and confidence score.

Whether it’s a viral clip, a suspicious interview, or a shared recording, Deepfake Detection helps journalists, educators, and everyday users uncover the truth behind what they see and hear.

Are Deepfakes Dangerous? Risks and Concerns

So far, we’ve looked at what a deepfake is, how it’s made, and where it’s already shown up in the real world.

The technology is undeniably impressive, but the risks tied to it are serious and growing fast.

Deepfakes can be weaponized in multiple ways. Here are a few main concerns.

Defamation

When someone’s likeness or voice is used to create fake remarks, statements, or videos, especially those that are offensive or controversial, it can ruin reputations almost instantly.

And unlike older hoaxes or fake quotes, a convincing deepfake leaves little room for doubt in the viewer’s mind.

In this regard, deepfakes can stir outrage, destroy relationships, or simply push a damaging narrative.

What’s especially concerning is that the deepfake doesn’t even need to be perfect.

As long as the person is recognizable, and the content is believable enough, it can leave a lasting impact on public opinion.

Credibility of Information

Another major concern is how deepfakes undermine the very idea of truth.

As deepfakes become more common, it’s getting harder to know whether what we’re seeing or hearing is real. Over time, this could lead to a broader erosion of trust in any form of digital communication.

This crisis of credibility goes beyond individual incidents.

In democratic societies, people rely on shared facts to make decisions, debate issues, and solve collective problems.

But if voters, viewers, or citizens begin to question everything, it becomes much easier to manipulate public opinion or to dismiss inconvenient truths as “just another deepfake.”

Blackmail

AI-generated media can be used to falsely incriminate individuals by making it seem like they’ve done something illegal, unethical, or embarrassing.

This kind of manufactured evidence can then be used to threaten or control them.

And it cuts both ways. Because deepfakes are now so realistic, someone facing real blackmail might claim the evidence is fake, even if it’s not.

This is sometimes referred to as blackmail inflation, where the sheer volume of believable fakes ends up reducing the value of actual incriminating material.

The credibility of real evidence gets lost in the fog, and that only adds another layer of complexity when trying to expose wrongdoing.

Fraud and Scams

Using AI-generated videos or voices of trusted public figures, fraudsters are creating incredibly convincing schemes.

In some cases, deepfakes of celebrities like Elon Musk, Tom Hanks, or Oprah Winfrey are used to endorse products or services they’ve never heard of.

These videos are then pushed across social platforms, where they reach millions.

Even private individuals are at risk, especially in spear-phishing campaigns that target specific people with personalized content designed to manipulate or deceive.

According to a 2024 report by Forbes, deepfake-driven fraud has already racked up an estimated $12 billion in global losses, and that figure is expected to more than triple in the next few years.

That’s why detection isn’t just for individuals. It’s essential for companies that handle sensitive data, communications, or public content. TruthScan helps these organizations identify altered videos before misinformation spreads.

Positive and Creative Uses of Deepfakes

It’s worth noting that not every application of the technology is negative.

While many blogs explaining what a deepfake is focus on misuse, there’s a growing list of creative and productive ways deepfakes are being used.

Still, for businesses, even artistic or marketing uses of deepfakes should be checked for authenticity before release.

Tools like TruthScan can confirm that no unintended manipulation remains in the final version.

Below are some notable examples.

Film and Acting Performances

Studios are starting to rely on deepfake technology for things like:

- Enhancing visual effects

- Reducing production costs

- Bringing back characters who are no longer around

Disney, for instance, has been refining high-resolution deepfake models that allow for face-swapping and de-aging actors with impressive realism.

Their technology operates at a resolution of 1024 × 1024 pixels and can accurately track facial expressions to make characters look younger or more expressive.

Beyond Hollywood, deepfakes have enabled global campaign work, such as when David Beckham was digitally cloned to deliver a health message in multiple languages.

Art

In 2018, multimedia artist Joseph Ayerle used deepfake technology to create an AI actor that blended the face of Italian movie star Ornella Muti with the body of Kendall Jenner.

The result was a surreal exploration of generational identity and artistic provocation, part of a video artwork titled Un’emozione per sempre 2.0.

Deepfakes have also shown up in satire and parody.

The 2020 web series Sassy Justice, created by South Park creators Trey Parker and Matt Stone, is a prime example.

It used deepfaked public figures to poke fun at current events while raising awareness of the technology itself.

Customer Service

Outside of the creative industries, businesses are finding utility in deepfakes for customer-facing services.

Some call centers now use synthetic voices powered by deepfake technology to automate basic requests, such as account inquiries or complaint logging.

In these instances, the intent isn’t malicious; it’s simply to streamline routine work.

Caller response systems can be personalized using AI-generated voices to make automated services sound more natural and engaging.

Since the tasks handled are usually low-risk and repetitive, deepfakes in this context help reduce costs and free up human agents for more complex issues.

Education

Education platforms have also begun incorporating deepfake-powered tutors to assist learners in more interactive ways.

These AI-driven tutors can deliver lessons using synthetic voices and personalized guidance.

Deepfake Detection Tools and Techniques

As deepfakes become more advanced and accessible, so does the need to identify them before they cause harm.

People and organizations need the right tools and techniques to stay one step ahead. Here are a few of them.

For image-focused verification, TruthScan’s AI Image Detector analyzes lighting, pixel patterns, and composited elements to flag AI-generated or edited visuals in real time.

It helps teams confirm authenticity quickly across images and video frames before content is published or shared.

TruthScan: Enterprise-Grade Deepfake Detection

TruthScan is designed for businesses, universities, and media organizations that need large-scale verification of visual and audio content.

It analyzes video authenticity across multiple dimensions:

- Face Swap Detection: Identifies manipulated facial features frame by frame.

- Behavioral Fingerprinting: Detects subtle expression patterns and micro-movements inconsistent with natural human behavior.

- Video Forensics: Examines frame compression, pixel noise, and voice tampering.

- Real-Time Detection: Enables live verification during video calls, press releases, or broadcast streams.

With TruthScan, teams can authenticate videos before publication or public distribution, preventing reputational and financial damage.

For everyday users, Undetectable AI’s tools offer similar protection at the individual level.

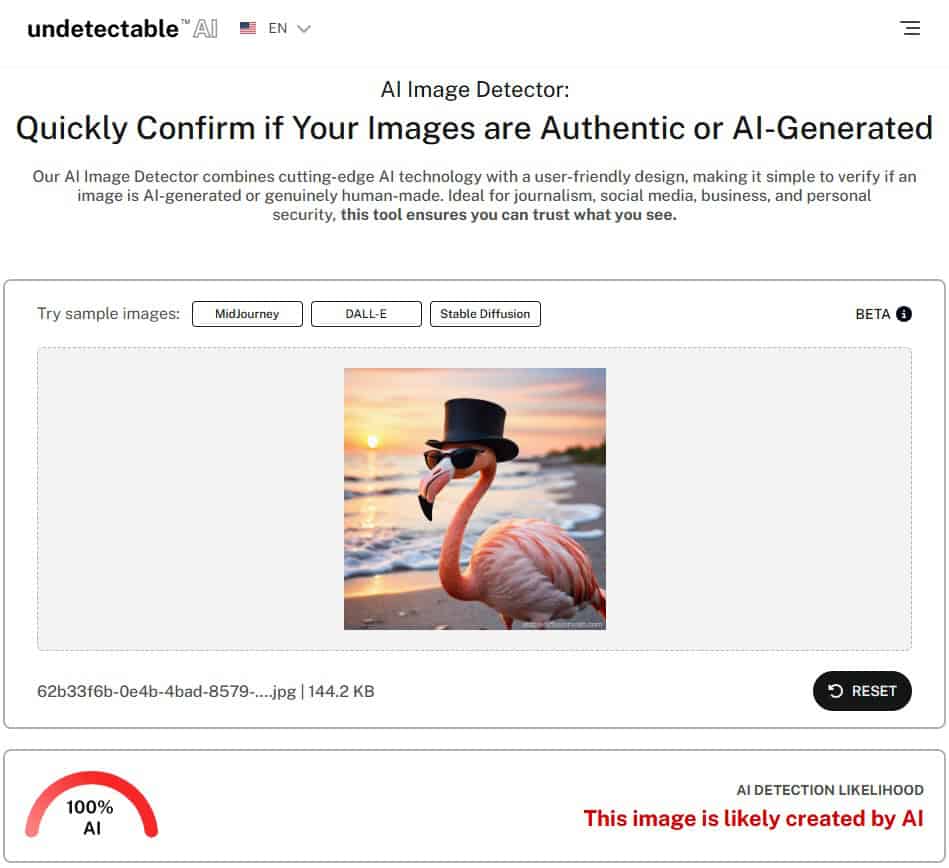

AI Image Detector by Undetectable AI

Undetectable AI’s AI Image Detector makes deepfake detection easy, even for those without a technical background.

The detector works by analyzing various elements in an uploaded image, such as color patterns, textures, facial features, and structural inconsistencies.

It supports detection for media created with the best-known AI image generators, such as:

- Midjourney

- DALL·E

- Stable Diffusion

- Ideogram

- GAN-based models

To use it, simply upload an image, let the tool analyze it, and receive a clear verdict with a confidence score.

If you’re unsure whether an image you’ve come across is genuine, try our AI Image Detector to check for any signs of AI tampering.

Now, let’s put Undetectable AI’s AI Image Detector to the test and see whether it can tell that an image is AI-generated.

As you can see, the image AI detector by Undetectable AI has flagged this image as 100% AI-generated.

Visual and Behavioral Techniques for Manual Detection

There are also practical techniques individuals can use to detect deepfakes manually, especially in situations where immediate analysis is needed.

Some visual red flags include:

- Awkward facial positioning or odd expressions.

- Inconsistent lighting or coloring across different parts of the image or video.

- Flickering around the face or hairline, particularly during movement.

- Lack of natural blinking or irregular eye motion.

- Lip movements that don’t match the audio.
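
The blinking cue can also be measured instead of eyeballed. A common research approach is the eye aspect ratio (EAR): the average of two vertical eyelid distances divided by the eye’s width, computed from six landmarks around the eye, which drops sharply when the eye closes. The sketch below assumes those landmarks come from a face-landmark detector such as dlib or MediaPipe (not shown), and its 0.2 threshold and two-frame minimum are illustrative guesses rather than calibrated values.

```python
import math


def eye_aspect_ratio(p):
    """p: six (x, y) eye landmarks, ordered p1..p6 (corners are p1 and p4)."""
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])  # |p2-p6| + |p3-p5|
    horizontal = math.dist(p[0], p[3])                         # |p1-p4|
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    # A blink = at least `min_frames` consecutive frames with EAR below threshold.
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended mid-blink
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / minutes if minutes > 0 else 0.0
```

A clip whose `blinks_per_minute` stays near zero over a long stretch is a prompt for closer inspection, not proof of a fake; newer deepfake models often reproduce blinking convincingly.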

From a behavioral angle, deepfakes often struggle to mimic subtle human traits. Pay attention to body language, emotional expressions, and habitual gestures.

And in real-time conversations, especially live videos, ask for a side profile view.

Many deepfake models still struggle to accurately render a 90-degree facial angle or complex movements like turning the head while maintaining natural expressions.

Spotting Deepfakes in Text and Context

Deepfakes aren’t limited to visuals. Some versions involve synthetic text, voice, or behavior that mimics someone’s communication style.

When analyzing textual content or dialogue, keep an eye out for:

- Misspellings and odd grammar.

- Sentences that feel forced or don’t flow naturally.

- Unusual email addresses or inconsistent phrasing.

- Messages that feel out of context or unrelated to the situation.
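
The “unusual email addresses” check can be partly automated. Below is a minimal sketch, assuming you keep your own set of trusted sender domains in lowercase: it flags domains that sit a character or two away from a trusted one, such as `examp1e-corp.com` posing as `example-corp.com`. The domain names, the `classify_sender` helper, and the distance threshold of 2 are all hypothetical; real mail filters also check sender authentication records and lookalike Unicode characters.

```python
def edit_distance(a: str, b: str) -> int:
    # Levenshtein distance, keeping only one previous row of the DP table.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def classify_sender(sender: str, trusted_domains, max_distance=2) -> str:
    domain = sender.rsplit("@", 1)[-1].strip().lower()
    if domain in trusted_domains:
        return "trusted"
    if any(edit_distance(domain, t) <= max_distance for t in trusted_domains):
        return "lookalike"  # near-miss spelling: a classic impersonation red flag
    return "unknown"
```

For example, with `trusted = {"example-corp.com"}`, an email from `ceo@examp1e-corp.com` comes back as `"lookalike"`, which is exactly the kind of message worth verifying through a separate channel before acting on it.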

Context also matters. If a video or message appears in a setting that doesn’t make sense, like a politician casually announcing a major decision in a low-quality clip, it’s worth questioning its authenticity.

FAQs About Deepfakes

Are deepfakes illegal?

Deepfakes are not illegal by default, but they can be if they break existing laws such as those covering defamation, child pornography, or non-consensual explicit content.

Some U.S. states have passed laws targeting deepfakes that influence elections or involve revenge porn.

Federal bills like the DEFIANCE Act and NO FAKES Act are also in progress to regulate malicious uses of deepfake technology.

Can anyone make a deepfake?

Yes, almost anyone can make a deepfake using free or low-cost software and AI tools.

Many platforms now offer user-friendly interfaces, so no advanced technical skills are needed to get started.

How can I protect myself from deepfakes?

Limit your exposure by avoiding posting high-res images or videos online. Use privacy settings and detection tools like Undetectable AI or TruthScan to double-check anything suspicious.

Are there deepfake detection apps?

Yes, TruthScan is designed for business users who need to analyze video content at scale, while Undetectable AI’s detectors serve individuals and creators looking to verify smaller volumes of content.

Final Thoughts

Deepfakes are reshaping the way we see truth online. Whether you’re protecting your brand, managing public communications, or just browsing safely, using the right detection tools makes all the difference.

For businesses and institutions, TruthScan provides enterprise-level protection against AI-driven misinformation.

For individuals, Undetectable AI’s AI Image Detector offers a simple, fast way to check images and stay informed.

Together, they help ensure what you see and share stays real.