Generative AI tools have moved deepfakes out of scientific research and into the center of public concern. According to our June 2025 survey, Americans fear synthetic media yet rarely use the tools that could help them detect it.

According to the FBI, criminals use AI deepfake technology in three main ways: AI-generated spam messages, AI-created social media personas, and AI-coded phishing websites.

These schemes aim to exploit both businesses and individual consumers financially. Deepfake-enabled fraud caused more than $200 million in losses during 2025.

Most Americans (85%) say realistic deepfakes have made them less likely to trust online information over the past year, and 81% fear personal harm from fake audio or video. Yet nearly 90% (89.5%) have never used a detection tool. The data points to a widening “trust gap” that calls for prompt action from regulators, platform operators, and technologists.

Key Findings

| Insight | Supporting Data |

|---|---|

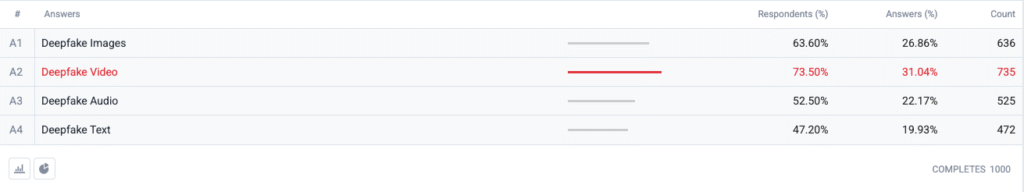

| Deepfake video tops the fear index. | 73.5 % of respondents chose deepfake video as a format they are “most worried about,” followed by images (63.6 %), audio (52.5 %) and text (47.2 %). |

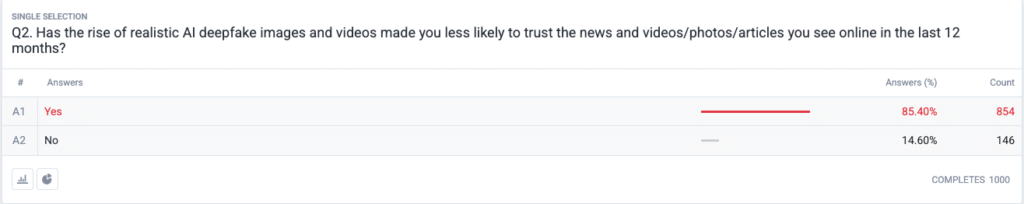

| A full‑blown trust crisis is unfolding. | 85.4 % report they have become less likely to trust news, photos or videos online in the past 12 months due to realistic deepfakes. |

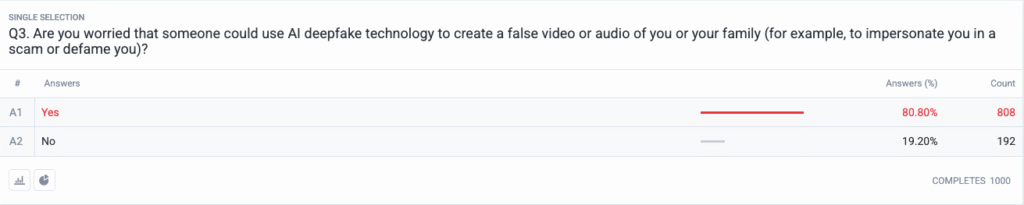

| Personal threat has gone mainstream. | 80.8 % worry that scammers or bad actors could weaponize deepfake content against them or their family. |

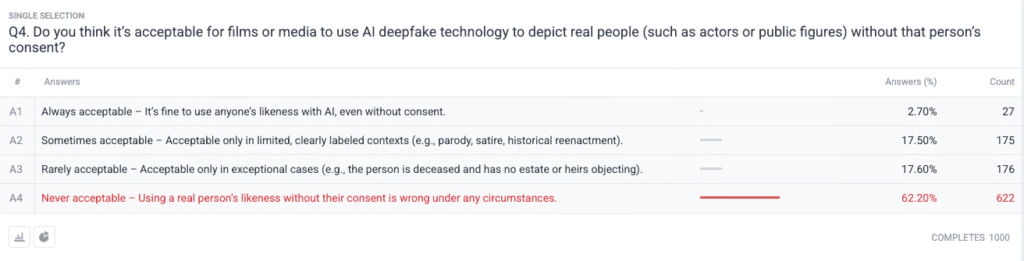

| Strong moral consensus on consent. | 62.2 % say using a real person’s likeness without permission is never acceptable; just 2.7 % say it is always acceptable. |

Methodology

- Sample & Fieldwork. We recruited 1,000 U.S. adults using age, gender and regional quotas aligned with U.S. Census benchmarks. Fieldwork was completed on 18 June 2025.

- Question Design. Five closed‑ended questions gauged worry about different deepfake formats, recent changes in trust, perceived personal risk, ethical views on consent, and prior use of detection software.

- Margin of Error. ±3.1 percentage points at the 95% confidence level.

- Weighting. Post‑stratification weighting corrected minor deviations from national demographics.
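The reported margin of error follows from the standard normal-approximation formula for a proportion, MOE = z·√(p(1−p)/n), evaluated at the most conservative case p = 0.5 with n = 1,000 and z = 1.96. The minimal sketch below reproduces that arithmetic and illustrates the basic idea behind post-stratification: each demographic cell receives a weight equal to its population share divided by its sample share. The function names and the two age cells are hypothetical, chosen only for illustration; they are not the survey's actual cells or data. A real scheme covering age, gender, and region would likely rake across several variables at once, and weighting usually widens the effective margin of error somewhat, which this simple formula ignores.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a
    proportion, in percentage points. p = 0.5 gives the largest
    (most conservative) value; z = 1.96 corresponds to 95% confidence."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


def post_stratification_weights(
    sample_counts: dict[str, int],
    population_shares: dict[str, float],
) -> dict[str, float]:
    """Hypothetical single-variable post-stratification: weight each cell by
    (population share / sample share) so weighted cell totals match the
    population benchmarks while the overall weighted total stays at n."""
    total = sum(sample_counts.values())
    return {
        cell: population_shares[cell] / (count / total)
        for cell, count in sample_counts.items()
    }


if __name__ == "__main__":
    print(f"±{margin_of_error(1000):.1f} points")  # prints ±3.1 points

    # Hypothetical cells and benchmark shares, for illustration only (not survey data).
    weights = post_stratification_weights(
        {"18-44": 520, "45+": 480},
        {"18-44": 0.48, "45+": 0.52},
    )
    print(weights)
```

With these illustrative inputs, the over-represented 18-44 cell is weighted down to about 0.92 and the 45+ cell up to about 1.08, so the weighted totals (480 and 520) match the assumed 48/52 benchmark.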

Questions

Q1: Which form of AI Deepfakes are you most worried about? (Choose all that apply)

Q2: Has the rise of realistic AI deepfake images and videos made you less likely to trust the news and videos/photos/articles you see online in the last 12 months?

Q3: Are you worried that someone could use AI deepfake technology to create a false video or audio of you or your family (for example, to impersonate you in a scam or defame you)?

Q4: Do you think it’s acceptable for films or media to use AI deepfake technology to depict real people (such as actors or public figures) without that person’s consent?

Trend Analysis

- Video Anxiety Outpaces Other Formats

Video was long the most trusted medium: seeing was believing. That status is gone, and synthetic video now tops Americans’ concerns. Respondents worry about deepfake video more than deepfake images by roughly 10 points (73.5% vs. 63.6%), likely because motion, synchronized voice, and realistic appearance make fakes more convincing. Media organizations should apply strict authentication procedures to video content before releasing it to the public.

- Erosion of Information Trust

Some 85% of respondents say they have become less likely to trust online content, a sign of broad skepticism toward digital media regardless of how credible any given item appears. This fits the “cheap speech” thesis: when fabrication is cheap and easy, the burden of verifying what is true shifts to the consumer. Public figures, journalists, and policymakers should prepare for closer scrutiny by investing in transparent verification methods such as cryptographic watermarks and signed metadata.

- Deepfakes Become Personal

Most respondents (80.8%) worry that scammers or bad actors could use deepfakes against them or their families. Deepfakes were once seen mainly as tools of political disinformation aimed at elections and celebrities; they are now also seen as a personal threat. Because AI voice-cloning and face-swap apps are widely available, identity theft and financial scams have become plausible risks for ordinary households, fueling calls for better victim-recourse mechanisms and identity-protection laws.

- Majority Demand for Consent

The survey’s ethics question comes as Hollywood remains divided over synthetic actors and posthumous performances. The public is far less divided: 62.2% of participants said using a real person’s likeness without consent is “never acceptable,” while only 2.7% said it is always acceptable. Policymakers can use this evidence to support laws that require explicit consent before anyone creates an AI-generated likeness and that mandate clear labeling when one is used.

- Readiness Gap and Education Opportunity

Despite widespread concern, 89.5% of respondents have never used a detection tool. Interest outpaces actual usage, which suggests latent demand held back by usability problems, low awareness, or doubts about effectiveness. Undetectable AI and other industry players should simplify onboarding, integrate detection tools into consumer platforms, and develop educational programs that explain AI technology to the public.

Implications for Stakeholders

| Stakeholder | Actionable Takeaway |
|---|---|
| News & Social Platforms | Deploy authenticated-media standards (C2PA, W3C provenance) and surface “origin verified” badges to rebuild audience trust. |
| Entertainment Studios | Institute explicit opt-in contracts for synthetic likenesses and adopt on-screen disclosures when AI recreation is used. |
| Regulators | Advance right-of-publicity and deepfake labeling bills to align with public consent expectations. |
| Businesses & Brands | Proactively monitor brand imagery for manipulations and educate employees on spotting suspicious content. |
| Consumers | Seek reputable detection tools and practice “pause, verify, share” digital hygiene before amplifying media. |

Comments

We collected additional responses from participants about their use of AI deepfake detection tools. Some already use them, while a large share said they were unaware such tools existed but are interested in learning more.

This report is an AI-generated summary of our full findings, which are available upon request. The research was conducted in collaboration with TruthScan, a deepfake detection and anti-AI fraud solution.

Conclusion

Deepfake concerns in 2025 are defined by a stark paradox: soaring public alarm alongside minimal protective action. With foundational trust in decline, organizations face an urgent mandate to verify, label, and authenticate digital content.

Fair Use

Please feel free to use this information in an article or blog post, so long as you provide us with a link and citation. For access to the complete survey data set, don’t hesitate to get in touch with us directly.

For press inquiries or access to full crosstabs, contact [email protected] or [email protected]