As AI tools like ChatGPT become more sophisticated and accessible, a pressing concern has emerged within the education sector:

Can colleges and universities reliably detect ChatGPT-generated content?

In this article, we examine how institutions are responding to the rise of generative AI, which detection methods they are using in 2025, the challenges they face, and how students can use AI responsibly without compromising academic integrity.

Key Takeaways

- Colleges can detect ChatGPT—but not with perfect accuracy. Tools like Turnitin and Copyleaks are commonly used but still produce false positives and negatives.

- Human review and stylometric analysis are key to identifying inconsistencies between a student’s prior work and new submissions.

- Detection relies on linguistic, statistical, and contextual patterns, but advanced AI outputs can often bypass superficial analysis.

- Educators are updating policies and adopting oral exams, real-time writing tasks, and AI-aware assessments to reduce dependence on detection tools alone.

- Ethical AI use is possible, especially when guided by transparency, academic honesty, and tools like Undetectable AI.

Is ChatGPT Detectable in 2025?

ChatGPT and other large language models have improved dramatically in their ability to generate natural, coherent, and human-like text.

While this advancement unlocks powerful educational potential, it also presents new challenges for academic institutions seeking to preserve the integrity of student work.

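Many AI detectors used by colleges, including Turnitin’s AI detection feature, rely on linguistic patterns and statistical signals such as perplexity (how predictable a text is to a language model) and burstiness (how much sentence length and structure vary) to flag AI-generated writing.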
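To make perplexity concrete: it measures how "surprised" a language model is by a piece of text, and lower values mean more predictable wording. The sketch below is a toy illustration under a loud assumption: it scores text with a tiny add-one-smoothed unigram (single-word) model built from a reference sample, whereas commercial detectors such as Turnitin use large trained models whose internals are not public. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def words(text: str) -> list[str]:
    # Lowercase word tokens; a crude stand-in for a real tokenizer.
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`.

    Toy model for illustration only; real detectors use large neural language models.
    """
    tokens = words(text)
    if not tokens:
        raise ValueError("text contains no words")
    ref_counts = Counter(words(reference))
    total = sum(ref_counts.values())
    # Vocabulary covers the reference plus the scored text, so unseen words
    # still receive a small nonzero probability.
    vocab_size = len(set(ref_counts) | set(tokens))
    log_prob = 0.0
    for w in tokens:
        p = (ref_counts[w] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity = exp of the average negative log-probability per word.
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat the cat sat"
print(unigram_perplexity("the cat sat", reference))             # familiar wording: lower
print(unigram_perplexity("quantum flux harmonics", reference))  # unseen wording: higher
```

In this toy, text that reuses the reference’s common words scores low and unfamiliar wording scores high. A low score alone does not prove AI authorship: formulaic human writing is also predictable, which is one source of the false positives covered in the limitations section.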

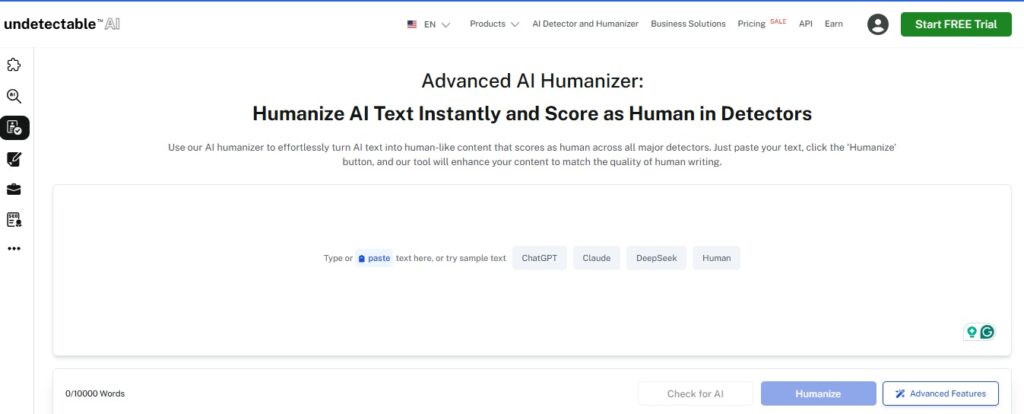

However, these systems are not infallible. Many students edit AI outputs or humanize them using advanced tools to bypass detection.

This arms race between generative AI and AI detectors has left universities walking a tightrope—balancing fairness, academic integrity, and evolving student needs.

Common Detection Methods in Higher Education

1. Stylometry

Stylometry examines a student’s unique writing style across previous submissions—analyzing grammar, punctuation, sentence structure, and vocabulary.

A sharp deviation in tone or complexity can signal AI use. This method is particularly effective when educators have a portfolio of the student’s earlier work for comparison.
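A minimal stylometry sketch, assuming the common approach of comparing how often an author uses short function words ("the", "of", "however"), which tend to be habitual and largely independent of topic. The word list, the function names, and the choice to pool prior essays into one profile are illustrative assumptions, not any specific institution’s method.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately short feature list; real stylometric studies
# use hundreds of features (function words, punctuation, sentence length, etc.).
FUNCTION_WORDS = [
    "the", "a", "an", "and", "but", "or", "of", "to", "in", "on",
    "that", "which", "it", "is", "was", "however", "therefore", "i", "we", "this",
]


def style_profile(text: str) -> list[float]:
    """Relative frequency of each function word in `text`."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    n = len(tokens) or 1  # avoid division by zero on empty text
    return [counts[w] / n for w in FUNCTION_WORDS]


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def style_similarity(prior_essays: list[str], new_essay: str) -> float:
    """Cosine similarity (0 to 1) between the pooled prior profile and a new essay."""
    prior = style_profile(" ".join(prior_essays))
    return cosine(prior, style_profile(new_essay))
```

In practice, an unusually low score compared with a student’s other essays is a reason to look closer or talk with the student, not evidence on its own; writing style naturally shifts with topic, genre, and growing skill.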

2. Statistical Pattern Analysis

AI-generated content often exhibits unnatural consistency in sentence length, formality, or structure.

Detectors like Turnitin scan for anomalies based on known patterns in human writing. However, highly edited AI content may bypass such analysis.
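One simple way to quantify "unnatural consistency in sentence length" is the coefficient of variation: the standard deviation of sentence lengths divided by their mean. Very even rhythm gives a value near zero, while varied prose scores higher. This sketch assumes a naive split on sentence-ending punctuation, and the function names are hypothetical; it is not how Turnitin or any named detector computes its score.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(p.split()) for p in parts if p]


def length_variation(text: str) -> float:
    """Coefficient of variation (stdev / mean) of sentence lengths in words."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        raise ValueError("need at least two sentences")
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "One two three. Four five six. Seven eight nine."
varied = "Short. This one is a much longer sentence indeed."
print(length_variation(uniform))  # perfectly even rhythm
print(length_variation(varied))   # uneven rhythm, higher value
```

A single statistic like this shifts easily with editing, which is why detectors combine many signals and why highly edited text may still slip past them.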

3. Contextual and Semantic Analysis

Instead of just surface-level grammar, contextual analysis evaluates depth, relevance, and coherence.

AI tools may produce content that “sounds right” but lacks insightful analysis or connection to course material.
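Judging depth and coherence still takes a human reader, but one narrow slice of contextual analysis, relevance to course material, can be roughly approximated. The sketch below is a crude keyword check under an explicit assumption: the instructor supplies a hypothetical list of course-specific terms, and the function reports which ones a submission never mentions.

```python
import re


def term_coverage(submission: str, course_terms: list[str]) -> tuple[float, list[str]]:
    """Return (share of course terms mentioned, list of terms never mentioned)."""
    if not course_terms:
        raise ValueError("course_terms must not be empty")
    text = submission.lower()
    missing = [
        term for term in course_terms
        # Whole-word, case-insensitive match of each term or phrase.
        if not re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
    ]
    return 1 - len(missing) / len(course_terms), missing


essay = "Cells break down glucose through glycolysis and then the Krebs cycle."
terms = ["glycolysis", "Krebs cycle", "electron transport chain", "ATP synthase"]
coverage, missing = term_coverage(essay, terms)
print(coverage, missing)  # 0.5 ['electron transport chain', 'ATP synthase']
```

A low coverage score might prompt an instructor to check whether an essay engages with the assigned material; a high score says nothing about whether the analysis is insightful.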

4. Machine Learning Detection

Some institutions use AI to fight AI, training machine learning models on thousands of sample essays to distinguish human-written from machine-generated text.
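To illustrate the "AI to fight AI" idea, here is a minimal text classifier: multinomial naive Bayes over word counts with add-one smoothing. This is a deliberately simple, swapped-in technique; commercial detectors generally rely on far larger models and training sets. The class name, the four training snippets, and the "ai"/"human" labels are invented for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Hypothetical toy detector: multinomial naive Bayes with add-one smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesDetector":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text: str) -> dict[str, float]:
        n_docs = sum(self.doc_counts.values())
        scores = {}
        for c in self.classes:
            # Log prior plus smoothed log-likelihood of each word.
            score = math.log(self.doc_counts[c] / n_docs)
            denom = self.totals[c] + len(self.vocab)
            for w in tokenize(text):
                score += math.log((self.word_counts[c][w] + 1) / denom)
            scores[c] = score
        return scores

    def predict(self, text: str) -> str:
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


texts = [
    "in conclusion it is important to note",
    "furthermore it is essential to consider",
    "honestly i kinda hated this book lol",
    "my roommate and i argued about it all night",
]
labels = ["ai", "ai", "human", "human"]
detector = NaiveBayesDetector().fit(texts, labels)
print(detector.predict("it is important to consider"))  # "ai" on this toy data
```

Classifiers like this learn whatever distinguishes their training data. If that data overrepresents certain writing styles, for example fluent native-English prose, the model can misflag students who write differently.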

5. Human Review

Despite automation, experienced educators remain the most accurate AI detectors, especially when they know their students’ voices.

In fact, a 2025 arXiv study found that seasoned instructors outperformed AI detectors in spotting subtle AI-generated assignments.

Limitations of AI Detection in Academia

While detection systems have improved, several critical limitations remain:

- False positives may wrongfully accuse students, especially those whose writing deviates from the norm (e.g., ESL learners or neurodiverse students).

- Cost and accessibility restrict universities with smaller budgets from acquiring the most advanced AI detection tools.

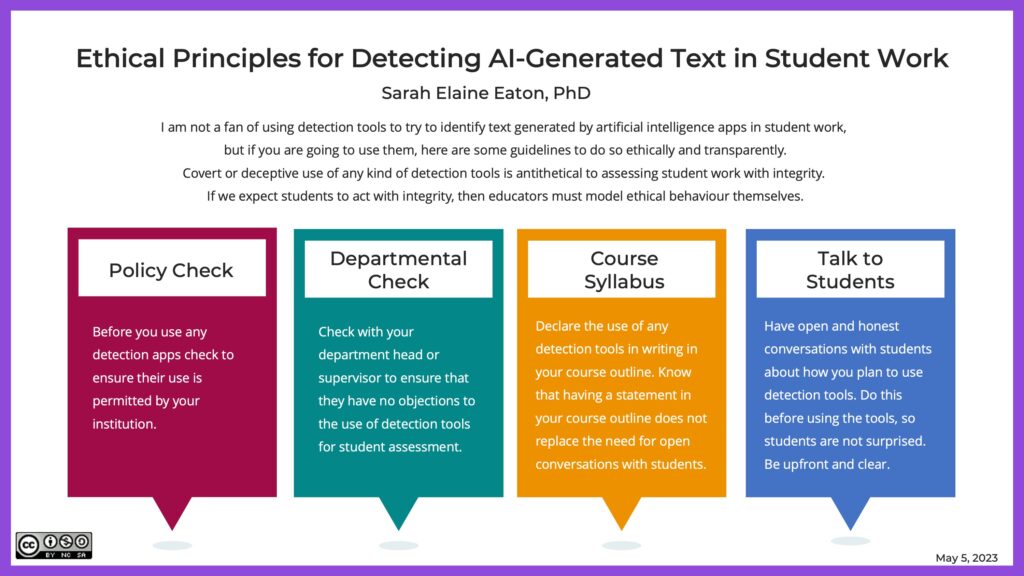

- Ethical concerns emerge when invasive detection practices infringe on student privacy or foster distrust.

- Inequity in AI usage can further widen achievement gaps among students with different access levels to AI literacy and tools.
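A short worked calculation shows why even a small false-positive rate matters at scale. The inputs are hypothetical, chosen only for illustration: 1,000 essays, 10% of them AI-generated, and a detector that catches 90% of AI essays (its sensitivity) while wrongly flagging 2% of human-written ones.

```python
def flag_breakdown(n_essays: int, ai_share: float, sensitivity: float,
                   false_positive_rate: float) -> tuple[float, float]:
    """Return (expected honest essays flagged, share of flags that are correct)."""
    ai_essays = n_essays * ai_share
    human_essays = n_essays - ai_essays
    true_flags = ai_essays * sensitivity              # AI essays correctly flagged
    false_flags = human_essays * false_positive_rate  # human essays wrongly flagged
    precision = true_flags / (true_flags + false_flags)
    return false_flags, precision


# Hypothetical inputs for illustration only.
false_flags, precision = flag_breakdown(1000, 0.10, 0.90, 0.02)
print(round(false_flags), round(precision, 2))  # 18 0.83
```

Under these assumptions, about 18 honest students are flagged, and roughly one flag in six points at someone who did nothing wrong. If fewer students actually use AI, the share of wrongful flags rises.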

These concerns have pushed many institutions to reconsider not just how they detect AI, but how they assess students altogether.

Implications of Using ChatGPT for Students and Colleges

Academic Integrity: AI-generated submissions can obscure a student’s true understanding.

When used unethically, ChatGPT challenges the accuracy of academic evaluation and compromises the value of grades and credentials.

Ethical Dilemmas: From fairness to data privacy, universities are grappling with where to draw the line on AI use. Should AI be banned outright—or integrated thoughtfully?

Long-Term Impact: Overreliance on AI could weaken essential skills such as critical thinking, argumentation, and creativity.

If academic work is widely perceived as AI-assisted, degrees could lose credibility in the eyes of employers and graduate programs.

Ways to Safely Use AI Tools (Featuring Undetectable AI)

AI doesn’t have to be the enemy of academic honesty—when used correctly, it can enhance understanding without replacing original thought.

Here’s how students can use AI safely and transparently:

- Use AI for brainstorming, not writing full essays.

- Cite or disclose AI assistance if it helped generate insights.

- Edit heavily to make the work your own.

- Use humanizing tools to ensure your writing reflects your personal voice.

Recommended Tools from Undetectable AI

- AI Humanizer – Converts robotic AI outputs into human-like writing that aligns with your unique voice.

- AI SEO Content Writer – Ideal for students managing blogs or personal portfolios who need ethically optimized content.

- AI Image Detector – Designed for visual projects and multimedia submissions, helping ensure originality and compliance.

By using Undetectable AI tools responsibly, students can embrace AI without violating academic expectations—and educators can build trust through transparency.

Frequently Asked Questions (FAQs)

Can colleges detect ChatGPT-generated content?

Yes—but not always. Detection tools can flag likely AI usage, but they often miss or falsely identify content. Human review remains essential.

Is using ChatGPT for help cheating?

It depends. Using ChatGPT to brainstorm or clarify a topic may be fine if approved by your instructor. Passing off AI-generated work as your own is widely considered academic dishonesty.

What happens if a student is falsely accused of using AI?

False positives do happen. That’s why institutions are urged to combine AI tools with educator judgment before taking disciplinary action.

Can Undetectable AI tools bypass detection?

Undetectable AI helps humanize content and restore natural flow, but its primary purpose is to ensure clarity and originality—not to cheat detection systems.

Will colleges continue to evolve their AI policies?

Absolutely. Most institutions are actively revising policies, developing new assessments, and exploring how to incorporate AI responsibly into education.

Conclusion

The future of education lies not in avoiding AI—but in learning to use it wisely.

Colleges in 2025 are increasingly adopting detection methods, but true academic integrity comes from student understanding, transparency, and trust.

Whether you’re a student seeking ethical ways to use AI, or an educator adapting to a new learning landscape—Undetectable AI provides the tools to support your journey with integrity.

Explore Undetectable AI and unlock ethical, effective ways to work with AI in today’s classrooms.