Can GPT o1 write content without getting caught by AI detectors?

It’s a fair question.

As you’ll read in this article, GPT o1 is a model trained to handle complex tasks in science, coding, and math.

But hey, whether it can write, and whether you can sneak that writing past AI detectors, are questions worth exploring.

Here’s what you’ll walk away with after reading this article:

- What Is GPT-o1?

- How AI Detectors Work

- Can GPT-o1 Content Be Detected?

- Are OpenAI’s o1-mini and o1-preview Truly Undetectable?

- How to Bypass AI Detectors with GPT-o1

- GPT-o1 vs GPT-4o: Which Is More Detectable?

So let’s start.

What Is GPT-o1?

In September 2024, OpenAI launched GPT-o1-preview, alongside a lighter, more cost-efficient variant called o1-mini.

By December 2024, the preview version was officially replaced by the full GPT-o1 model.

According to OpenAI, GPT-o1 is made to tackle highly complex problems by dedicating more computational “thinking” time before generating responses.


This includes advanced tasks like competitive programming, abstract mathematics, and scientific reasoning, which the model can handle with near-expert finesse.

Benchmark tests back up this expertise.

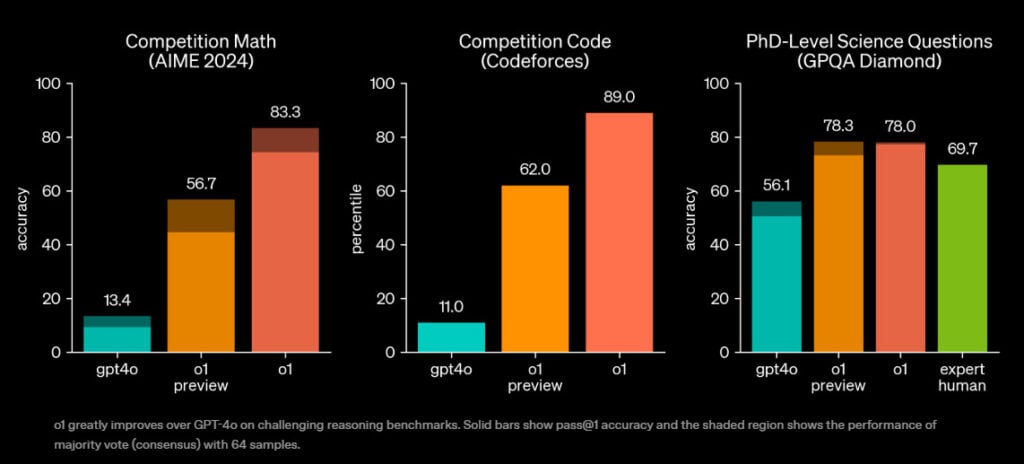

In benchmark testing, o1 ranked in the 89th percentile on Codeforces competitive programming questions.

On the American Invitational Mathematics Examination (AIME), o1 solved 83% of problems (12.5/15). By comparison, GPT-4o managed only 12% (1.8/15).

This model also showed PhD-level proficiency across multiple scientific domains (physics, chemistry, and biology).

So it’s clear that the model was made primarily for research and technical applications.

How It Differs from GPT-3.5, GPT-4 & GPT-4o

Compared with GPT-3.5, GPT-4, and GPT-4o, GPT-o1’s differences range from subtle to stark, depending on the task at hand.

GPT-3.5 was trained on an older dataset, has a limited 16,385-token context window (with a 4,096-token output cap), and offers basic reasoning and decent coding skills.

It does okay with general prompts, but hand it a problem with nested logic or a tricky algorithm, and you’ll quickly see its edges.

Compared with it, GPT o1 operates in a different cognitive class altogether, so the comparison isn’t really fair.

GPT-4 remains solid, more refined than 3.5 and capable across a broader range of tasks, especially those needing nuance. But even it doesn’t dig into technical complexity with the same rigor as o1.

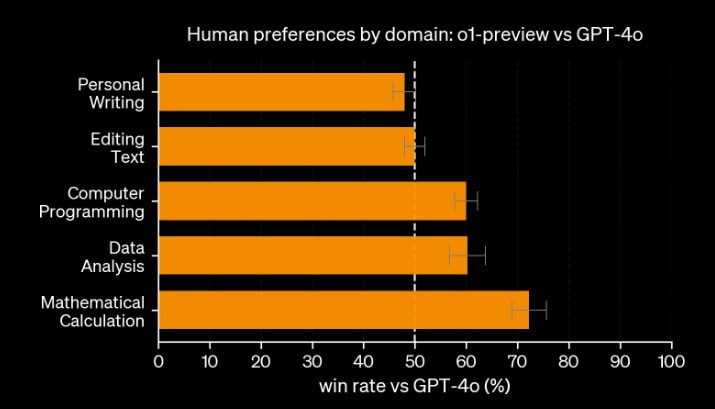

GPT-4o further refined speed and multimodal capabilities but still lagged in deep analytical tasks.

It’s faster, more interactive, and better equipped for general-purpose use. It handles creative writing, chatting, and multimedia tasks better than o1 for now.

But when it comes to logic-heavy use cases, o1 holds a measurable lead.

That said, GPT-o1 lacks some user-friendly features like web browsing or file uploads, which GPT-4o supports.

The model can also be a little terse. Its refusal responses are shorter and sometimes skip over references or deeper explanations that 4o tends to include.

Another major caveat of o1 is that it can amplify risks when addressing dangerous queries.

For example, when asked about rock-climbing techniques, GPT-o1’s in-depth engagement could encourage over-reliance, whereas GPT-4o defaults to generic advice.

[source]

| Feature | GPT-3.5 | GPT-4 | GPT-4o | GPT o1 |
|---|---|---|---|---|
| Training Data | Older dataset | More recent & extensive | Most up-to-date | Most up-to-date |
| Max output tokens | 4,096 | 8,192 | 16,384 | 100,000 |
| Context window (tokens) | 16,385 | 8,192 | 128,000 | 200,000 |
| Accuracy & Coherence | Good | Significantly improved | High | Exceptional |
| Reasoning Abilities | Basic | Advanced | Advanced | PhD-level |
| Coding Skills | Decent | Proficient | Proficient | Near expert-level |
| Creative Writing | Capable | More creative & nuanced | Fast, creative | Possible, but not its focus |
| Response Speed | Fast | Moderate | Fastest | Slower (deliberate) |
| Best For | Casual use | General tasks | Speed + multitasking | Technical depth |

How AI Detectors Work

AI detectors have become increasingly common since the GPT boom.

Their purpose is to figure out whether something was written by a person or spit out by a model like GPT-o1.

They make a guess based on a bunch of nerdy metrics and four major machine learning and NLP concepts.

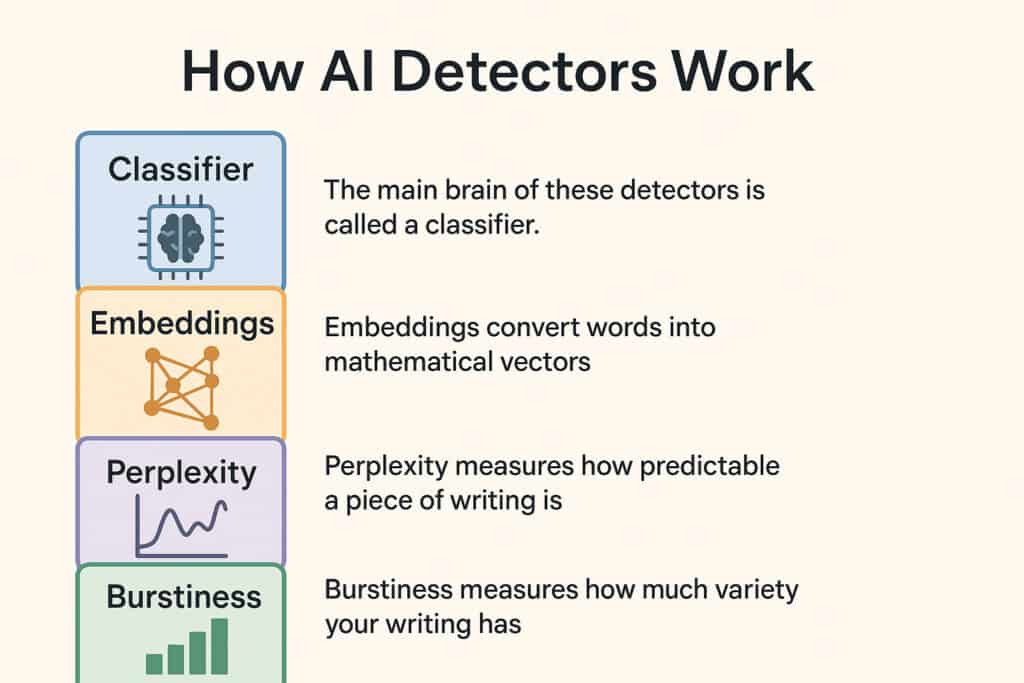

Classifier

The main brain of these detectors is called a classifier.

These classifiers are trained on massive datasets labeled as either AI-generated or human-written, and over time they learn what sets the two apart.

Once the model is trained, it can assess a new chunk of text and decide where it likely belongs on that AI–human spectrum.

It checks how often certain words show up, how long the sentences are, and whether the whole thing sounds too squeaky clean.
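To make the idea concrete, here is a toy classifier sketched in Python. Everything in it is an assumption for illustration, not how Undetectable AI, QuillBot, or any other commercial detector actually works: it uses only two hand-picked features (average sentence length and how much sentence length varies), plain logistic regression, and four made-up training texts. Real detectors learn from massive labeled datasets and far richer features.

```python
import math
import re


def features(text: str) -> list[float]:
    """Toy features: mean sentence length and its spread (population std), in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    mean = sum(lengths) / len(lengths)
    std = math.sqrt(sum((n - mean) ** 2 for n in lengths) / len(lengths))
    # Scale down by 10 so gradient descent stays well behaved.
    return [mean / 10.0, std / 10.0]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(x: list[float], w: list[float], b: float) -> float:
    """Probability (0 to 1) that feature vector x belongs to the 'AI' class."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(samples, labels, lr: float = 0.5, epochs: int = 2000):
    """Logistic regression fit with plain batch gradient descent (label 1 = AI, 0 = human)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, label in zip(samples, labels):
            err = score(x, w, b) - label  # gradient of log-loss w.r.t. the raw score
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical training texts: "AI" samples are uniform, "human" samples vary wildly.
AI_TEXTS = [
    "The model solves problems well. The model explains each step clearly. The model checks every result carefully.",
    "This tool improves writing quality. This tool saves users time. This tool supports many languages.",
]
HUMAN_TEXTS = [
    "Wow. I honestly did not expect the ending of that book to hit me quite so hard, but it did. Anyway.",
    "Rain again. We stayed in, played cards for hours, argued about the rules, and laughed until midnight. Fun.",
]

X = [features(t) for t in AI_TEXTS + HUMAN_TEXTS]
y = [1] * len(AI_TEXTS) + [0] * len(HUMAN_TEXTS)
w, b = train(X, y)

for text in AI_TEXTS + HUMAN_TEXTS:
    print(f"{score(features(text), w, b):.2f}  {text[:40]}...")
```

Notice that the code never states a rule like “uniform sentences mean AI.” It learns weights from labeled examples, which is the same principle a production classifier applies at a much larger scale.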

Embeddings

Since computers can’t grasp language in the way we do, embeddings convert words into mathematical vectors that represent meaning, context, and word relationships.

So when a detector analyzes a sentence, it’s looking at where words live in this multi-dimensional vector space and how their positions relate to patterns seen in either human or AI outputs.

It’s how the system knows that “king” and “queen” are closely related.
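Here is a minimal Python sketch of that idea. The three-dimensional vectors below are invented for illustration (real embedding models produce vectors with hundreds or thousands of dimensions), and cosine similarity is one common way to measure how close two vectors point.

```python
import math

# Hypothetical 3-dimensional "embeddings", made up for this example.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.75, 0.2],
    "banana": [0.1, 0.05, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king_queen = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
king_banana = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["banana"])
print(f"king vs queen:  {king_queen:.3f}")
print(f"king vs banana: {king_banana:.3f}")
```

With these toy vectors, “king” and “queen” score close to 1.0 while “king” and “banana” score far lower, which is the kind of geometric relationship a detector can compare against patterns in human and AI text.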

Perplexity

In AI detection, perplexity is a metric that measures how predictable a piece of writing is to a language model.

AI-generated content tends to have lower perplexity because language models favor high-probability word choices, producing text that flows logically and reads clearly.

Human writing, meanwhile, can be messier. It’s richer in unpredictability, creative leaps, or straight-up weird phrasing.

So, a low perplexity score can be a clue that something came from an AI, but it’s never used in isolation because, well, even humans like to sound obvious sometimes.
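Formally, perplexity is the exponential of the average negative log-probability a language model assigns to each token in the text. The Python sketch below assumes you already have those per-token probabilities (in practice they come from running the text through a language model); the probability values used here are made up.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability per token.

    token_probs: probability the model assigned to each token that actually appeared.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical per-token probabilities, invented for illustration.
predictable = [0.9, 0.8, 0.85, 0.9]  # the model saw each word coming
surprising = [0.2, 0.05, 0.3, 0.1]   # unexpected word choices

print(f"predictable text: {perplexity(predictable):.2f}")
print(f"surprising text:  {perplexity(surprising):.2f}")
```

A handy sanity check: if every token gets probability 0.25, perplexity is exactly 4, as if the model were choosing uniformly among four options at each step.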

Burstiness

Burstiness measures how much variety your writing has, especially in sentence length and structure.

Human authors usually show higher burstiness because we naturally mix short, snappy lines with sprawling, complex ones.

AI tends to play it safe. It doesn’t take weird detours or suddenly go off the rails mid-thought. And that makes it easier to spot.
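Detectors don’t share a single standard formula for burstiness. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence length: the standard deviation divided by the mean. The naive sentence splitter is also an assumption and will trip over abbreviations like “e.g.”

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, or ? and count words per sentence (deliberately naive)."""
    return [len(s.split()) for s in re.split(r"[.!?]+", text) if s.strip()]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool is fast. The tool is cheap. The tool is easy."
varied = "Stop. I spent the whole afternoon chasing a bug that turned out to be a typo. Classic."

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

The uniform sample has three four-word sentences, so its score is zero; the varied sample swings between one-word and fifteen-word sentences and scores much higher.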

Can GPT-o1 Content Be Detected?

ChatGPT o1 isn’t available for free, and it’s no longer part of the regular Plus plan either.

It’s been bumped up to OpenAI’s Pro plan, which costs a steep $200 per month.

Now, even though we don’t have full public access to test GPT o1 ourselves, we can still make an educated guess about its detectability.

OpenAI made it pretty clear that this model was built with STEM in mind.

So based on that, it’s a safe bet that the model’s ability to mimic human writing would be pretty average, if not outright bad.

Thanks to a couple of YouTube channels that posted sample outputs from ChatGPT o1 models (specifically o1-mini and o1-preview), we were able to run those texts through popular detection tools and see what kind of red flags popped up.

Are OpenAI’s o1-mini and o1-preview Truly Undetectable?

We pulled two text samples straight from YouTube videos that ran prompts through o1-preview and o1-mini.

Let’s see if their outputs can actually slip past AI detectors without raising alarms.

Is o1-preview Detectable?

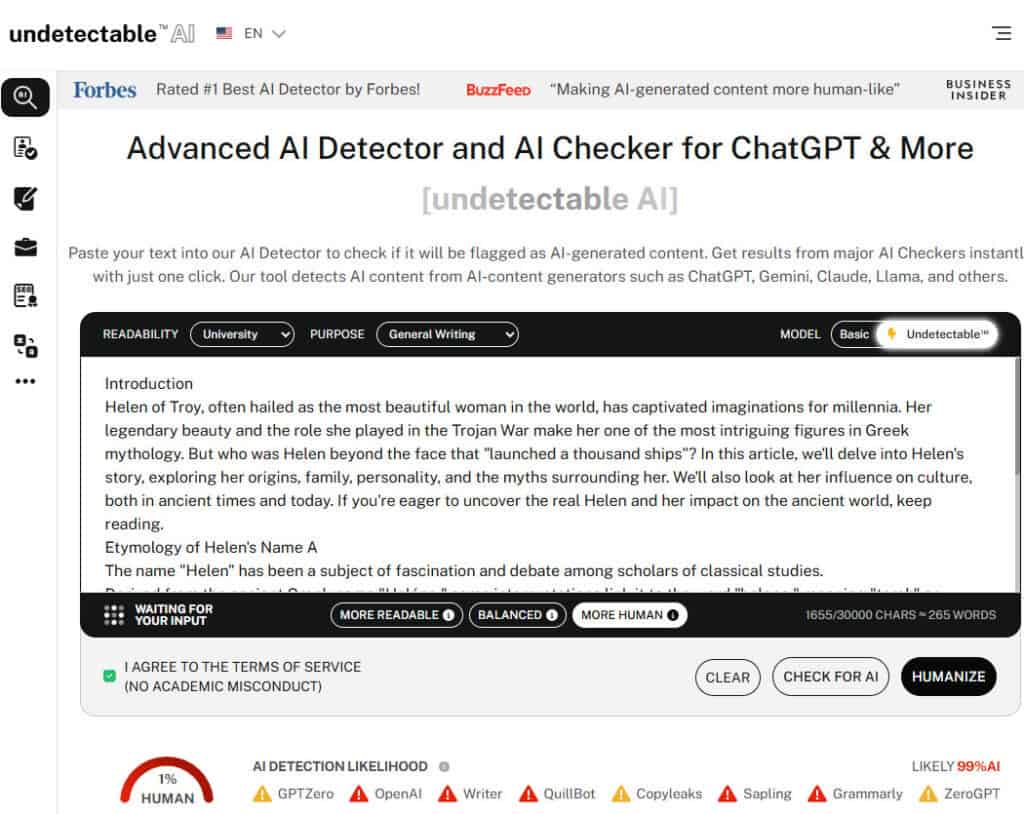

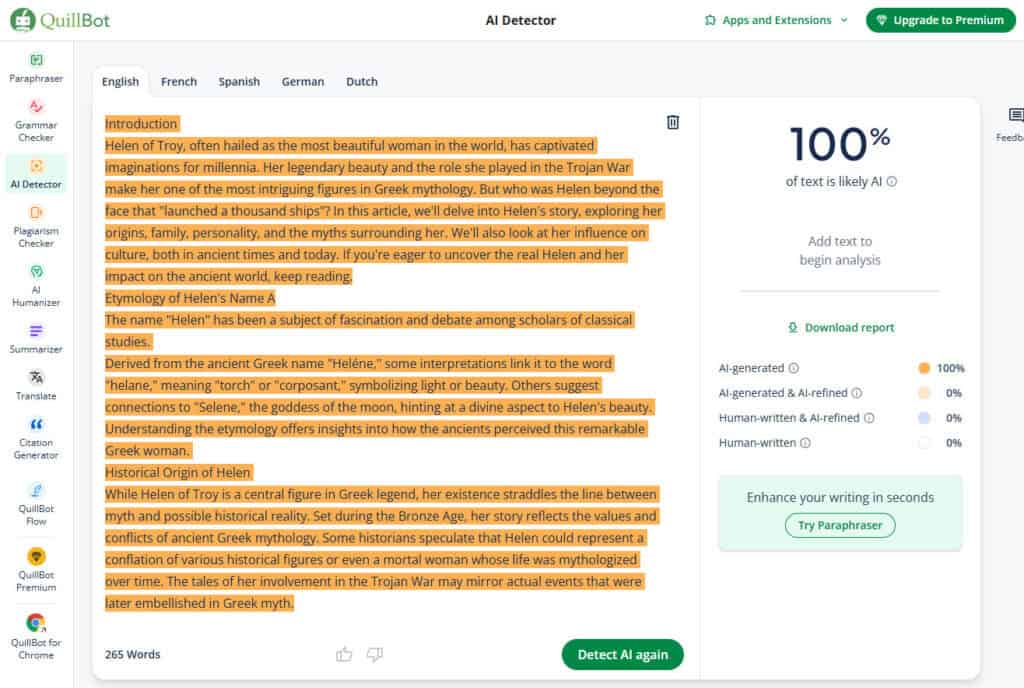

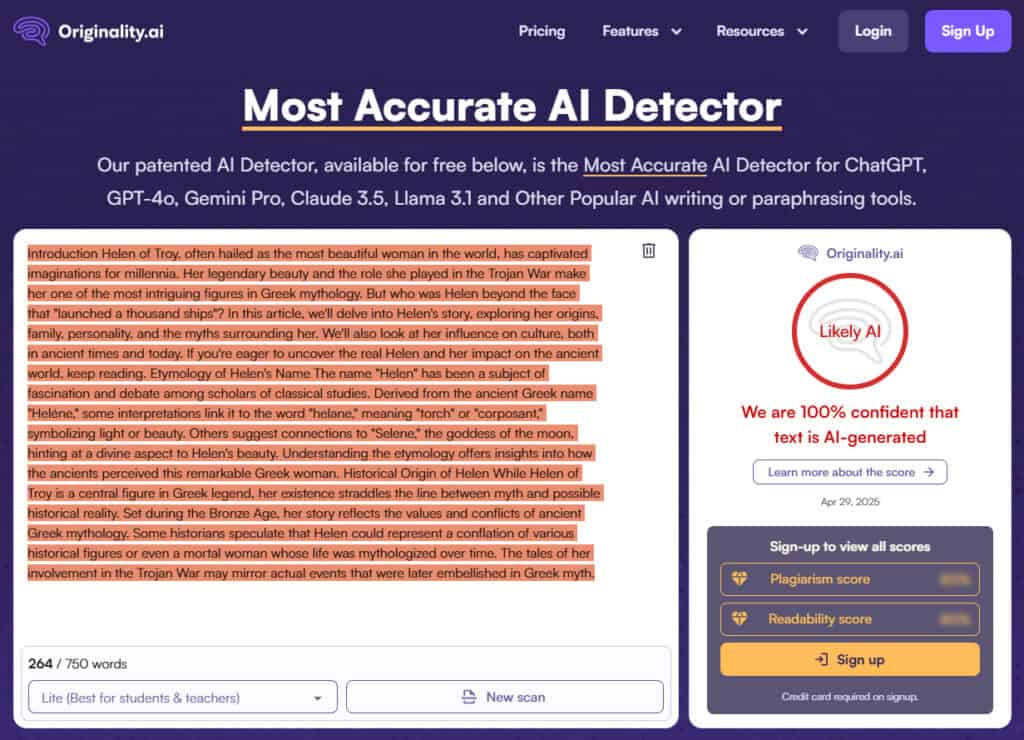

We selected a text sample from a video by The Nerdy Novelist, where the o1-preview model generated a short article titled “Helen of Troy: The Face that Launched a Thousand Ships”.

We focused on the intro and the first two headings, which were 265 words in total.

We threw the text into Undetectable AI, QuillBot, and Originality.ai, just to cover all the bases.

Undetectable AI was not impressed. It flagged 99% of the content as AI-generated and didn’t stop there.

It also predicted that other detectors—like QuillBot, ZeroGPT, and Grammarly—would come to the same conclusion. So, let’s confirm this by running the text through QuillBot.

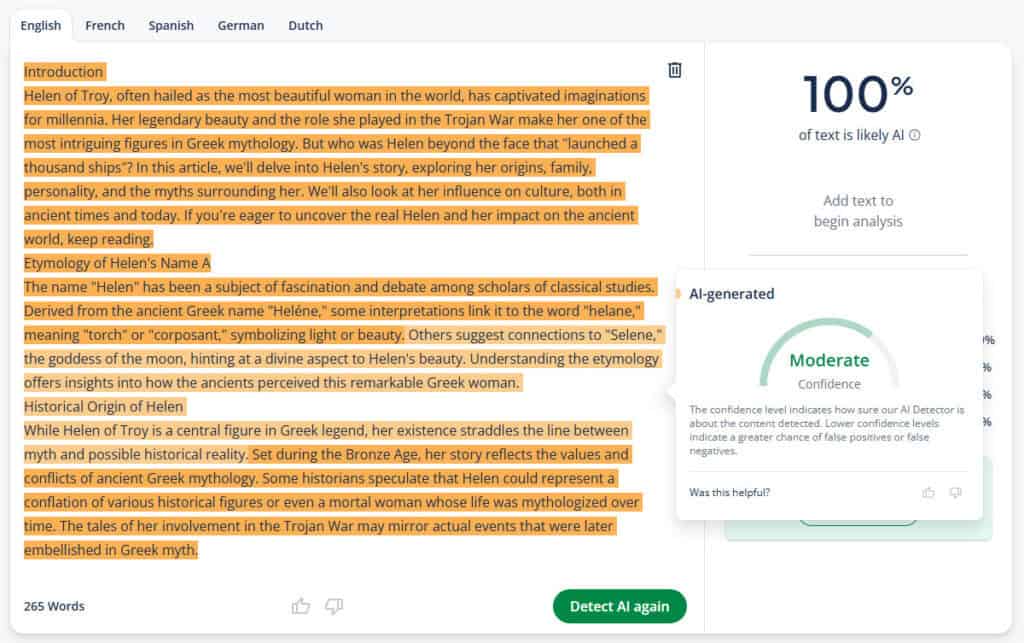

QuillBot followed suit. It flagged the text as 100% likely AI.

But it does give some benefit of the doubt. You can hover over different parts of the text and see its confidence levels: low, moderate, or high.

And then there’s Originality.ai. It came in with 100% confidence that the text was AI-written. Not 98%. Not 99%. A flat 100%. And it doesn’t even hedge with the word “likely.”

Is o1-mini Detectable?

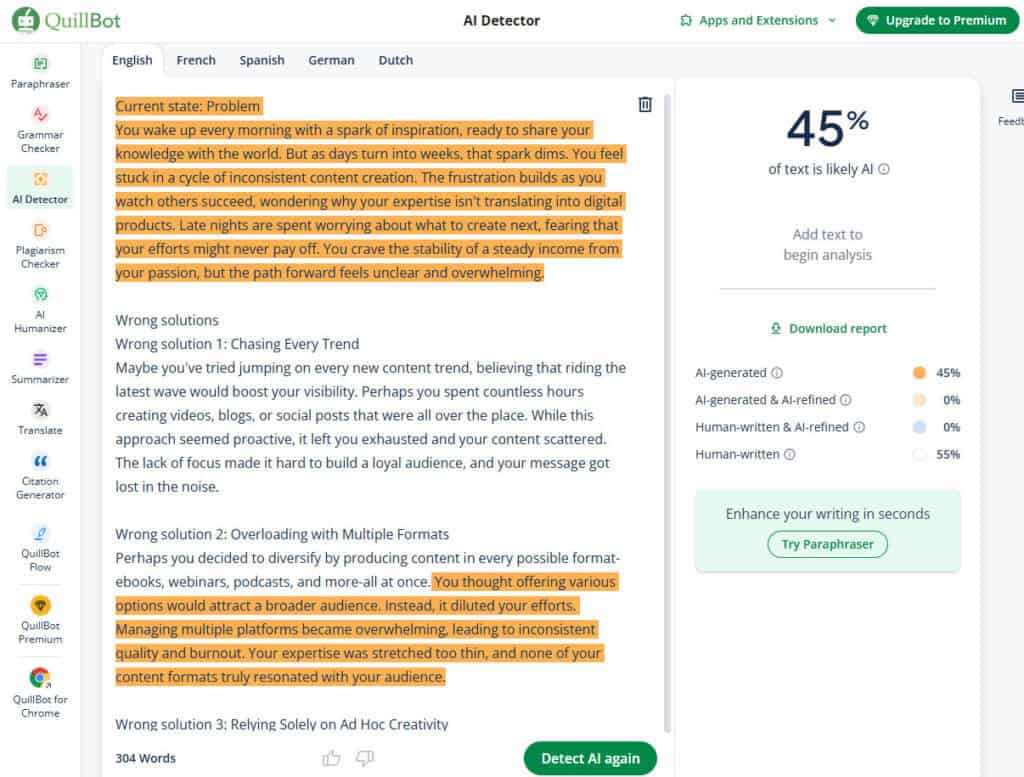

Now let’s talk about o1-mini.

The text we used here is from another YouTuber who used a much better prompt, and it shows. The writing had more personality and a more human flow to it.

Some detectors noticed, too. QuillBot called this one about 45% AI and 55% human. That’s actually a decent result.

If you just gave it a quick read without checking, you might even believe a person wrote it.

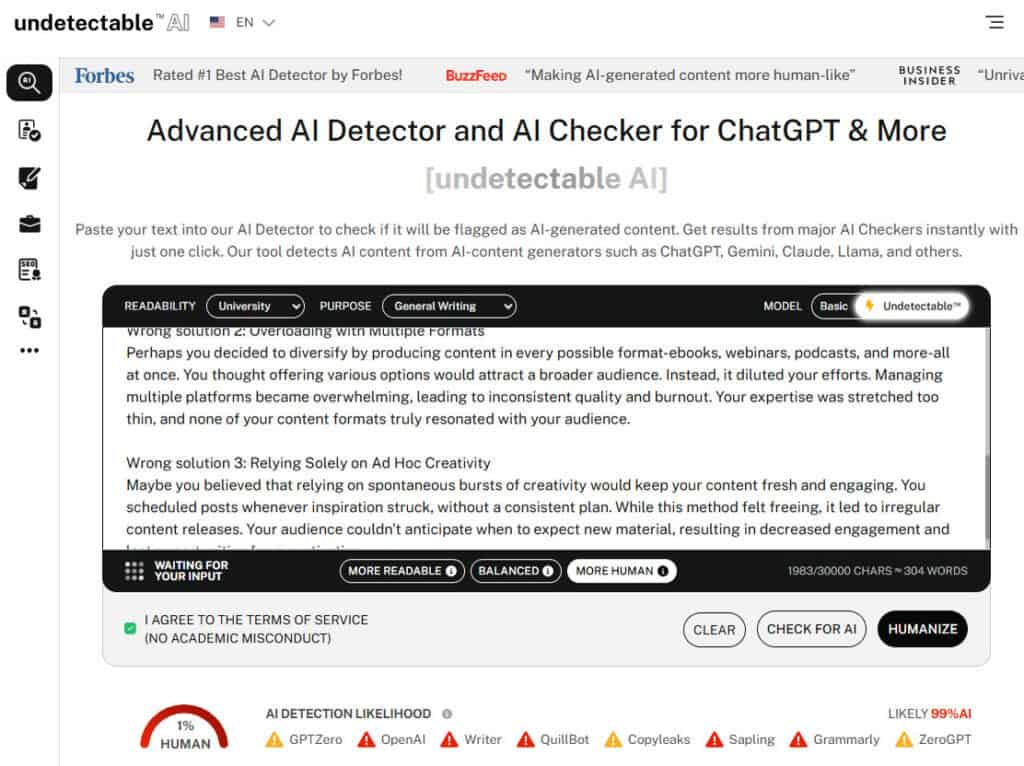

But don’t get too comfortable. Undetectable AI and Originality.ai weren’t fooled.

They both still called it AI with 99% and 100% certainty, respectively. So even with a better prompt, the writing couldn’t clear the fence.

Which brings us to the bottom line: OpenAI’s o1-preview and o1-mini are definitely detectable, especially if you use Undetectable AI’s detector.

You can tweak your prompt, rephrase your sentences, and maybe even fool one tool here and there. But ChatGPT o1 output still has a high chance of being flagged as AI-generated.

How to Bypass AI Detectors with GPT-o1

So you’ve got your shiny new text straight from GPT o1—clean, fast, and eerily coherent. Great. But now comes the real trick: making it look like a human actually wrote it.

Once GPT o1 has done its job, you don’t just post its output raw.

But instead of doing that manually, you can use an AI humanizer.

These tools know how to take robotic-sounding text and give it a little human weirdness. The kind that detectors struggle to decode.

But here’s the thing: plenty of tools out there promise to “humanize” your text but end up making it read like a badly rehashed copy.

That’s why Undetectable AI deserves some spotlight.

Our suite of tools, including Humanizer, Stealth Writer, and Paraphraser, actually understands how AI detectors think.

- Humanizer tweaks the flow and phrasing just enough to fly under the radar.

- Stealth Writer adds variation in sentence structure, which is key for confusing classifiers.

- And the Paraphraser reshapes the text while keeping the original meaning intact.

Basically, these tools know the language of AI, and they know how to throw it off its game.

So, if you’re using GPT o1 for writing, and you want your work to pass as human, don’t skip the post-processing step.

Even a little cleanup using Undetectable AI can make a big difference in beating detection tools.


GPT-o1 vs GPT-4o: Which Is More Detectable?

We already talked about how GPT o1 leans heavily into math and science, while GPT-4o has a bit more finesse when it comes to language. But how do GPT o1 and GPT-4o compare when it comes to writing?

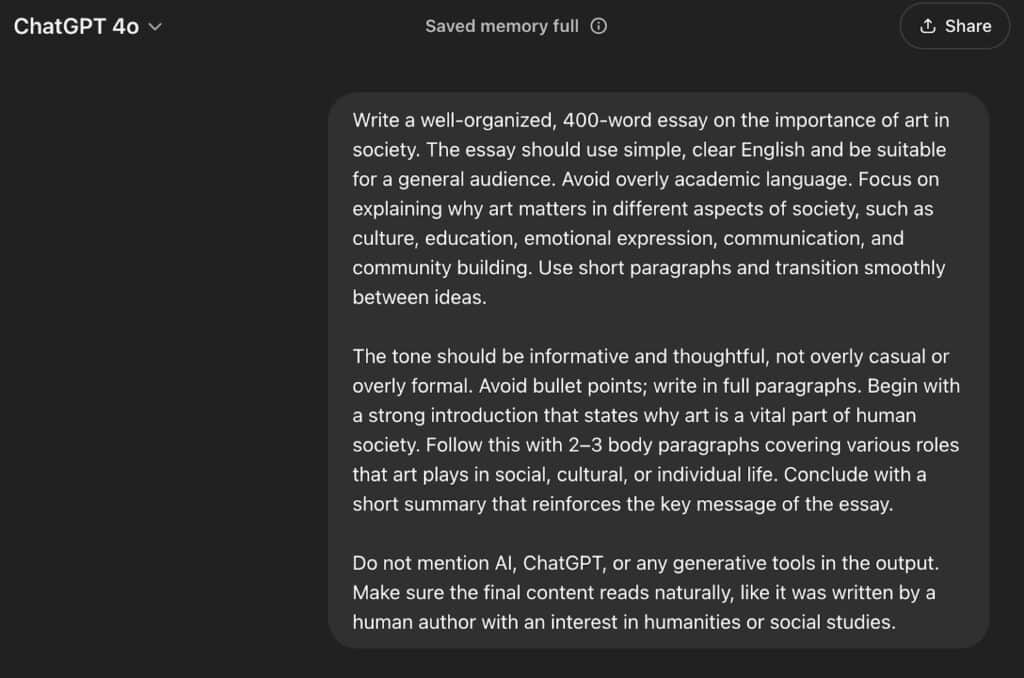

First, we used this prompt to generate 400 words of content with GPT-4o:

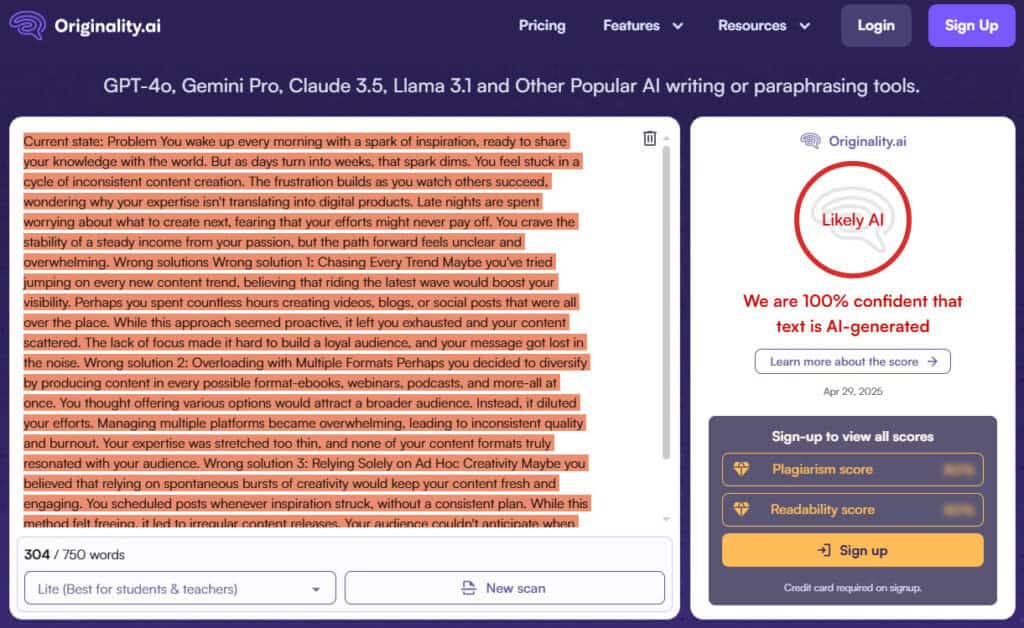

Then, we ran GPT-4o’s output through the same three AI detectors: Undetectable AI, QuillBot, and Originality.ai.

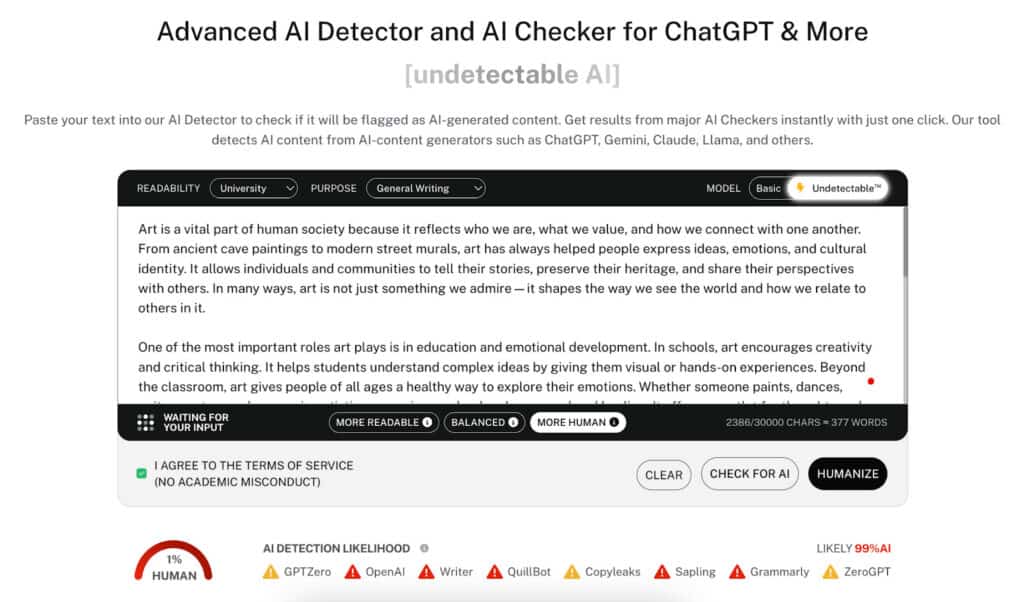

Undetectable AI flagged 99% of the content as AI. That’s some impressive AI detection, isn’t it?

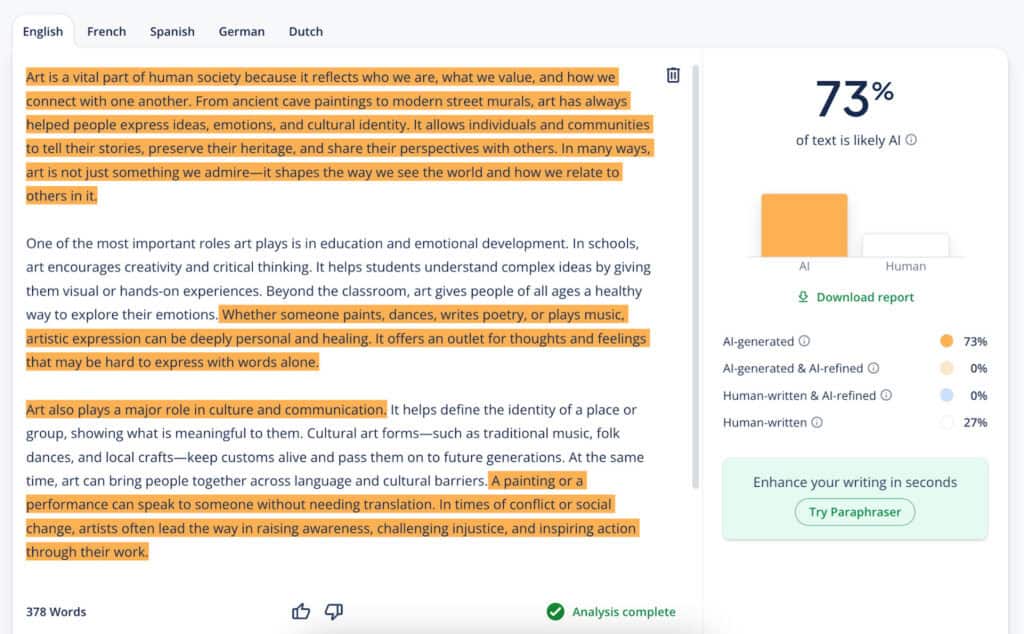

Then QuillBot came in and said, “Not so fast.” It flagged 73% of the same text as AI-generated, an even harsher judgment than it gave to GPT o1-mini, which it scored at 45%.

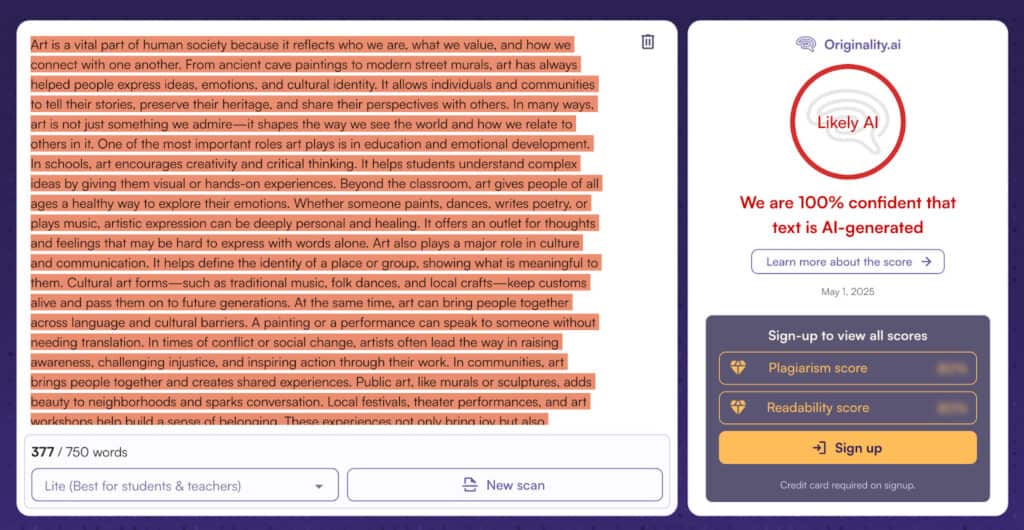

Originality.ai was still stuck in full suspicion mode. It clocked GPT-4o’s content at 100% AI, same as always.

So what does all this mean? AI detectors like Undetectable AI and Originality.ai are good at catching text from both ChatGPT o1 and GPT-4o.

But if we’re keeping score, GPT o1 is the more detectable of the two. Undetectable AI and Originality.ai consistently flagged its output at 99–100% AI, even with improved prompting.

And honestly, that makes sense. GPT o1 wasn’t built to be a writing model. It’s a STEM-first model, built to solve problems.

GPT-4o, on the other hand, knows how to sound more natural, especially when paired with a solid prompt.

So if you’re choosing between the two for writing tasks where stealth matters, GPT-4o is your better shot at slipping past the radar.

You can also use Undetectable AI’s Math Solver to see how models like GPT-o1 handle structured problem solving, since it breaks equations into clear steps that make their reasoning easier to evaluate.

Final Verdict: Is GPT-o1 Detectable?

Our conclusion: yes, GPT o1 is detectable.

Even with decent prompt engineering, the text it generates still gets flagged by most AI detectors.

But to be fair, writing wasn’t the job it was trained to do. GPT o1 was built for STEM-related tasks like solving equations, coding, and crunching data.

So if you’re trying to whip up content that actually sounds human, GPT o1 probably isn’t the model for that. You’re better off using GPT-4o, which has more language fluency, or better yet, using a tool built specifically for writing undetectable AI content.

That’s where Undetectable AI steps in.

Our AI Humanizer rewrites your content in a way that sounds natural, nuanced, and convincingly human.

Whether you’re writing blog posts, essays, or product descriptions, it adapts to your topic without setting off AI detection alarms.

And speaking of alarms, if you want to test how detectable your content really is (whether it’s from GPT o1, GPT-4o, or any other model), our AI Detector is one of the most accurate tools on the market.

So, skip the guesswork.

Try Undetectable AI today.