A line often attributed to Albert Einstein goes, “If we knew what it was we were doing, it would not be called research, would it?”

That holds for most research. When you design experiments and ask the right questions, you’re on track.

But if your results can’t establish cause and effect (without other variables crashing the party), then what you’ve got is a confusing mess, not a conclusion.

Welcome to the world of internal validity.

It’s your alter ego, your conscience, your very own Jiminy Cricket. If your experiment says “This is a success,” the first thing internal validity asks is: Did it, though? It’s the difference between “I think it worked” and “I know it worked, and here’s why.”

But internal validity isn’t just for academics and research. Marketing professionals testing campaign effectiveness, product developers running A/B tests, and even everyday people evaluating health claims all need this skill.

The ability to determine if X truly caused Y (rather than some hidden factor Z) is essential in our data-driven world.

Let’s unpack everything you need to know about internal validity. We’ll explore what it is, why it matters, and how to strengthen it in your own research.

Best of all, we’ll translate complex concepts into real-world examples that make sense.

What Is Internal Validity?

Internal validity is the extent to which you can trust that your study’s findings accurately reflect cause-and-effect relationships.

In simpler terms, it answers this question: “Can I be confident that my independent variable actually caused the changes I observed in my dependent variable?”

Internal validity is like the “truth detector” for your research conclusions.

High internal validity means you’ve successfully ruled out alternative explanations for your results.

You’ve created a research environment where other variables can’t sneak in and confuse your findings.

Take a classic example: A researcher wants to determine if a new teaching method improves test scores.

Students who receive the new method score higher on their finals.

But did the teaching method cause this improvement? Or was it because the teacher unconsciously gave more attention to the experimental group? Perhaps the students who received the new method were already stronger academically?

These questions target the study’s internal validity.

Internal validity doesn’t happen by accident. It requires careful planning, meticulous execution, and honest analysis of potential flaws.

The goal isn’t perfection, as no study is immune to all threats, but rather maximizing confidence in your conclusions through rigorous research design that prioritizes control over confounding variables.

Why Internal Validity Matters

Why should you care about internal validity?

Because without it, you can’t trust the causal conclusions your research draws.

Strong internal validity separates genuine insights from misleading correlations.

For example, pharmaceutical companies spend billions testing new medications. Without internal validity, their trials might appear to support drugs that don’t actually work or miss dangerous side effects.

Policy-makers rely on research to make decisions affecting millions of lives. Educational reforms, public health initiatives, and economic policies all depend on valid research conclusions.

Even in business settings, internal validity matters. A company might attribute increased sales to a new marketing campaign when the real cause was seasonal buying patterns.

Without attention to internal validity, companies make expensive mistakes based on false assumptions.
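The seasonal sales example can be made concrete with a small simulation. This is only a sketch under invented assumptions: the weekly sales figures, the 30% share of holiday weeks, and the campaign schedule are all hypothetical, and the campaign is deliberately given zero true effect.

```python
import random
from statistics import mean

rng = random.Random(7)  # fixed seed so the sketch is reproducible

weeks = []
for _ in range(2000):
    holiday = rng.random() < 0.3
    # The campaign runs far more often in holiday weeks (the hidden factor Z).
    campaign = rng.random() < (0.8 if holiday else 0.2)
    # By construction the campaign has NO effect; only the season moves sales.
    sales = 100 + (40 if holiday else 0) + rng.gauss(0, 10)
    weeks.append((holiday, campaign, sales))

def campaign_gap(rows):
    """Mean sales in campaign weeks minus mean sales in non-campaign weeks."""
    with_campaign = [s for _, c, s in rows if c]
    without_campaign = [s for _, c, s in rows if not c]
    return mean(with_campaign) - mean(without_campaign)

naive = campaign_gap(weeks)
within_holiday = campaign_gap([w for w in weeks if w[0]])
within_regular = campaign_gap([w for w in weeks if not w[0]])

print(f"naive gap:           {naive:.1f}")
print(f"gap, holiday weeks:  {within_holiday:.1f}")
print(f"gap, regular weeks:  {within_regular:.1f}")
```

The naive comparison shows a sizable gap even though the campaign does nothing. Once you compare like with like (holiday weeks against holiday weeks), the gap shrinks toward zero, which is the pattern you would expect when season, not the campaign, drives sales.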

The same applies when you draft a research proposal: you need to show how you’ll control for variables and rule out alternative explanations, because a strong idea means little if the design can’t back it up.

Key Characteristics of High Internal Validity

What does research with strong internal validity look like?

Here are the hallmarks:

- Clear temporal sequence: The cause must precede the effect. This seems obvious, but it can be tricky in observational studies, where it’s not always clear what happened first.

- Consistent, strong relationship: The stronger and more consistent the relationship between variables, the more confidence we can have in causality.

- Appropriate control group: A well-matched control group that differs only in exposure to the independent variable strengthens internal validity.

- Random assignment: When participants are randomly assigned to experimental conditions, pre-existing differences tend to balance out across groups on average, especially with larger samples.

- Experimental control: The researcher maintains tight control over the study environment, minimizing outside influences.

- Consideration of confounding variables: Good research identifies and accounts for variables that might confound the relationship between cause and effect.

- Statistical conclusion validity: Appropriate statistical tests and adequate sample sizes make it less likely that a detected effect is simply due to chance.

These hallmarks come from thoughtful research design at the outset, not damage control after data collection.

Threats to Internal Validity

Even the most carefully designed studies face threats to internal validity. Recognizing these threats is half the battle.

Here are the major culprits:

- History: External events occurring during the study period can influence outcomes. If you’re studying a new teaching method’s effectiveness during a pandemic that disrupts normal learning, external factors may contaminate your results.

- Maturation: Natural changes in participants over time can be mistaken for treatment effects. Children naturally develop language skills as they age, so a study on language acquisition needs to account for this normal development.

- Testing effects: Taking a pre-test can influence performance on post-tests, regardless of any intervention. Participants might perform better simply because they’ve seen similar questions before.

- Instrumentation: Changes in measurement tools or observers can create artificial differences in results. If you switch from one standardized test to another midway through a study, score differences may reflect measurement changes rather than real effects.

- Statistical regression: When participants are selected based on extreme scores, they naturally tend to score closer to the average on subsequent tests. This “regression to the mean” can be misinterpreted as treatment effects.

- Selection bias: When experimental and control groups differ systematically before the intervention, these pre-existing differences (not your independent variable) may explain outcome differences.

- Experimental mortality (attrition): Participants dropping out of a study can skew results, especially if dropout rates differ between experimental and control groups. If the most severely ill patients drop out of a drug trial, the drug may appear more effective than it actually is.

- Diffusion or imitation of treatments: In some studies, control group participants may be exposed to aspects of the experimental treatment, diluting group differences.

Awareness of these threats doesn’t automatically eliminate them.

But it does enable researchers to design studies that minimize their impact or account for them during analysis.
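Of the threats above, regression to the mean is probably the least intuitive, so here is a rough simulation of it. Everything in it is a made-up assumption for illustration: the score scale, the noise level, and the choice to select the lowest-scoring 10% on a pre-test. No treatment is applied at any point.

```python
import random
from statistics import mean

rng = random.Random(1)  # fixed seed so the sketch is reproducible

def observed_score(true_ability):
    """An observed score is stable ability plus random test-day noise."""
    return true_ability + rng.gauss(0, 10)

# Hypothetical population: stable ability centered on 50.
abilities = [rng.gauss(50, 10) for _ in range(5000)]
pretest = [observed_score(a) for a in abilities]

# Select roughly the lowest-scoring 10% on the pre-test.
cutoff = sorted(pretest)[len(pretest) // 10]
selected = [i for i, score in enumerate(pretest) if score <= cutoff]

# Retest the selected group with NO intervention in between.
posttest = {i: observed_score(abilities[i]) for i in selected}

pre_mean = mean(pretest[i] for i in selected)
post_mean = mean(posttest[i] for i in selected)

print(f"selected group, pre-test mean:  {pre_mean:.1f}")
print(f"selected group, post-test mean: {post_mean:.1f}")
```

The selected group scores noticeably higher on the retest even though nothing was done to them, because part of what made their first scores extreme was bad luck on the day. A study without a comparison group could easily mistake that rebound for a treatment effect.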

How to Improve Internal Validity

Strengthening internal validity isn’t just about avoiding threats, but about actively implementing techniques that enhance causal inference.

Here’s how to boost internal validity in your research:

- Randomization: Randomly assign participants to experimental and control groups. This tends to balance potential confounding variables across groups on average, including ones you haven’t measured. For example, in a clinical trial, random assignment helps ensure that factors like age, previous health conditions, and lifestyle habits are balanced between treatment groups.

- Control groups: Include appropriate control or comparison groups that receive either no intervention or a placebo. This allows you to isolate the effects of your independent variable. The gold standard in medical research, the randomized controlled trial, gets much of its strength from well-designed control groups.

- Blinding: Keep participants, researchers, or both (double-blinding) unaware of who received which treatment. This prevents expectation effects from influencing outcomes. In drug trials, both patients and physicians are often kept unaware of who receives active medication versus a placebo.

- Standardized procedures: Create detailed protocols for every aspect of your study and train all researchers to follow them precisely. This reduces variability introduced by inconsistent methods.

- Multiple measures: Use several different methods to measure your dependent variable. If all measures show similar results, you can be more confident in your findings.

- Statistical controls: Use statistical techniques to account for potential confounding variables. Methods like ANCOVA, propensity score matching, or regression analysis can help isolate the effects of your independent variable.

- Pre/post measures: Collect baseline data before your intervention to account for initial differences between groups. This allows you to measure change rather than just end states.

- Pilot testing: Run small-scale tests of your procedures before the main study to identify and correct potential problems. That way, you’ll save time and resources while strengthening your design.

- Manipulation checks: Verify that your independent variable manipulation actually worked as intended. For example, if you’re studying the effect of induced stress, confirm that participants in the stress condition actually felt more stressed.
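To make the randomization item above more concrete, here is a minimal sketch of random assignment followed by a quick baseline balance check. The `randomly_assign` helper, the participant records, and the age range are all hypothetical, and a real study would check more than one baseline trait.

```python
import random
from statistics import mean

def randomly_assign(participants, seed=None):
    """Shuffle a copy of the participants and split it into two halves."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical participants with one pre-existing trait (age).
pool_rng = random.Random(0)
participants = [{"id": i, "age": pool_rng.randint(18, 70)} for i in range(1000)]

treatment, control = randomly_assign(participants, seed=42)

# Balance check: with random assignment, baseline traits should look similar.
treatment_age = mean(p["age"] for p in treatment)
control_age = mean(p["age"] for p in control)

print(f"treatment: n={len(treatment)}, mean age={treatment_age:.1f}")
print(f"control:   n={len(control)}, mean age={control_age:.1f}")
```

Recording the seed makes the assignment reproducible and auditable. The balance check is a sanity check rather than a guarantee: small samples can still end up lopsided by chance, which is one reason baseline measures and statistical controls are listed alongside randomization.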

Remember that improving internal validity often requires trade-offs with other research goals.

For instance, tightly controlled laboratory studies may have strong internal validity but weaker external validity (generalizability to real-world settings).

Internal vs. External Validity

Internal and external validity represent two sides of the research quality coin. While often discussed together, they address fundamentally different questions:

Internal validity asks: “Can I trust that my independent variable caused the observed changes in my dependent variable?”

External validity asks: “Can I generalize these findings beyond this specific study to other people, settings, and situations?”

These two forms of validity often conflict. Studies conducted in highly controlled laboratory environments might have excellent internal validity, where you can be confident about causality. But the artificial setting limits how well the findings translate to real-world contexts, reducing external validity.

In contrast, field studies conducted in natural settings may have strong external validity. The findings are more likely to apply to real-world situations.

However, the lack of control over external variables weakens internal validity, especially when relying heavily on observational data or a single primary source without replication.

Consider these differences:

| Internal Validity | External Validity |
| --- | --- |
| Focuses on causal relationships | Focuses on generalizability |
| Enhanced by controlled environments | Enhanced by realistic settings |
| Strengthened by random assignment | Strengthened by representative sampling |
| Threatened by confounding variables | Threatened by artificial conditions |
| Asks, “Did X cause Y?” | Asks, “Would X cause Y elsewhere?” |

The ideal research program balances both types of validity. You might start with tightly controlled laboratory experiments to establish causality (internal validity).

Then you progressively test your findings in more natural settings to establish generalizability (external validity).

Neither type of validity is inherently more important than the other. Their relative importance depends on your research goals.

If you’re developing fundamental theories about human behavior, internal validity might be prioritized.

If you’re testing an intervention intended for widespread implementation, external validity becomes especially important.

Real-Life Examples of Internal Validity

Abstract discussions of validity can feel removed from everyday research challenges.

Let’s examine real-world examples that illustrate internal validity concepts:

Example 1: The Stanford Prison Experiment

Philip Zimbardo’s infamous 1971 study suffered from several internal validity problems. The researcher played dual roles as prison superintendent and primary investigator, introducing experimenter bias.

There was no control group for comparison. Participants were aware of the study’s goals, creating demand characteristics.

These issues make it difficult to conclude that the prison setting alone caused the observed behavioral changes.

Example 2: Vaccine Efficacy Trials

COVID-19 vaccine trials demonstrated strong internal validity through several design elements:

- Large sample sizes (tens of thousands of participants)

- Random assignment to vaccine or placebo groups

- Double-blinding (neither participants nor researchers knew who received the actual vaccine)

- Clear, objective outcome measures (laboratory-confirmed COVID-19 cases)

- Pre-registered analysis plans

These features allowed researchers to confidently attribute differences in infection rates to the vaccines themselves rather than other factors.

How AI Tools Can Help in Research Design

AI tools, such as those from Undetectable AI, are increasingly used to support research design and research paper writing.

These tools help researchers identify potential threats to validity and design more robust studies.

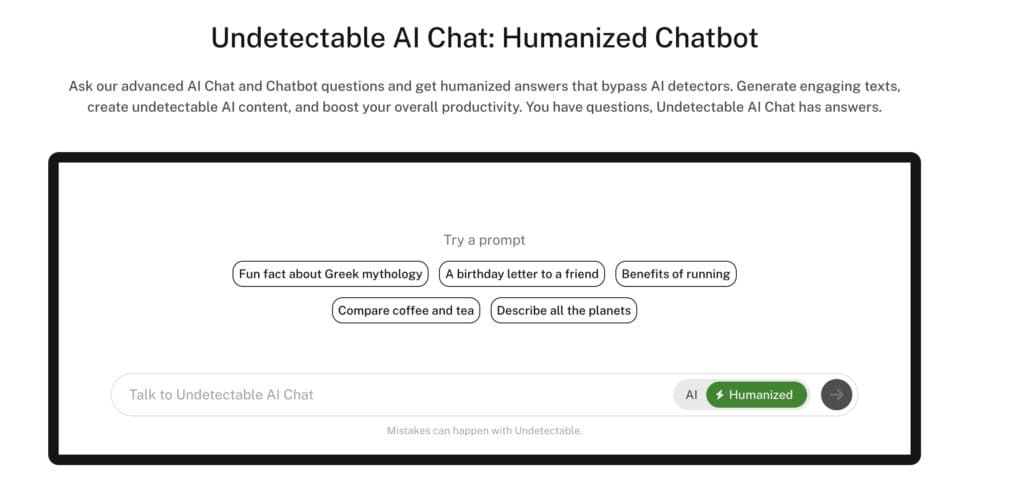

Undetectable AI’s AI Chat offers study design suggestions that reduce bias. This tool can:

- Analyze proposed methodologies for potential confounding variables

- Generate balanced experimental designs with appropriate controls

- Suggest randomization strategies tailored to specific research questions

- Identify possible sources of measurement error

- Recommend statistical approaches for controlling extraneous variables

For example, a researcher planning a study on workplace productivity might ask AI Chat to evaluate their design.

The tool could flag potential history threats (like seasonal business fluctuations) that the researcher hadn’t considered.

It might then suggest a design that helps control for these time-related factors, such as adding a concurrent control group or counterbalancing the order of conditions.

While these tools can’t replace researcher expertise, they serve as valuable thinking partners.

They help catch design flaws before data collection begins, when corrections are still possible.


No Validity, No Verdict

Internal validity is key to credible research. Without it, we can’t confidently link cause and effect.

While flawless design is rare, careful planning can reduce bias and strengthen your conclusions.

Key reminders:

- Internal validity determines how much we can trust causal claims.

- Threats like selection bias, maturation, and testing effects can distort results.

- Tools like randomization, control groups, and blinding help guard against these threats.

- Balancing internal and external validity is often a trade-off.

- Real-world studies show just how critical internal validity is, whether in labs or public health policies.

As you design or review studies, prioritize internal validity, as it’s what separates real insights from misleading claims.
