AI detectors are like the Voight-Kampff test from Blade Runner, a tool used to distinguish between real and artificial humans.

But rather than asking emotional questions and scanning eye movement, AI detectors rely on machine learning (ML) and natural language processing (NLP) to identify AI-generated content.

Ironic, right?

ChatGPT and other well-known AI tools use the same ML and NLP techniques to generate content.

It’s like building two houses from the same blueprint, then accusing one of being a copy.

So, how do AI detectors really work? And what do they mean for writers like you? Let’s find out.

Key Takeaways

Before we go down this rabbit hole, here are the essential points to remember:

- Detection isn’t perfect. Even the best AI content detectors get things wrong. False positives and false negatives happen regularly.

- Accuracy varies wildly. Some detectors barely perform better than random guessing. Others achieve decent results but still make significant errors.

- Context matters more than you think. Writing style, topic complexity, and content length all affect detection accuracy.

- Hybrid approaches work better. Tools that combine detection with content rewriting offer more practical solutions than detection alone.

- Transparency is rare. Most companies don’t publish real accuracy metrics. When they do, the numbers are often misleading.

How Do AI Content Detectors Work?

AI content detectors are essentially pattern recognition systems. They’re trained on massive datasets of human-written and AI-generated text.

The goal is simple: learn to spot the differences.

But here’s where it gets complicated.


These tools look for specific patterns in writing, such as sentence structure, word choice, and paragraph flow. They assign probability scores based on how “AI-like” the text appears.

The problem? Human writing and AI writing are getting harder to distinguish. Modern AI models like GPT-4o can produce text that’s remarkably human-like.

This creates a fundamental challenge for detection systems.

Most detectors use one of two approaches. The first is perplexity analysis, which measures how “surprised” a language model is by each word choice.

AI tends to pick more predictable words, which produces low perplexity, while human word choices are less predictable.
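To make perplexity concrete, here’s a minimal Python sketch of the standard formula: the exponential of the average negative log-probability per token. It assumes you already have the probability a language model assigned to each token; the probability lists below are made-up numbers, not output from any real model or detector.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Return exp(mean negative log-probability) over the tokens.

    Lower values mean the model found the text more predictable.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical per-token probabilities (not from a real model).
predictable_text = [0.9, 0.8, 0.85, 0.9]  # model is rarely "surprised"
surprising_text = [0.2, 0.05, 0.3, 0.1]   # model is often "surprised"

print(round(perplexity(predictable_text), 2))
print(round(perplexity(surprising_text), 2))
```

Under this reading, the first sequence looks more “AI-like” because its perplexity is lower. Real detectors layer this signal with others and calibrated thresholds rather than using it alone.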

The second approach is burstiness detection. This looks at variation in sentence length and complexity. Humans tend to write with more variation. AI often produces more consistent patterns.
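There’s no single official burstiness formula. One simple proxy, which we assume here purely for illustration, is the coefficient of variation of sentence length (standard deviation divided by mean). The naive sentence splitter and the sample sentences are also our own assumptions.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, or ? and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool scans text. The tool finds patterns. The tool reports scores."
varied = "It works. But when the text mixes short and very long sentences, the score rises. Odd."

print(burstiness(uniform), burstiness(varied))
```

The uniform passage scores zero because every sentence has four words, while the varied one scores well above it.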

Neither approach is foolproof. Good AI writing can mimic human randomness.

Human writing can sometimes appear very systematic.

What Should Be Considered AI Content?

This question is trickier than it seems.

Is content that’s 50% AI-generated considered AI content? What about human-written content that’s been edited by AI?

The industry hasn’t settled on clear definitions. Some detectors flag any content with AI assistance. Others only flag fully AI-generated text.

This inconsistency makes accuracy comparisons nearly impossible.

Consider these scenarios:

- A human writes a draft, then uses AI to improve grammar and flow. Is this AI content?

- Someone uses AI to generate ideas, then writes everything from scratch. AI content or not?

- A writer uses AI to create an outline, then writes original content following that structure. Does that count?

These edge cases reveal why accuracy metrics can be misleading. Different tools define “AI content” differently, and that directly shapes their reported accuracy rates.

For practical purposes, most tools focus on detecting content that’s primarily AI-generated. But the boundaries remain fuzzy.

What Makes an AI Content Detector “Accurate”?

Accuracy in AI detection isn’t just about getting the right answer. It’s about getting the right answer consistently, across different types of content and use cases.

Consistency is exactly where many detectors fall short, which is why some people dismiss these tools altogether.

Some argue that these detectors can be as inconsistent as a fortune cookie’s predictions, raising important questions about reliability and trust.

But true accuracy requires balancing two types of errors. False positives happen when human content gets flagged as AI.

False negatives occur when AI content passes as human-written.

The cost of these errors varies by context. For academic integrity, false positives can destroy student trust.

For content marketing, false negatives might lead to penalties from search engines.
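Both error types come from the same decision threshold, so you can’t push one down without pushing the other up. This minimal Python sketch shows the trade-off. The scores, labels, and thresholds are invented for illustration, and real detectors may use different cutoffs or calibration.

```python
def error_counts(
    scores: list[float], is_ai: list[bool], threshold: float
) -> tuple[int, int]:
    """Count (false positives, false negatives) at a given decision threshold.

    A sample is flagged as AI when its score is >= threshold.
    """
    false_pos = sum(1 for s, ai in zip(scores, is_ai) if s >= threshold and not ai)
    false_neg = sum(1 for s, ai in zip(scores, is_ai) if s < threshold and ai)
    return false_pos, false_neg


# Hypothetical detector scores (probability the text is AI) and true labels.
scores = [0.95, 0.80, 0.65, 0.40, 0.70, 0.30, 0.20, 0.10]
is_ai = [True, True, True, True, False, False, False, False]

for threshold in (0.3, 0.5, 0.9):
    fp, fn = error_counts(scores, is_ai, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

A higher threshold suits settings where false positives are costly, such as academic integrity checks. A lower one catches more AI text at the price of flagging more human writers.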

Accuracy also depends on the quality of training data. Detectors trained on older AI models might struggle with newer, more sophisticated AI writing.

This creates a constant arms race between detection and generation.

The best detectors consider multiple factors:

- Statistical patterns in word usage and sentence structure

- Semantic coherence and logical flow

- Writing style consistency throughout the content

- Domain-specific knowledge and expertise demonstration
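A common way to merge several signals like these is a weighted sum passed through a logistic (sigmoid) function, as in logistic regression. The sketch below assumes three hypothetical features, already scaled from 0 to 1 so that higher means more AI-like, plus hand-picked weights and bias. In a real detector the weights would be learned from labeled data, and we aren’t claiming any specific tool works exactly this way.

```python
import math


def combined_ai_score(
    features: dict[str, float], weights: dict[str, float], bias: float = 0.0
) -> float:
    """Weighted sum of feature values squashed to (0, 1) by a logistic sigmoid."""
    z = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical feature names and weights, chosen only for illustration.
weights = {"low_perplexity": 2.0, "low_burstiness": 1.5, "style_uniformity": 1.0}
features = {"low_perplexity": 0.8, "low_burstiness": 0.6, "style_uniformity": 0.7}

print(round(combined_ai_score(features, weights, bias=-2.0), 3))
```

The appeal of this design is that no single signal decides the outcome: a text with low perplexity but highly varied sentences can still land near the middle of the scale.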

But even comprehensive approaches have limitations. Human writing varies enormously. Some people naturally write in patterns that trigger AI detectors.

Others can mimic AI-like consistency.

The goal isn’t perfect accuracy. It’s reliable accuracy that serves your specific needs.

And while no detector is flawless, the right tool can make the difference between a confident submission and a flagged document.

Undetectable AI’s Detector and Humanizer work together in a single workflow, offering a balanced approach that not only identifies AI-generated text but also rewrites it naturally.

With this integrated workflow, you get accurate detection and a practical fix in one seamless experience.

Try the Undetectable AI Detector and Humanizer today and experience the confidence of AI-free, authentic content that’s ready for any challenge.

How We Measure Our AI Detector Accuracy

Most companies throw around accuracy percentages without explaining how they calculated them. We believe in transparency.

Our accuracy testing follows a rigorous methodology.

We use diverse datasets that include content from multiple AI models, human writers of different skill levels, and various content types.

Here’s our testing process:

- Dataset Creation: Thousands of text samples, both AI-generated and human-written, covering academic essays, marketing copy, creative writing, and technical documentation. We sourced AI content straight from leading models and curated human text for broad representation.

- Blind Testing: Our detector analyzes each sample without knowing its source, outputting confidence scores and classifications (AI vs human).

- Statistical Analysis: From there, we calculate:

  - True positives, false positives, true negatives, and false negatives

  - Precision, recall, and F1-score, which are standard metrics in machine learning evaluation.

- Cross-Validation: We test across content types and lengths to gauge performance in real-world use cases: academic, marketing, technical, and creative. Studies show creative writing is the hardest to detect accurately, so we pay extra attention to it.

- Continuous Monitoring: AI models evolve fast. Detectors trained on older data underperform on newer outputs. We track performance over time and retrain when needed to maintain accuracy.
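The statistical-analysis step maps onto standard formulas: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is the harmonic mean of the two. Here’s a minimal Python sketch of those formulas, treating “AI-generated” as the positive class (our assumption for the example). It isn’t our internal evaluation code, and the labels are made up.

```python
def evaluate(predicted_ai: list[bool], actually_ai: list[bool]) -> dict[str, float]:
    """Confusion-matrix counts plus precision, recall, F1, and accuracy.

    "Positive" means the sample is AI-generated.
    """
    pairs = list(zip(predicted_ai, actually_ai))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    tn = sum(1 for p, a in pairs if not p and not a)
    fn = sum(1 for p, a in pairs if not p and a)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "tp": tp, "fp": fp, "tn": tn, "fn": fn,
        "precision": precision, "recall": recall, "f1": f1,
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
    }


# Hypothetical blind-test outcomes.
predicted = [True, True, True, False, False, False]
actual = [True, True, False, True, False, False]
print(evaluate(predicted, actual))
```

Precision answers “when the detector says AI, how often is it right?”, which tracks false positives. Recall answers “how much of the AI text did it catch?”, which tracks false negatives.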

Our current testing shows significant variation based on content type.

Academic writing is easiest to detect accurately. Creative writing poses the biggest challenges.
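One simple way to surface this variation is to break accuracy out by content type instead of reporting a single number. The sketch below does that. The `(content_type, predicted_ai, actually_ai)` tuple format and the sample outcomes are hypothetical, chosen only to illustrate the calculation, and are not our real test results.

```python
from collections import defaultdict


def accuracy_by_content_type(
    results: list[tuple[str, bool, bool]],
) -> dict[str, float]:
    """results holds (content_type, predicted_ai, actually_ai) for each sample."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for content_type, predicted, actual in results:
        total[content_type] += 1
        correct[content_type] += int(predicted == actual)
    return {ctype: correct[ctype] / total[ctype] for ctype in total}


# Hypothetical outcomes, not real Undetectable AI results.
results = [
    ("academic", True, True), ("academic", False, False),
    ("academic", True, True), ("academic", False, False),
    ("creative", True, False), ("creative", False, True),
    ("creative", True, True), ("creative", False, False),
]
print(accuracy_by_content_type(results))
```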

Current Accuracy of Our AI Content Detector

With Undetectable AI, we don’t rely on hearsay. We place our detector under the spotlight ourselves:

- Industry-leading external validation: Independent tests show Undetectable AI’s detector achieves 85 to 95% accuracy on mixed human and AI content, rivaling top-tier tools in the field.

- Paraphrase detection prowess: Research indicates Undetectable AI accurately spots paraphrased AI text 100% of the time in multiple tool comparisons, including free tools like Sapling and QuillBot.

- In-house testing: In Undetectable AI’s own head-to-head test against GPTZero, our detector correctly flagged 99% of AI-generated content, while GPTZero flagged only 85%.

- Humanizer effectiveness: When users humanize AI text with Undetectable AI, detection rates from traditional detectors (like Originality.ai) drop from over 90% to under 30%, which demonstrates the strength of our rewriting model.

- Backed by millions: Rated the number one AI detector by Forbes, with over 4 million users and free usage across platforms.

In real terms, that means Undetectable AI delivers top-tier detection accuracy and pairs it with a state-of-the-art humanizer for seamless rewriting.

Test your content now — free scan with our AI Detector. Start with confidence: check your writing, get instant insights, and take action.

Why We Pair AI Detector + Humanizer

Detection alone isn’t enough. Knowing that content might be AI-generated doesn’t solve the underlying problem.

You need actionable solutions.

That’s why we built our platform around the detector-humanizer workflow. Instead of just flagging potential AI content, we help you address it.

Here’s how the paired approach works:

- Detection First: Our AI detector analyzes your content and identifies sections that might be AI-generated. You get specific confidence scores for different paragraphs.

- Targeted Rewriting: Our humanizer focuses on the flagged sections. Rather than rewriting everything, it intelligently modifies only the parts that need improvement.

- Verification Loop: After humanization, we run detection again to confirm the content now reads as human-written.

- Quality Preservation: The process maintains your original meaning and style while reducing AI detection signatures.
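The detect, rewrite, verify cycle above is essentially a control loop. Below is a minimal Python sketch of that control flow only. It assumes `detect` and `humanize` are hypothetical callables (toy keyword stubs here), and the 0.5 threshold and three-round cap are arbitrary choices. It is not our production code and says nothing about how the real humanizer rewrites text.

```python
from typing import Callable


def detect_and_humanize(
    paragraphs: list[str],
    detect: Callable[[str], float],
    humanize: Callable[[str], str],
    threshold: float = 0.5,
    max_rounds: int = 3,
) -> tuple[list[str], list[float]]:
    """Rewrite only flagged paragraphs, re-running detection after each round."""
    result = list(paragraphs)  # copy so the caller's list is untouched
    for _ in range(max_rounds):
        scores = [detect(p) for p in result]  # detection first
        flagged = [i for i, s in enumerate(scores) if s >= threshold]
        if not flagged:
            return result, scores  # verification passed
        for i in flagged:
            result[i] = humanize(result[i])  # targeted rewriting
    return result, [detect(p) for p in result]  # final check after last round


# Hypothetical toy stand-ins, NOT Undetectable AI's real models or API.
def toy_detect(text: str) -> float:
    return 0.9 if "delve" in text else 0.1


def toy_humanize(text: str) -> str:
    return text.replace("delve", "dig")


paragraphs = ["Let us delve into this.", "I wrote this myself."]
print(detect_and_humanize(paragraphs, toy_detect, toy_humanize))
```

The round cap matters: if a rewrite never clears the threshold, the loop stops and returns the last scores instead of spinning forever. Unflagged paragraphs are never touched, which is what keeps the rewriting targeted.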

This workflow solves real problems. Content creators can ensure their work won’t trigger false positives. Students can verify that their writing appears authentically human.

Marketers can produce content that passes detection while maintaining quality.

The alternative is pure detection, which leaves you with problems but no solutions.

Knowing content might be AI-generated doesn’t help if you can’t fix it.

How We Compare to Other AI Content Detectors

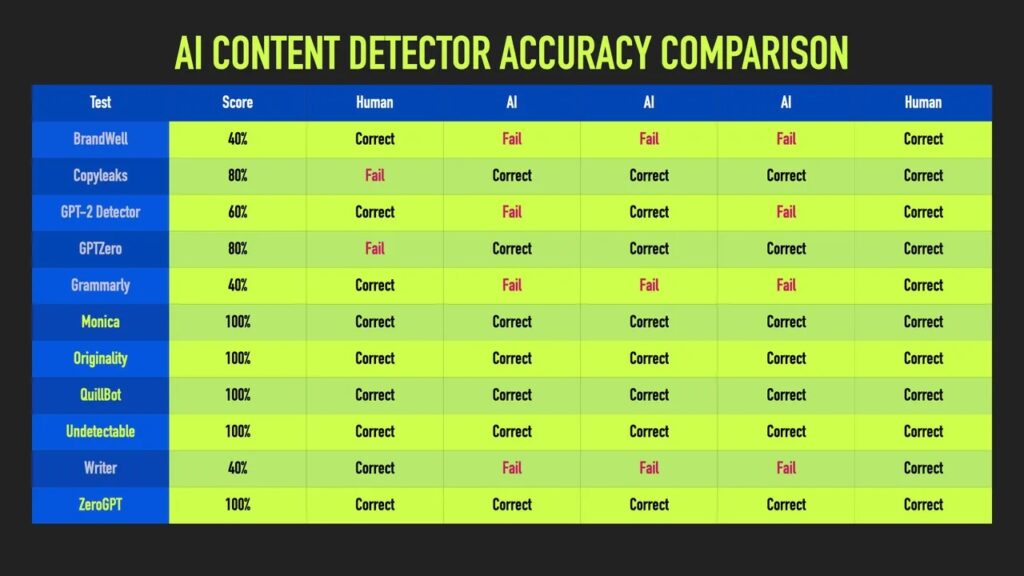

The AI detection landscape is cluttered with tools making ambitious accuracy claims. Independent testing shines a clearer light on what actually works.

ZDNet’s deep dive into 10 major AI content detectors involved submitting the same AI-generated samples to each tool and checking which consistently identified AI-written text.

Many detectors fell short. Some that claimed near-perfect accuracy barely scored better than chance when tested on real-world content.

Undetectable AI, however, stood out and landed in the top five for consistent AI-text detection across all samples.

Of the 10 detectors ZDNet tested, only three flagged AI text 100% of the time across all samples.

Notably, our performance remained solid regardless of content type, not just on curated, easy examples. Here’s what sets us apart:

- Consistent performance across a wide range of AI models and content types. While competitors often excel in narrow conditions, we maintain accuracy across the board.

- Clear methodology. We explain our testing procedures and update performance metrics regularly with no vague claims.

- Integrated solutions. We link detection with rewriting via our Humanizer. Purely detection-focused tools leave you with problems but no fixes.

- Frequent retraining. We continuously retrain our models as AI generation evolves. Static detectors quickly lose relevance.

- Honest limitations. We clearly communicate challenges and edge cases. Overpromising leads to user frustration and poor decisions.

ZDNet’s study emphasized a key point: consistency trumps flashy highs. A detector that is reliable 95% of the time beats one that hits 99% occasionally but crashes to 60% in other contexts.
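The consistency argument is easy to check with numbers. This Python sketch compares each detector’s mean accuracy, worst case, and spread across contexts. The per-context figures are made up to mirror the 95% versus 99%-then-60% example, not measured results.

```python
import statistics


def summarize(per_context_accuracy: dict[str, float]) -> dict[str, float]:
    """Mean, worst case, and spread of a detector's accuracy across contexts."""
    values = list(per_context_accuracy.values())
    return {
        "mean": statistics.mean(values),
        "worst": min(values),
        "spread": max(values) - min(values),
    }


# Hypothetical per-context accuracy for two detectors.
steady = {"academic": 0.95, "marketing": 0.94, "technical": 0.96, "creative": 0.95}
spiky = {"academic": 0.99, "marketing": 0.99, "technical": 0.98, "creative": 0.60}

for name, acc in (("steady", steady), ("spiky", spiky)):
    print(name, summarize(acc))
```

The spiky detector has the higher best case, but a lower average and a much worse floor. That floor is where a false result costs you.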


Trust, Transparency, and Tools That Deliver

AI content detector accuracy is more than just numbers. It’s about understanding what these tools can and cannot do reliably.

Detection technology is promising but imperfect. Even the best tools make mistakes. Knowing these limitations helps you use them wisely.

The future of AI detection looks toward multi-modal analysis, behavioral patterns, and collaborative verification.

For now, detection tools like Undetectable AI should be treated as helpful assistants, not final judges. Combine them with human judgment and choose solutions that fit your needs.

Pure detection rarely solves real problems. That’s why Undetectable AI offers an integrated workflow that balances detection with content improvement.

The goal is not to eliminate AI from writing but to ensure transparency, maintain quality, and preserve trust.

Understanding detector accuracy, especially with Undetectable AI, puts you in control of the process.