There’s one thing that’s been on everyone’s lips for the past couple of years: ChatGPT.

Teachers worry that it’s writing students’ homework.

Writers fear AI might write books and stories in their place.

Coders are scared it will build websites and apps better than humans can.

Some even predict that millions of jobs could disappear because of AI.

We’ve all heard of it. But have you ever wondered how ChatGPT works?

How does ChatGPT write a kid’s homework? What’s happening behind the scenes when you type a question and it replies like a real person?

How can a machine write poems, stories, and code, even though it doesn’t understand words the way we do?

In this blog post, we’ll look inside ChatGPT and explain what it is and how it works, step by step.

You’ll learn how it remembers things, what its limits are, and how tools like this are built in the first place.

Let’s get started!

The Foundation: GPT Language Models

ChatGPT is an AI built to process and generate text that sounds like a human wrote it.

It’s called a language model because it works with language – reading it, predicting it, and generating it.

But it doesn’t understand like people do. It doesn’t think. It doesn’t know facts. It just looks at patterns.


For example,

- If you type, “The sky is…”

- It might say “blue.”

Not because it knows the sky is blue, but because it saw that sentence millions of times during training. It learned that “blue” often comes after “the sky is.”
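To make the “patterns, not knowledge” idea concrete, here’s a toy sketch that picks the next word purely by counting which word most often followed “the sky is” in a tiny, made-up corpus. Real LLMs use neural networks over tokens rather than raw word counts, so treat this as an analogy, not as how ChatGPT is actually implemented.

```python
from collections import Counter

# A tiny, made-up "training corpus". Real models see billions of sentences.
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the sky is blue and bright",
    "the sea is blue",
]


def predict_next(prompt: str, sentences: list[str]) -> str:
    """Return the word that most often follows `prompt` in `sentences`."""
    prompt_words = prompt.split()
    n = len(prompt_words)
    followers = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Check every position where the prompt could start and still have a next word.
        for i in range(len(words) - n):
            if words[i:i + n] == prompt_words:
                followers[words[i + n]] += 1
    if not followers:
        return "<unknown>"
    word, _count = followers.most_common(1)[0]
    return word


print(predict_next("the sky is", corpus))  # blue
```

The function has no idea what a sky is; “blue” wins only because it shows up most often after those three words.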

This type of AI is called an LLM, which stands for Large Language Model.

It’s trained on tons of textbooks, websites, and more to figure out how humans use words. But it’s not reading for meaning. It’s learning how words usually show up next to each other.

GPT is a specific kind of LLM.

GPT stands for “Generative Pre-trained Transformer.”

- Generative: it can create new text.

- Pre-trained: it learns from huge amounts of text before it ever talks to you.

- Transformer: the neural network architecture behind ChatGPT, which helps it work out how words relate to each other in a sentence, a paragraph, or even a whole conversation.

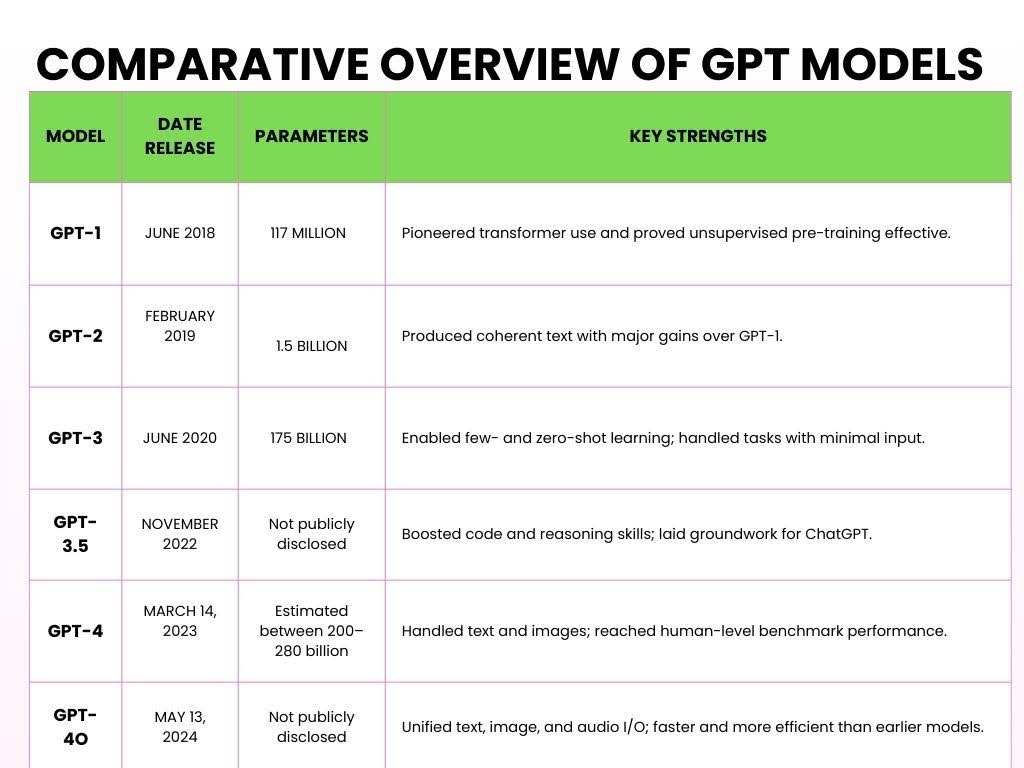

Here are the different versions of GPT that have been launched over the years.

How ChatGPT Works

Here’s a simplified breakdown of how ChatGPT works and processes your input in five key steps.

- Step 1: Pretraining on Massive Data

Large Language Models (LLMs) like ChatGPT are pre-trained by processing vast amounts of text from the internet to learn language patterns.

During pretraining, the model processes trillions of tokens (tiny pieces of text).

A token can be a word, part of a word, or even punctuation, depending on how the model tokenizes the input.

For example,

You ask ChatGPT a math question, such as:

Problem:

- 2 + 3 = ?

During its training, ChatGPT reads hundreds of billions of words from books, news articles, Wikipedia, stories, science papers, and even Reddit threads.

All that reading is how it picks up the patterns behind addition.

For example,

It might pick up the commutative property (e.g., 2 + 3 = 3 + 2) from text that states or uses it.

ChatGPT sees many examples like

- “2 + 3 = 5,”

- “7 + 8 = 15,”

- “9 + 4 = 13.”

It doesn’t just memorize these specific examples; it learns the general pattern of addition.

It picks up how numbers interact with the “+” symbol and what kind of result typically follows the “=” symbol.
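Seeing an example like “2 + 3 = 5” during training really means turning it into a series of “guess the next token” exercises. The sketch below shows that conversion, assuming a simplified tokenization where each number and symbol is one token (real tokenizers may split text differently).

```python
def next_token_examples(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Turn one tokenized sentence into (context, next token) training pairs."""
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[:i]   # everything seen so far
        target = tokens[i]     # the token the model should predict next
        examples.append((context, target))
    return examples


# Assumed tokenization: one token per number or symbol.
sentence = ["2", "+", "3", "=", "5"]
for context, target in next_token_examples(sentence):
    print(" ".join(context), "->", target)
```

The last pair is the one that rewards the model for predicting “5” after “2 + 3 =”. Repeat that across many different sums, and the pattern of addition starts to emerge.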

- Step 2: The Transformer Architecture

To make sense of all that text, ChatGPT needs a “brain.” That brain is a neural network architecture called the Transformer.

What sets the Transformer apart is its ability to focus attention on the most relevant parts of the input, loosely similar to how we zero in on key words when we read.

For example,

Let’s apply this to a math problem:

- “What is the sum of 5 and 7?”

When processing this, the Transformer doesn’t just go word by word.

Instead, it looks at the full context — “sum,” “5,” and “7” — all at once.

It recognizes that “sum” refers to “addition” and that “5” and “7” are the numbers involved.

The Transformer then gives more “attention” to those words that directly impact the answer, so it focuses on the operation (“sum”) and the numbers (“5” and “7”).

This is a key part of how ChatGPT works: it doesn’t process the problem strictly one word at a time, but in a way that captures the relationships between its elements.

This ability to look at everything in context is what makes the Transformer so powerful.

Instead of relying only on the last word or two to figure out what comes next, it first connects the relevant pieces of the whole sentence.
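The “attention” idea can be sketched with scaled dot-product attention, the weighting step used inside Transformers: each word gets a relevance score, and a softmax turns the scores into weights that sum to 1. The 2-D vectors here are hypothetical, hand-picked values chosen so that “sum,” “5,” and “7” look relevant; a real model learns its vectors (with hundreds or thousands of dimensions) and also uses the weights to mix value vectors, which this sketch leaves out.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention weights of one query over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical 2-D key vectors: dimension 0 loosely means "relevant to the math",
# dimension 1 loosely means "filler word".
tokens = ["What", "is", "the", "sum", "of", "5", "and", "7"]
keys = [
    [0.1, 1.0], [0.0, 1.0], [0.0, 1.0], [2.0, 0.2],
    [0.0, 1.0], [1.8, 0.1], [0.1, 1.0], [1.8, 0.1],
]
query = [2.0, 0.0]  # hypothetical query: "which words matter for the arithmetic?"

for token, weight in zip(tokens, attention_weights(query, keys)):
    print(f"{token:>4}: {weight:.2f}")
```

Running it, the largest weights land on “sum,” “5,” and “7,” while filler words like “the” and “of” get very little attention.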


- Step 3: Tokenization and Language Processing

When you type text into ChatGPT, it breaks your prompt into small tokens.

Some tokens are full words, while others are just parts of words.

For example,

When you input “ChatGPT is smart,” ChatGPT might split it into tokens like these (the exact split depends on the tokenizer):

[“Chat,” “G,” “PT,” “is,” “smart”]

Even the name “ChatGPT” gets split into different tokens.

This process is called tokenization. Because the model is trained on tokens instead of full words, it’s much more flexible and can handle:

- Multiple languages (since different languages have different word structures).

- Slang and abbreviations (like “u” for “you” or “idk” for “I don’t know”).

- Rare, made-up, or modified words (by breaking them into familiar pieces, like “unbelievable” into “un,” “believ,” and “able”).
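Here’s a minimal sketch of how a word can be broken into known pieces. It uses greedy longest-match splitting over a tiny hypothetical vocabulary; GPT models actually use byte-pair encoding (BPE) with a much larger learned vocabulary, so their exact splits will differ.

```python
def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedily split `word` into the longest pieces found in `vocab`.

    Falls back to single characters when no longer piece matches, so any
    word, even a made-up one, can be tokenized.
    """
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest possible piece first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab or end - start == 1:
                pieces.append(piece)
                start = end
                break
    return pieces


# A tiny hypothetical vocabulary; real tokenizers have tens of thousands of entries.
vocab = {"un", "believ", "able", "Chat", "G", "PT", "smart", "is"}

print(tokenize("unbelievable", vocab))  # ['un', 'believ', 'able']
print(tokenize("ChatGPT", vocab))       # ['Chat', 'G', 'PT']
print(tokenize("unsmartable", vocab))   # ['un', 'smart', 'able']
```

Because unknown stretches fall back to single characters, even a made-up word like “unsmartable” still gets tokenized.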

- Step 4: Fine-Tuning and Safety Layers

Even after ChatGPT has been trained on a vast amount of data, it’s not quite ready for prime time.

It still needs help to make sure it responds in the most useful, polite, and safe way.

Through supervised fine-tuning, human reviewers give ChatGPT examples of what makes a good response. For example,

- “What is 5 + 7?”

- Bad answer: It’s an easy question. Why don’t you know this?

- Good answer: The sum of 5 and 7 is 12.

Over time, ChatGPT is trained with better examples to become more polite, clear, and focused.

Once it has a solid foundation, it gets more advanced help through Reinforcement Learning from Human Feedback (RLHF).

This process goes like this:

- ChatGPT answers.

- Humans rate that answer based on how good it is — how useful, accurate, and safe it is.

- ChatGPT learns from this feedback and tries to give better answers in the future.
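OpenAI has described ChatGPT’s RLHF as following the same approach as its earlier InstructGPT work, where human feedback is collected as comparisons (“answer A is better than answer B”) and those comparisons train a separate reward model. The sketch below shows the pairwise loss used for that reward-model step, with hypothetical scores for the good and bad answers to “What is 5 + 7?” from the fine-tuning example. The later step, where ChatGPT itself is tuned to earn higher rewards, is not shown.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Reward-model loss for one human comparison.

    Small when the answer humans preferred scores clearly higher than the one
    they rejected; large when the order is wrong.
    """
    return -math.log(sigmoid(reward_chosen - reward_rejected))


# Hypothetical reward-model scores for two answers to "What is 5 + 7?"
good_answer_score = 2.0   # "The sum of 5 and 7 is 12."
bad_answer_score = -1.0   # "It's an easy question. Why don't you know this?"

print(round(pairwise_preference_loss(good_answer_score, bad_answer_score), 3))  # 0.049
print(round(pairwise_preference_loss(bad_answer_score, good_answer_score), 3))  # 3.049
```

Minimizing this loss over many comparisons pushes the reward model toward agreeing with human raters.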

For example, imagine ChatGPT answers a math problem, such as “What is 12 divided by 4?”:

- ChatGPT answers: “3.”

- Human feedback: This answer is great.

- ChatGPT learns: It keeps giving this type of response when similar questions come up.

The goal is for ChatGPT to keep improving, just like a student who learns from past mistakes.

Finally, one important purpose of human-guided fine-tuning is to align ChatGPT with human values.

We want it to be not just smart, but also helpful, harmless, and honest.

For example, suppose a user asks a complex question like “What is the square root of -1?”

Instead of giving a bare answer like “i” with no context, it would provide:

Safe, aligned response: The square root of -1 is an imaginary number, commonly represented as ‘i.’ This concept is used in advanced mathematics.
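For reference, Python’s standard cmath module returns the same answer. Python writes the imaginary unit as j instead of i, and squaring it gives back -1. (Strictly speaking, -i is also a square root of -1; cmath.sqrt returns the principal one, i.)

```python
import cmath

root = cmath.sqrt(-1)   # principal square root of -1
print(root)             # 1j
print(root * root)      # (-1+0j), i.e. i squared is -1
```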

- Step 5: Prompt In, Response Out

This is the final step where ChatGPT is ready to answer your prompts.

A prompt is the text (i.e., question, command, or statement) you type into ChatGPT to start the conversation and get a response.

For example,

You enter the prompt “What’s the weather today?”

Here’s how ChatGPT works behind the scenes:

You type in a prompt → ChatGPT breaks it into tokens → It looks for patterns in the tokens → It predicts the next token, then the next, one at a time → Those tokens form a response whose tone matches your text → You get your final answer

For the prompt “What’s the weather today?”, ChatGPT would likely respond with something like:

“I can’t give real-time weather updates, but you can check a weather site or app like Weather.com or your local news for the most accurate info.”

This is because ChatGPT doesn’t have live data access unless it’s connected to a tool that fetches real-time information.
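The “predict the next token” part of that flow is really a loop: the model scores possible next tokens, one is chosen and appended, and the process repeats until an end marker appears. The sketch below assumes a hypothetical toy_model lookup table in place of a real network, splits the prompt on spaces, and always picks the most likely token (greedy decoding). Real systems typically sample with some randomness instead, which is one reason the same prompt can get different answers.

```python
def toy_model(context: list[str]) -> dict[str, float]:
    """Hypothetical stand-in for a trained model.

    Returns made-up probabilities for the next token. Unlike a real model,
    it only looks at the last token of the context.
    """
    if not context:
        return {"<end>": 1.0}
    table = {
        "today?": {"I": 0.9, "Sunny": 0.1},
        "I": {"can't": 0.8, "think": 0.2},
        "can't": {"check": 0.7, "see": 0.3},
        "check": {"live": 0.6, "the": 0.4},
        "live": {"weather.": 0.9, "data.": 0.1},
        "weather.": {"<end>": 1.0},
    }
    return table.get(context[-1], {"<end>": 1.0})


def generate(prompt: str, max_new_tokens: int = 20) -> str:
    tokens = prompt.split()                 # break the prompt into (toy) tokens
    reply: list[str] = []
    for _ in range(max_new_tokens):
        probs = toy_model(tokens + reply)   # score the possible next tokens
        best = max(probs, key=probs.get)    # greedy: take the most likely one
        if best == "<end>":                 # the model signals it is done
            break
        reply.append(best)                  # append it and predict again
    return " ".join(reply)


print(generate("What's the weather today?"))  # I can't check live weather.
```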

How It “Remembers” Conversations

When you talk to ChatGPT, it seems like it remembers things you said earlier.

And it does — but only while the chat is open. Imagine a big notepad where everything you type gets written down:

You say:

- My dog’s name is Max.

A few lines later, you say:

- What tricks can Max learn?

ChatGPT connects the dots. It remembers that Max is your dog, because it’s still on the notepad.

This notepad is called a context window, and it holds a limited number of tokens.

Early GPT-4 models could hold about 8,000 tokens (32,000 in an extended version), and newer models can hold considerably more.

But once you hit the limit, it has to start erasing the oldest parts to make room for new text.

So if you say “My dog’s name is Max” way back at the start of a long chat, and then 50 paragraphs later ask, “What’s a good leash for him?”, it might forget who “him” is, because that info has already been erased from the notepad.
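The “erasing the oldest parts” behavior can be sketched as a function that keeps only the most recent messages that fit a token budget. This is a hypothetical illustration: it approximates tokens by counting whitespace-separated words and uses a tiny 20-token window so the effect is easy to see.

```python
def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window.

    Tokens are approximated by whitespace-separated words here; real systems
    count tokens with the model's own tokenizer.
    """
    kept = []
    used = 0
    for message in reversed(messages):   # walk from newest to oldest
        cost = len(message.split())
        if used + cost > max_tokens:
            break                        # older messages fall off the notepad
        kept.append(message)
        used += cost
    return list(reversed(kept))          # restore chronological order


chat = [
    "My dog's name is Max.",
    "Can you recommend a good book?",
    "Tell me about the history of Rome in detail please.",
    "What's a good leash for him?",
]
window = fit_to_window(chat, max_tokens=20)
print(window)
print(any("Max" in m for m in window))  # False: the name has been pushed out
```

The loop stops at the first message that doesn’t fit rather than skipping it, so the kept history is always a continuous run of the latest messages. That’s how “Max” ends up off the notepad.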

Now let’s talk about memory between chats.

Normally, when you close the chat, the notepad gets wiped clean.

So next time you open ChatGPT, it starts fresh.

But if you turn on the memory feature, ChatGPT can remember things across sessions. For example,

- You say: I run a small online bakery called Sweet Crumbs.

- A week later, you say: Write me a product description.

- It might reply: Sure! Here’s a description for your Sweet Crumbs cookies…

It doesn’t remember everything. It only remembers what you allow, and you’ll be told when something gets added. You can see, edit, or delete memories at any time.
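OpenAI hasn’t published exactly how the memory feature is wired up, so the following is only a hypothetical sketch of one way to picture it: saved memories are short notes you can view, edit, or delete, and at the start of a new chat they’re placed into the context alongside your message. MemoryStore and build_context are illustrative names, not a real API.

```python
class MemoryStore:
    """Hypothetical store of saved memories that the user can inspect and change."""

    def __init__(self) -> None:
        self._memories: list[str] = []

    def add(self, fact: str) -> None:
        self._memories.append(fact)
        print(f"Memory updated: {fact}")   # the user is told when something is saved

    def view(self) -> list[str]:
        return list(self._memories)        # a copy, so callers can't change it by accident

    def edit(self, old: str, new: str) -> None:
        self._memories[self._memories.index(old)] = new

    def delete(self, fact: str) -> None:
        self._memories.remove(fact)


def build_context(store: MemoryStore, new_message: str) -> str:
    """Start a fresh chat, but put any saved memories in front of the new message."""
    notes = "\n".join(f"- {m}" for m in store.view())
    return f"Saved memories:\n{notes}\n\nUser: {new_message}"


store = MemoryStore()
store.add("Runs a small online bakery called Sweet Crumbs.")
print(build_context(store, "Write me a product description."))
```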

So the lowdown is…

ChatGPT doesn’t actually “remember” like a person. It just looks at what’s in front of it — the current conversation.

If it looks like it recalls something from earlier, it’s because that info is still inside the context window.

Limitations of How ChatGPT Works

ChatGPT is incredibly helpful, but it’s important to understand its limitations, especially if you’re using it for anything customer-facing or conversion-driven.

1 – No real understanding or consciousness

ChatGPT doesn’t understand content like humans do. It doesn’t “know” facts — it simply predicts the next likely word based on training data.

For example,

If you ask, “What does success mean?” it may generate a fluent response, but it doesn’t have beliefs, values, or awareness. It’s mimicking patterns, not forming insights.

2 – Biases from training data

Because ChatGPT is trained on large, mixed sources from the internet, books, forums, and articles, it can inherit biases found in that data.

If the internet leans one way on a topic, ChatGPT might mirror that perspective — sometimes subtly, sometimes not — even when neutrality is required.

3 – Limited access to current information

On its own, the model can’t fetch real-time data. Ask it about a product launched last week or a stock price today without a search tool switched on, and it won’t have a clue.

Its training data has a cutoff, and anything after that point is out of reach.

4 – May “hallucinate” facts or cite fake sources

One of the more dangerous quirks: ChatGPT can make things up. Ask it for a statistic or quote, and it might respond,

“According to the World Health Organization, 80% of adults prefer brand X over brand Y.”

Sounds official — but that stat likely doesn’t exist.

It wasn’t retrieved; it was invented. This issue is known as hallucination, and it’s especially risky in research, journalism, or technical content.

Even if you ask ChatGPT how it works, its answer won’t always be factually accurate.

If you’re using ChatGPT for writing, the output can feel stiff, robotic, or lacking that human edge.

For such nuances, you can use AI Humanizer.

The AI Humanizer rewrites ChatGPT outputs for tone, nuance, and emotion, giving your content a heartbeat.

It softens awkward phrasing, adds warmth, and makes technical or dry copy resonate with your audience.

When your content sounds human, it performs better.

Whether you’re writing landing pages, emails, or LinkedIn posts, relatability drives response. And emotion drives conversion.

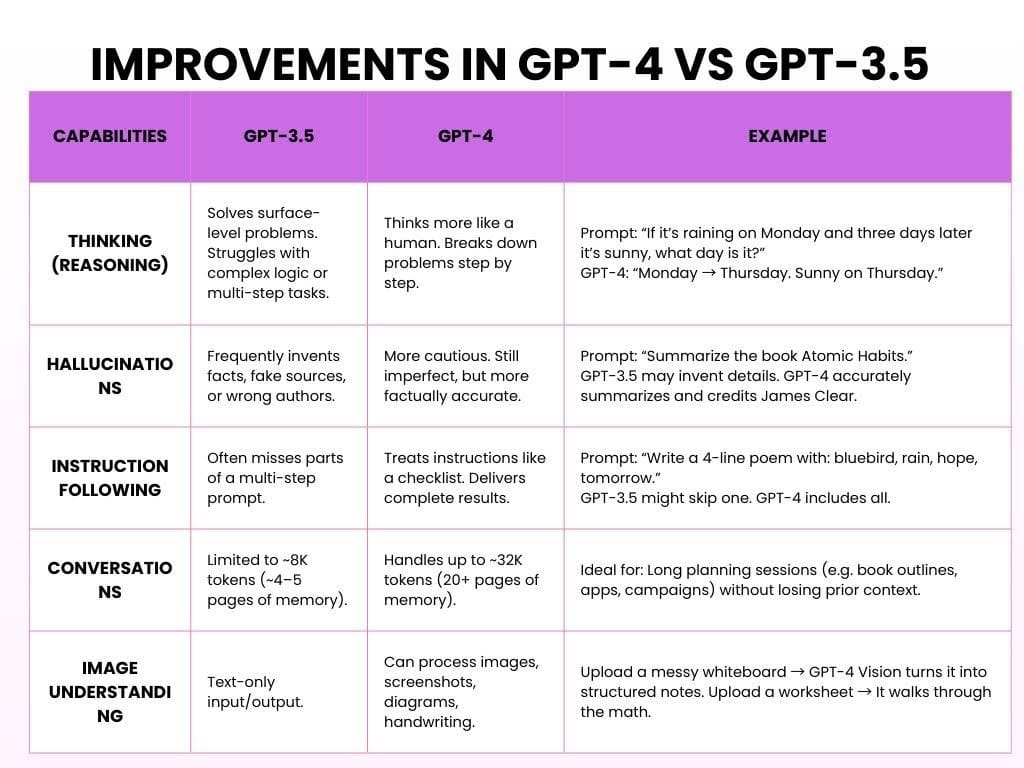

Improvements in GPT-4 vs GPT-3.5

GPT-3.5 is the free version: fast, solid, and great for simple tasks. GPT-4 is a paid OpenAI model that’s smarter, more powerful, and way more helpful.

Here’s how ChatGPT works with both models:

The bottom line: GPT-3.5 was helpful. GPT-4 is dependable, thoughtful, and feels like it’s listening.


How AI Tools Like ChatGPT Are Built

Creating an AI like ChatGPT or other large language models is a multi-year project that involves massive datasets, expert teams, and relentless iteration.

Here’s how it typically happens:

- Phase One: Data Collection (6-12 months)

Objective: Teach the model language patterns.

Before an AI can answer questions, it needs to learn how humans write and speak.

This starts with gathering hundreds of billions of words from books, websites, news, articles, academic papers, and more.

It doesn’t “read” like humans do. Instead, it identifies patterns, as explained in the sections above.

Time required: 6-12 months, depending on scale and team size.

- Phase Two: Pretraining the Model (6-9 months)

Objective: Build the brain.

Pretraining involves feeding the model large volumes of text and having it predict the next token over and over again, adjusting its internal weights each time, until its predictions get good.

This phase often requires powerful GPU clusters and hundreds of millions of dollars in compute resources.

Time required: 6–9 months of non-stop GPU training.
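For GPT-style models, each guess during pretraining is a next-token prediction, and it’s commonly scored with cross-entropy loss: the negative log of the probability the model gave to the correct token. The probabilities below are hypothetical, chosen to show how the loss drops as the model gets better at predicting “blue” after “The sky is”.

```python
import math


def next_token_loss(predicted: dict[str, float], correct: str) -> float:
    """Cross-entropy for one prediction: minus the log of the right token's probability."""
    return -math.log(predicted[correct])


# Hypothetical probabilities a model might assign after reading "The sky is".
early_in_training = {"blue": 0.10, "green": 0.45, "loud": 0.45}
later_in_training = {"blue": 0.90, "green": 0.05, "loud": 0.05}

print(round(next_token_loss(early_in_training, "blue"), 2))  # 2.3
print(round(next_token_loss(later_in_training, "blue"), 2))  # 0.11
```

Training uses gradient descent to nudge the model’s weights so that this loss, averaged over enormous numbers of tokens, keeps going down.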

- Phase Three: Fine-Tuning & Human Feedback (3–6 months)

Objective: Make the AI useful.

Now it can speak — but does it make sense? Maybe or maybe not. At this point, human reviewers rate outputs, correct mistakes, and guide the model using Reinforcement Learning from Human Feedback (RLHF).

Time required: 3–6 months, often running alongside early testing.

- Phase Four: Deployment & Infrastructure (Ongoing)

Objective: Make it scalable.

Once trained, the model is deployed across websites, apps, APIs, and enterprise platforms. This requires serious backend infrastructure: data centers, auto-scaling APIs, and load-balancing systems to handle millions of simultaneous users.

Timeframe: Begins post-training, but continues indefinitely.

- Phase Five: Safety, Bias & Ethics (Ongoing, parallel)

Objective: Keep it safe, honest, and non-harmful.

AI isn’t just about intelligence — it’s about responsibility. Ethical teams work in parallel to flag potential misuse, reduce bias, block harmful content, and uphold privacy standards. They constantly evaluate how the model behaves in the real world.

Timeframe: Lifelong process; embedded into every stage above.

FAQs About How ChatGPT Works

Does ChatGPT search the internet for answers?

It can now. In October 2024, ChatGPT gained the ability to search the internet in real time.

This feature was initially exclusive to paid users, but by December 2024, it became available to everyone.

Is it like a chatbot or something more?

It’s more than a scripted chatbot. ChatGPT is built on a generative AI model, which uses deep learning to produce dynamic, context-aware replies instead of picking from pre-written ones.

Beyond chatting, generative AI can write essays, generate images, compose music, and even create videos, showcasing its versatility across various domains.

Does ChatGPT think?

No, ChatGPT does not think in the way humans do. It doesn’t have awareness, beliefs, intentions, or emotions.

What it does is statistically predict the next word in a sentence based on patterns from its training data. This can look like thinking, but it’s not.

Final Thoughts

Large Language Models (LLMs) have changed how we interact with technology.

They can create text that sounds like it’s written by a human, helping with tasks like answering questions and making creative content.

But LLMs don’t “understand” things or think like people. They work by predicting patterns in data, not through real human thought.

As LLMs get better, we need to think about the problems they can cause, like bias, privacy issues, and misuse.

It’s important to use AI carefully, ensuring it’s fair, transparent, and doesn’t spread false information or harm privacy.

Here are a few usage guidelines:

- Be aware that AI can have bias in its content.

- Use AI tools in ways that follow privacy rules.

- Double-check important information from trusted sources.

- Don’t rely too much on AI. It’s a tool, not a replacement for human thinking.

As AI technology continues to grow more powerful, the question arises: How can we ensure that its advancements enhance human creativity and decision-making, rather than replacing the very things that make us uniquely human?