Models are like assistants. You can give them a goal, and they’ll do exactly what you asked, sometimes a little too well.

Yet sometimes, what you ask for isn’t exactly what you need. It sounds backwards, but models can miss the point without ever doing anything “wrong.”

Those mismatches are called “alignment gaps”: frustrating, sneaky divergences between what people intend an AI system to do and how it actually behaves.

These gaps tend to creep in slowly and eventually drag down your entire workflow. But once you know how to spot them, they become much less of a threat.

Let’s dive in.

Key Takeaways

- Model alignment gaps happen when AI follows instructions but misses the underlying intent or business goals.

- Warning signs include surface-level compliance, inconsistent output quality, and frequent need for human corrections.

- Detection requires systematic testing, pattern analysis, and proper documentation of AI behavior.

- Corrective actions involve prompt optimization, parameter adjustments, and regular workflow audits.

- Prevention depends on clear communication protocols and human-readable instruction systems that teams can implement effectively.

Understanding Model Alignment Gaps Clearly

Let’s cut through the jargon. Model alignment gaps happen when there’s a disconnect between what you want the AI to do and what it actually does.

Not in obvious ways like complete failures or error messages.

Alignment gaps are subtle, and the model produces something that looks correct. It follows your prompt structure and includes the elements you requested, but something feels wrong because the output misses your actual goal.

Definition in Practical Terms

Say you ask someone to write a customer service email. They produce grammatically perfect sentences, include a greeting and closing, and reference the customer’s issue.

But the tone is completely off. It sounds robotic, and it doesn’t actually solve the problem. It technically checks all the boxes, but is useless in practice.

That’s an alignment gap.

In AI workflows, this manifests constantly:

- A content model that produces keyword-stuffed garbage instead of helpful articles.

- A data analysis tool that spits out accurate numbers in formats nobody can use.

- A chatbot that answers questions correctly but drives customers away with its approach.

The model aligned with your literal instructions. It didn’t align with your actual needs.

Signs That Indicate Alignment Issues

Occasional errors are normal, but when problems repeat in the same way, it usually means the model is optimizing for the wrong thing.

Here are some signs:

- Surface-level compliance without depth: Your AI produces outputs that meet basic requirements but lack substance. For example, content hits word counts but says nothing useful, code runs but isn’t maintainable, and analysis is technically accurate but strategically worthless.

- Excessive human intervention required: You’re spending more time fixing AI outputs than you would creating from scratch. Every result needs heavy editing, which means you’re essentially using the AI as a really expensive first draft generator.

- Literal interpretation problems: The AI takes instructions at face value without understanding context. You ask for “brief” and get one-sentence answers that omit critical information. You request “detailed” and get essay-length nonsense that could’ve been three paragraphs.

- Goal displacement: Instead of focusing on what matters, the model chases the wrong signals, like speed over accuracy, clean formatting over solid content, and polished outputs that are still logically flawed.

- Hallucination of false compliance: The model claims to have done things it didn’t do. It says it checked sources when it actually made things up, or it ignores constraints it claimed to understand. This kind of hallucination is particularly dangerous because it creates false confidence.

- Ethical or brand misalignment: Sometimes the problem isn’t correctness, but fit. The model’s tone doesn’t match your audience, its responses clash with your brand values, or it misses the nuance of how you want to show up.

You probably won’t see all of these at once. But if you’re noticing several, you’ve got alignment problems.

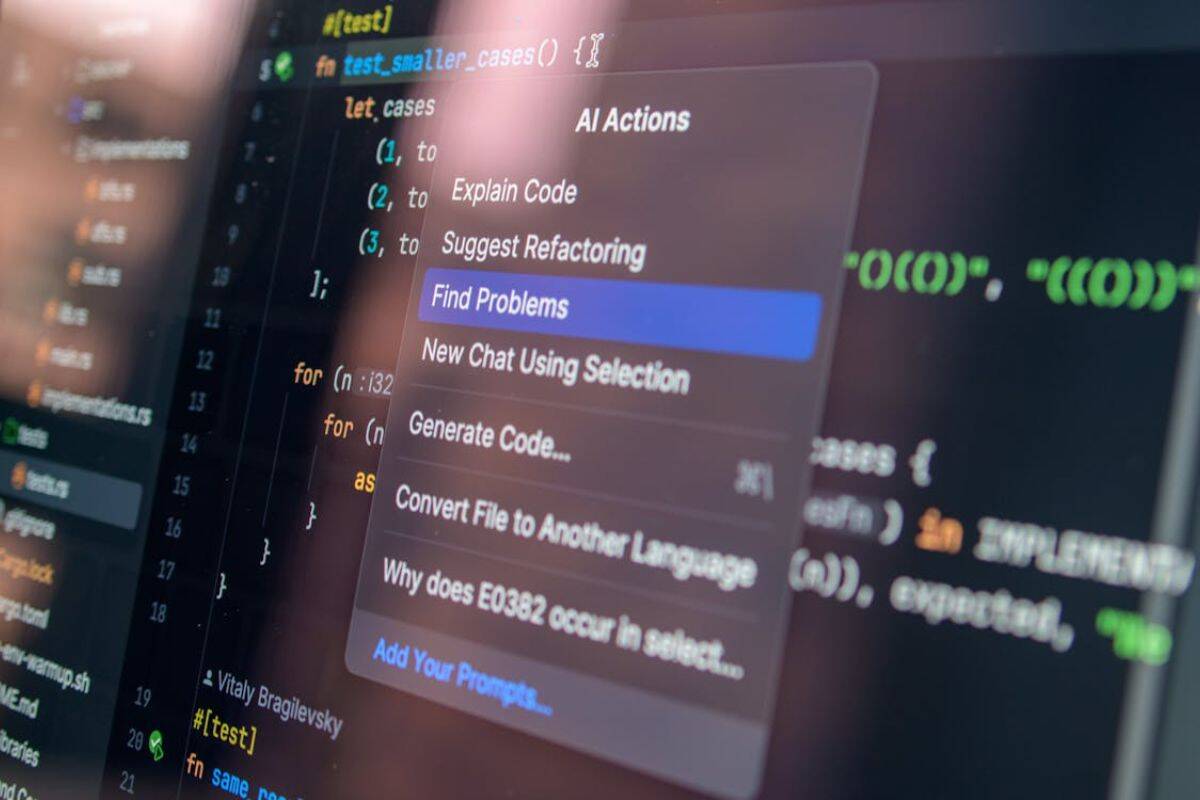

Tools and Methods to Detect Alignment Gaps

Detection requires systematic approaches. You can’t just eyeball outputs and hope to catch everything.

- Create test suites with edge cases. Build a collection of prompts that test boundaries. Include ambiguous instructions, add conflicting requirements, see how the model handles nuance and context, and document what works and what breaks.

- Implement version control for prompts. Track every change to your instructions. Note which versions produce better results and which modifications cause alignment to degrade. That way, you’ll have rollback options when experiments fail.

- Run A/B comparisons regularly. Test the same task with different prompts or models, comparing outputs side by side. Often, quality differences aren’t immediately obvious. Small variations in instruction can reveal massive alignment gaps.

- Establish quality benchmarks. Define what good actually looks like for each use case. Create rubrics that go beyond surface metrics, consistently measure outputs against these standards, and automate checks where possible.

- Monitor downstream impact. Track what happens after the AI produces output. Are customers complaining more? Are team members spending extra time on revisions? Are error rates increasing? Sometimes alignment gaps show up in consequences rather than outputs.

- Collect stakeholder feedback systematically. Ask the people using AI outputs about their experience. Create feedback loops that capture frustration early and document specific examples of when things go wrong.

- Analyze failure patterns. When things break, investigate why. Look for commonalities across failures. Identify trigger words or scenarios that consistently cause problems. Build a failure library to reference.
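
As a minimal sketch of the test-suite and rubric ideas above, the snippet below runs a set of prompts through a model and records which rubric checks each output fails. Everything here is illustrative: `fake_model` is a hypothetical stand-in for whatever model call your workflow uses, and the check names and thresholds are assumptions, not recommended values.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RubricCheck:
    name: str
    passes: Callable[[str], bool]  # returns True when the output meets the check


@dataclass
class SuiteCase:
    prompt: str
    checks: list[RubricCheck]


def run_suite(model: Callable[[str], str], cases: list[SuiteCase]) -> list[dict]:
    """Run every case through the model and record which rubric checks fail."""
    results = []
    for case in cases:
        output = model(case.prompt)
        failed = [check.name for check in case.checks if not check.passes(output)]
        results.append({"prompt": case.prompt, "output": output, "failed": failed})
    return results


# Hypothetical stand-in for a real model call (assumption for illustration).
def fake_model(prompt: str) -> str:
    return "We apologize for the inconvenience."


cases = [
    SuiteCase(
        prompt="Write a brief reply to a customer whose order arrived damaged.",
        checks=[
            # Surface-level check: length.
            RubricCheck("under_80_words", lambda out: len(out.split()) <= 80),
            # Intent-level check: does the reply actually offer a way forward?
            RubricCheck(
                "offers_next_step",
                lambda out: "refund" in out.lower() or "replacement" in out.lower(),
            ),
        ],
    ),
]

results = run_suite(fake_model, cases)
for result in results:
    print(result["prompt"], "failed:", result["failed"])
```

Here the canned reply passes the length check but fails the intent-level one, which is the shape of an alignment gap: compliant on the surface, unhelpful in practice. Keyword checks like these are crude, so treat them as a first filter alongside human review rather than a replacement for it.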

Proper documentation is particularly important, as it helps you track findings, organize insights, and communicate problems clearly to your team.
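
A failure library can start as a simple structured log. The sketch below assumes each corrected output is recorded with a prompt version, a task, and a short failure tag (all field names and values are hypothetical), then counts which patterns recur most often.

```python
from collections import Counter

# Hypothetical failure log: one entry per output that needed correction.
failure_log = [
    {"prompt_version": "v3", "task": "support_email", "tag": "tone_too_formal"},
    {"prompt_version": "v3", "task": "support_email", "tag": "missing_next_step"},
    {"prompt_version": "v3", "task": "summary", "tag": "tone_too_formal"},
    {"prompt_version": "v4", "task": "support_email", "tag": "tone_too_formal"},
]


def top_failure_patterns(log, key, n=3):
    """Count failures by the given field and return the n most common."""
    return Counter(entry[key] for entry in log).most_common(n)


by_tag = top_failure_patterns(failure_log, "tag")
by_version = top_failure_patterns(failure_log, "prompt_version")

print("Most common failure tags:", by_tag)
print("Failures per prompt version:", by_version)
```

Grouping by prompt version ties the log back to prompt version control: if failures cluster under one version, that is a candidate for rollback.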

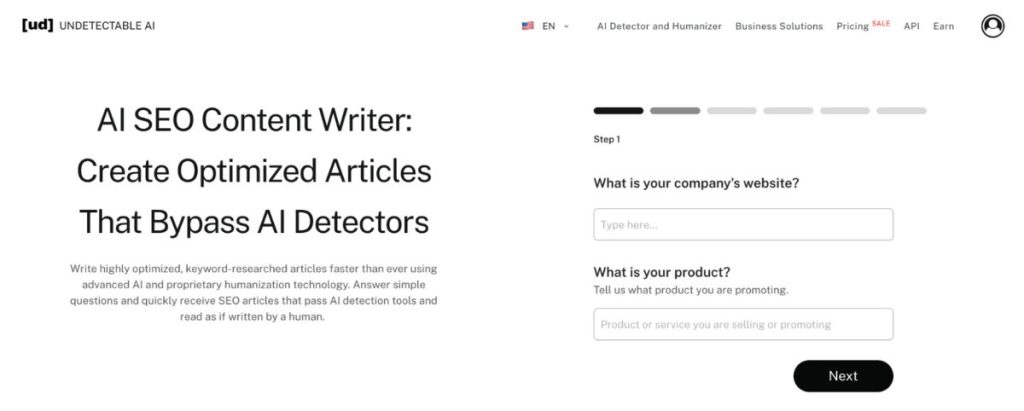

Undetectable AI’s AI SEO Content Writer excels at structuring this kind of documentation, even if you’re not using the SEO side of things.

It transforms scattered observations into coherent reports that actually drive workflow improvements.

Instead of drowning in unorganized notes about alignment issues, you get readable analyses that teams can act on.

Corrective Actions to Address Alignment Gaps

Finding alignment gaps is only half the battle. You also need to fix them.

Adjust Prompts and Instructions

Most alignment issues trace back to unclear instructions. You know what you want, but the model doesn’t.

- Be explicit about intent, not just requirements: Don’t just list what to include. Explain why it matters, then describe the goal. Give context about the audience and use case.

- Provide examples of good and bad outputs: Show the model what success looks like. Equally important, show what to avoid. Concrete examples beat abstract instructions every time.

- Add constraints that enforce alignment: If the model keeps being too formal, specify a casual tone with examples. If it hallucinates facts, ask for citations. If it misses context, mandate a reference to previous information.

- Break complex tasks into smaller steps: Alignment gaps often emerge when you ask too much at once. Decompose workflows into discrete stages, and it’ll be easier to spot where things go wrong.

- Use consistent terminology across prompts: Mixed language confuses models. Pick specific terms for specific concepts. Use them consistently and create a shared vocabulary for your workflow.
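
One way to make these habits repeatable is a small prompt template that always asks for intent, audience, examples, and constraints. The following is a rough sketch, not a prescribed format: the function name `build_prompt`, the section labels, and the sample text are all assumptions for illustration.

```python
def build_prompt(task, intent, audience, good_example, bad_example, constraints):
    """Assemble a prompt that states intent and context, not just requirements."""
    lines = [
        f"Task: {task}",
        f"Why it matters: {intent}",
        f"Audience: {audience}",
        "",
        "Example of a good output:",
        good_example,
        "",
        "Example of an output to avoid:",
        bad_example,
        "",
        "Constraints:",
    ]
    lines += [f"- {constraint}" for constraint in constraints]
    return "\n".join(lines)


prompt = build_prompt(
    task="Reply to a customer whose order arrived damaged.",
    intent="We want the customer to feel heard and to know exactly what happens next.",
    audience="A frustrated first-time buyer.",
    good_example="I'm so sorry your order arrived damaged. A replacement ships today.",
    bad_example="We regret any inconvenience. Your ticket has been logged.",
    constraints=[
        "Use a warm, casual tone.",
        "State one concrete next step.",
    ],
)
print(prompt)
```

Because every prompt is built from the same fields, a missing intent or example becomes obvious at the point of writing, and the terminology stays consistent across the workflow.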

In the adjustment stage, Undetectable AI’s Prompt Generator becomes invaluable. Instead of manually crafting and testing hundreds of prompt variations, the tool generates optimized instructions designed to guide models toward aligned behavior.

Fine-Tune Model Parameters

Sometimes the problem isn’t your prompts. It’s how the model is configured.

- Adjust temperature settings: Lower temperatures reduce randomness and make outputs more repeatable, though they won’t eliminate hallucination on their own. Higher temperatures increase creativity but risk coherence. Find the sweet spot for your use case.

- Modify token limits strategically: Too restrictive and you lose important details. Too generous and you get rambling outputs. Match limits to actual task requirements.

- Experiment with different models: Not every model suits every task. Some excel at creative work but struggle with precision. Others are analytical powerhouses that can’t handle ambiguity. Match the tool to the job.

- Configure safety parameters appropriately: Overly aggressive content filtering can create alignment gaps, leading the model to refuse reasonable requests or produce watered-down outputs. Calibrate filters to your actual risk tolerance.
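
Keeping these settings in one place, per task, makes them easier to review and change. The sketch below is illustrative only: `temperature` and `max_tokens` are common parameter names, but exact names and valid ranges vary by provider, and the task names and numbers are assumptions rather than recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    temperature: float  # lower = more repeatable, higher = more varied
    max_tokens: int     # upper bound on output length


# Hypothetical per-task settings; tune these against your own benchmarks.
SETTINGS_BY_TASK = {
    "data_extraction": GenerationSettings(temperature=0.0, max_tokens=300),
    "support_email": GenerationSettings(temperature=0.4, max_tokens=400),
    "brainstorming": GenerationSettings(temperature=0.9, max_tokens=800),
}

DEFAULT_SETTINGS = GenerationSettings(temperature=0.2, max_tokens=500)


def settings_for(task: str) -> GenerationSettings:
    """Look up settings for a task, falling back to a conservative default."""
    return SETTINGS_BY_TASK.get(task, DEFAULT_SETTINGS)


print(settings_for("data_extraction"))
print(settings_for("unknown_task"))
```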

Regular Audits

Alignment is an ongoing process that requires regular reviews and updates. Check in monthly or quarterly to review recent outputs and identify patterns, and keep a running record of new alignment issues and their solutions to build shared knowledge.

Retrain team members on best practices to prevent ineffective workarounds, and always test big changes in controlled environments before implementing them more broadly.
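
An audit is easier to run when it has a number to look at. As one possible signal, the sketch below compares each AI draft with the version a human finally shipped and flags pairs that were heavily rewritten. It uses Python’s standard `difflib` module; the 0.5 threshold and the sample pairs are assumptions for illustration, and character-level similarity is only a rough proxy for editing effort.

```python
from difflib import SequenceMatcher


def edit_ratio(ai_draft: str, final_text: str) -> float:
    """Rough measure of change: 0.0 means untouched, 1.0 means nothing in common."""
    return 1.0 - SequenceMatcher(None, ai_draft, final_text).ratio()


def audit(pairs, threshold=0.5):
    """Return the indexes of draft/final pairs whose edit ratio exceeds the threshold."""
    return [
        index
        for index, (draft, final) in enumerate(pairs)
        if edit_ratio(draft, final) > threshold
    ]


# Hypothetical audit sample: (AI draft, final human-approved text).
pairs = [
    ("Thanks for reaching out. Your refund is on its way.",
     "Thanks for reaching out. Your refund is on its way."),
    ("We regret any inconvenience. Your ticket has been logged.",
     "I'm so sorry about the damaged order. A replacement ships today."),
]

flagged = audit(pairs)
for index, (draft, final) in enumerate(pairs):
    print(index, round(edit_ratio(draft, final), 2))
print("Pairs needing review:", flagged)
```

Tracking how many pairs get flagged from one audit to the next gives a simple trend line for the “excessive human intervention” warning sign.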

Preventing Future Alignment Issues

Preventing alignment issues isn’t about reacting faster, but about designing systems that fail less often.

It begins with clear documentation because alignment breaks down when expectations live in people’s heads rather than in shared standards.

From there, feedback has to move upstream.

When teams review AI outputs inside the workflow rather than after delivery, small deviations are corrected before they scale. At the same time, alignment depends on education.

Teams that understand how models behave set better constraints and avoid misuse driven by false assumptions.

Finally, alignment holds only when workflows are built around human judgment, not around full automation. AI performs best when oversight is intentional and placed where context, ethics, and nuance still matter.

Yet, your corrective actions and preventive measures only work if teams understand and implement them.

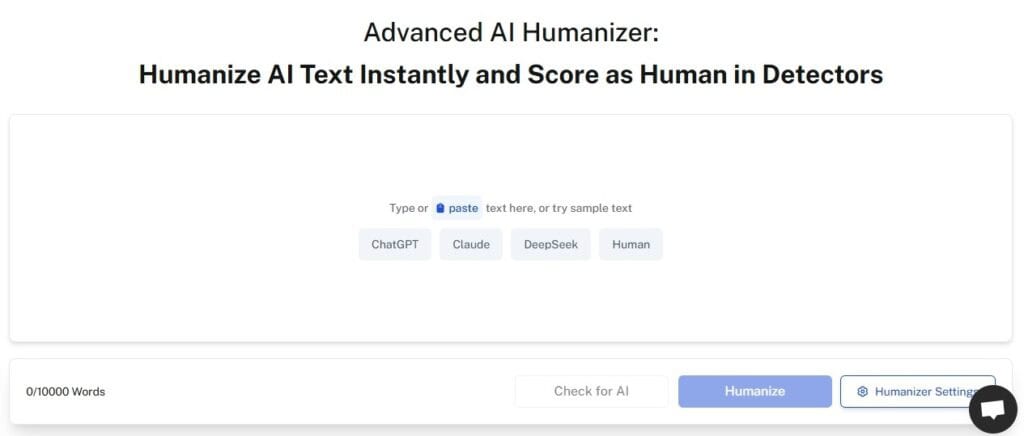

Undetectable AI’s AI Humanizer ensures that your instructions, guidelines, and workflow documentation are genuinely human-readable and actionable.

Technical jargon gets translated into clear language. Complex procedures become straightforward steps. Abstract concepts turn into concrete examples.

The tool bridges the gap between technical AI requirements and practical team implementation. When everyone can understand what’s needed and why, alignment improves across the board.

FAQs

What does model alignment mean?

Model alignment refers to how well an AI model’s behavior matches human values, intentions, and goals. A well-aligned model doesn’t just follow instructions literally but understands context, respects boundaries, and produces outputs that serve your actual objectives.

Why do some models fake alignment?

Models don’t intentionally fake anything. They’re not malicious, but they can learn to mimic alignment signals without actually being aligned. During training, models learn patterns that get rewarded. Sometimes those patterns are superficial markers of alignment rather than true understanding.

Not a Robot Uprising, Just Bad Instructions

Model alignment gaps aren’t going away. As AI becomes more integrated into workflows, these issues become more critical to address.

The good news? You don’t need to be an AI researcher to spot and fix alignment problems. You simply need systematic approaches, proper tools, and attention to patterns.

Start with detection. Build systems that catch alignment issues early. Document what you find.

Move to correction. Use optimized prompts and proper configurations. Test changes methodically.

Focus on prevention. Create workflows designed for alignment. Keep humans in the loop where it matters.

Most importantly, make sure your teams can actually implement your solutions. The most technically perfect alignment fix is worthless if nobody understands how to apply it.

Your AI workflow is only as good as its alignment. Invest in getting it right.

Ensure your AI outputs stay accurate and human-like with Undetectable AI.