Safety and ethics in AI are still a grey zone. For all the progress we’ve made, many AI tools today are notorious for scraping questionable data, producing biased results, and operating with little transparency about how they actually work.

Claude AI is one of the few systems that openly markets itself on the promise of ethical intelligence. Its makers claim to have built an AI that relies on free-flowing information and evidence-based reasoning only.

Of course, bold claims like that naturally raise questions.

Who exactly is behind Claude AI? And how does the company’s structure support its values instead of compromising them for profit?

In this article, I’ll discuss who owns Claude AI and how the company’s ownership model supports its moral compass.

Key Takeaways

- Claude AI is built and owned by Anthropic, an AI company founded in 2021 by former OpenAI researchers Dario and Daniela Amodei.

- Anthropic operates as a public-benefit corporation (PBC), i.e., it’s legally bound to balance profit with purpose. Amazon and Google have invested heavily in the company, but they are minority shareholders and do not control its decisions.

- Claude uses constitutional AI, which means it self-checks its answers against a written set of ethical rules inspired by principles of human rights, honesty, and privacy.

Who Owns Claude AI

Claude AI is owned and developed by Anthropic, a San Francisco-based artificial intelligence startup founded in 2021.

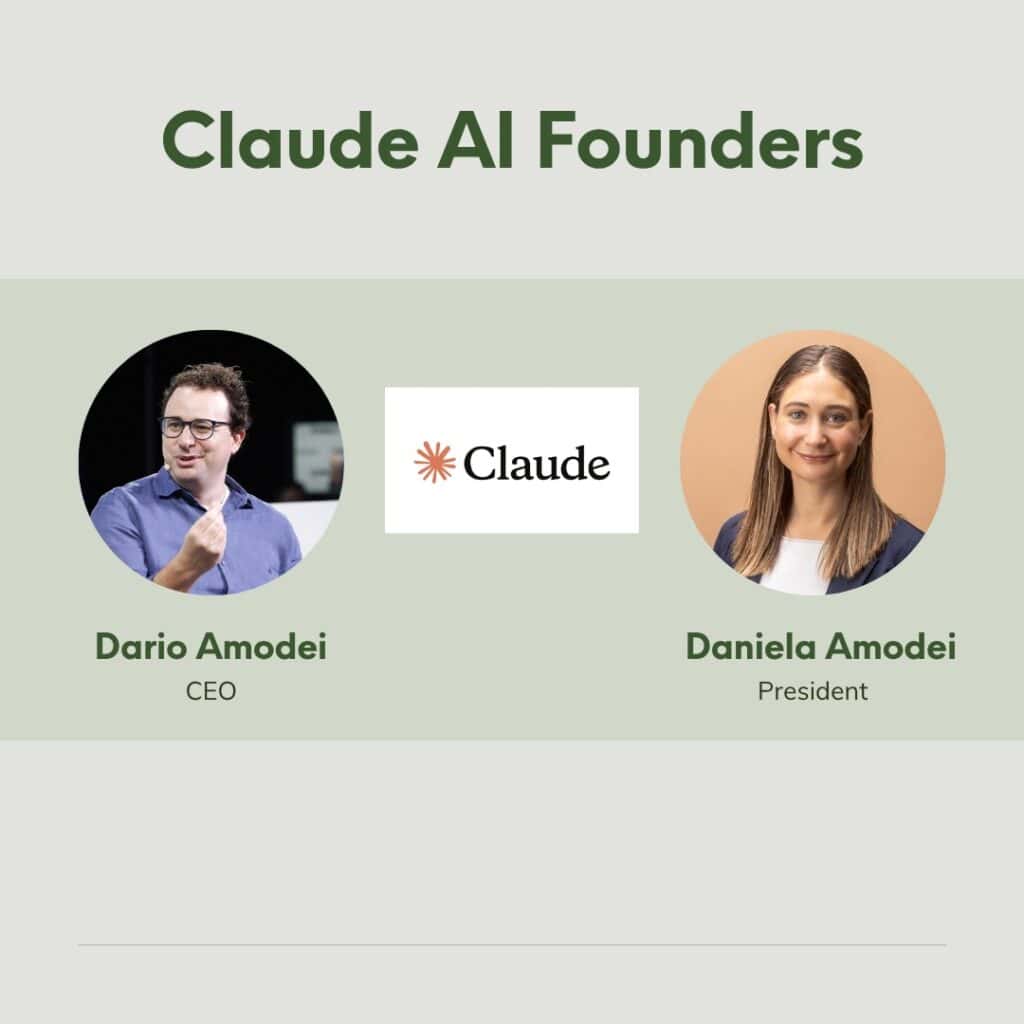

Anthropic was founded by former OpenAI researchers, including Dario Amodei and Daniela Amodei, who are brother and sister.

Dario, the CEO, was previously OpenAI’s vice president of research and one of the key people behind GPT-2 and GPT-3. Daniela, the president, oversaw safety policy at OpenAI.

Claude, their flagship AI model, is named after Claude Shannon, the mathematician called the father of information theory.

The first version of Claude launched in March 2023, followed by improved Claude 2, Claude 3, and the latest iterations that compete with OpenAI’s GPT models.

Anthropic’s research methods, particularly constitutional AI training, in which Claude learns through explicit ethical guidelines, have also influenced other AI systems, including Undetectable AI’s AI Chat.

So, AI Chat is a safe, privacy-first conversational assistant inspired by Claude’s rigorous design principles.

Anthropic’s Investors and Ownership Structure

Anthropic is a privately held public-benefit corporation (PBC) incorporated in Delaware. The “public-benefit” part means it is legally chartered to balance profit with a mission.

In Anthropic’s case, that mission is building AI that aligns with human values rather than chasing purely short-term financial returns.

Now, who owns Anthropic, the company behind Claude AI?

The company’s ownership is shared among several parties.

The founders, including siblings Dario Amodei (CEO) and Daniela Amodei (President), have a large say in the company’s direction.

Early employees hold stock options too, as is common in tech start-ups.

The major investors in the company include:

- Amazon has committed a multibillion-dollar investment to Anthropic, making it one of the company’s largest external backers. In 2023, it announced an investment of up to $4 billion, and it has since increased that commitment.

- Google currently holds about 14% of Anthropic shares and is set to add another $750 million this year, which will push its total investment in the company past $3 billion.

On top of that, many venture capital firms (such as Spark Capital, Lightspeed Venture Partners, and Iconiq Capital) and institutional investors have also invested in the company.

Anthropic has also created a “Long-Term Benefit Trust” (sometimes called the “purpose trust”).

The trust holds certain governance rights intended to keep the company’s mission insulated from investor pressure. That makes Amazon and Google large minority shareholders rather than controlling owners.

In September 2025, Anthropic also raised about $13 billion in a funding round that valued the company at around $183 billion.

How Claude AI Works

Claude AI works much like any generative-AI tool that’s read most of the internet and learned to predict a response to your prompts.

It is a large language model (LLM) trained on massive text datasets. But what makes Claude different from other chatbots is how it was trained to self-check and behave safely.

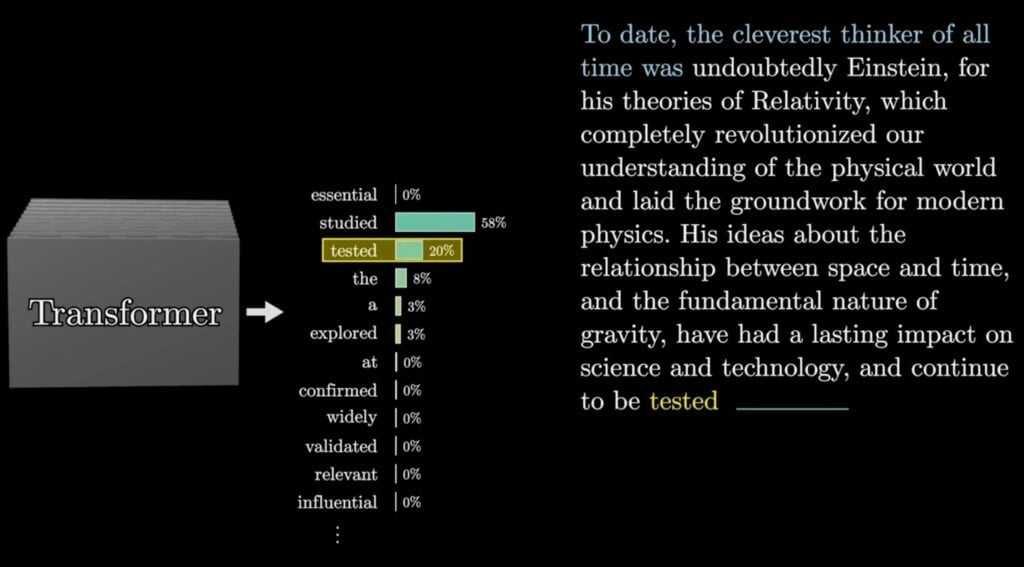

Technically, Claude is built on a transformer architecture (the same general structure used in GPT and Gemini models), which allows it to handle long contexts.

The Claude Model Series

The Claude series began with the release of Claude 1 in March 2023.

It was Anthropic’s first public model, which gained immediate public attention for being a more transparent alternative to other chatbots.

Claude 2, launched in July 2023, came with improved reasoning, writing, and contextual understanding.

Claude 3 was released in March 2024, and it marked a major leap in the tool’s development. It introduced a family of three models with different strengths:

- Haiku, the fastest and most lightweight version of Claude 3, suited to real-time tasks and customer service bots.

- Sonnet, which balances speed with intelligence and is ideal for business and research use.

- Opus, the most capable Claude 3 model, used for deep reasoning, creative writing, and data-heavy problem-solving.

The latest Claude models (as of Oct 2025) are Claude Sonnet 4.5, Claude Haiku 4.5, and Claude Opus 4.1.

| Feature | Claude Sonnet 4.5 | Claude Haiku 4.5 | Claude Opus 4.1 |
| --- | --- | --- | --- |
| Description | Advanced model built for coding, automation, and reasoning-heavy tasks. | Lightweight and extremely fast model ideal for everyday queries and productivity. | Premium model optimized for deep reasoning and complex analysis. |
| Speed | Fast | Fastest | Moderate |
| Context Limit | 200K tokens (up to 1M in beta) | 200K tokens | 200K tokens |
| Maximum Output | 64K tokens | 64K tokens | 32K tokens |
| Extended Thinking Mode | Available | Available | Available |
| Priority Access | Included | Included | Included |
| Reliable Knowledge Cutoff | January 2025 | February 2025 | January 2025 |
| Training Data Cutoff | July 2025 | July 2025 | March 2025 |
| Comparative Latency | Balanced for most workflows | Fastest overall | Slower, focused on depth and accuracy |
| Ideal Use Cases | Coding agents, large-scale automation, in-depth writing | Quick responses, general productivity, chat applications | Research, data interpretation, and decision-making requiring nuanced reasoning |
| API Pricing (USD per million tokens) | $3 input, $15 output | $1 input, $5 output | $15 input, $75 output |
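To make the API pricing row concrete, here is a minimal sketch that turns those list prices into a per-request estimate. The function name `estimate_cost` and the example token counts are illustrative assumptions, and real bills may differ (for example, because of discounts or features the table does not cover).

```python
# Prices in USD per million tokens, copied from the API pricing row of the table above.
PRICES_PER_MILLION = {
    "Claude Sonnet 4.5": {"input": 3.00, "output": 15.00},
    "Claude Haiku 4.5": {"input": 1.00, "output": 5.00},
    "Claude Opus 4.1": {"input": 15.00, "output": 75.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request at the listed prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 10,000 tokens in, 2,000 tokens out.
    for model in PRICES_PER_MILLION:
        cost = estimate_cost(model, input_tokens=10_000, output_tokens=2_000)
        print(f"{model}: ${cost:.4f}")
```

For the same hypothetical request, Opus 4.1 costs five times as much as Sonnet 4.5 and fifteen times as much as Haiku 4.5, which is why the lighter models are usually the default for high-volume tasks.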

What Is Constitutional AI?

Most chatbots are trained through a method called “reinforcement learning from human feedback.”

It basically means that people sit down to read tons of AI-generated replies and mark the ones that sound better.

Does it work? Yes.

But is it efficient? Not so much.

Anthropic came up with a different approach called Constitutional AI: giving the model a written constitution, a set of clear, transparent rules about how it should behave.

The rules are drawn from real ethical frameworks like the Universal Declaration of Human Rights and general ideas around honesty and privacy.

Claude is trained to critique its own responses and check whether they follow the constitutional rules. That process, sometimes described as self-alignment, is meant to improve the model’s judgment without constant human correction.
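The critique step can be pictured as a simple loop: take a draft, check it against each rule, and rewrite it when a rule is broken. The sketch below is a toy illustration only. The two principles, `toy_critique`, and `toy_revise` are hypothetical stand-ins (in the real method, the model itself does the critiquing and rewriting, and the revised answers are used as training data). It is not Anthropic’s actual implementation.

```python
from typing import Callable, List

# Hypothetical one-line principles paraphrasing the themes named in the text.
CONSTITUTION: List[str] = [
    "Be honest and do not state things you cannot support.",
    "Respect privacy and do not reveal personal data.",
]


def critique_and_revise(
    draft: str,
    critique: Callable[[str, str], bool],
    revise: Callable[[str, str], str],
    principles: List[str] = CONSTITUTION,
) -> str:
    """Check a draft against each principle and revise it when one is violated."""
    response = draft
    for principle in principles:
        if critique(response, principle):  # True means "violates this principle"
            response = revise(response, principle)
    return response


def toy_critique(response: str, principle: str) -> bool:
    """Toy check: flag anything that looks like an email under the privacy rule."""
    return "privacy" in principle.lower() and "@" in response


def toy_revise(response: str, principle: str) -> str:
    """Toy rewrite: replace every word containing '@' with a placeholder."""
    return " ".join("[redacted]" if "@" in word else word for word in response.split())


if __name__ == "__main__":
    draft = "Contact jane@example.com for details."
    print(critique_and_revise(draft, toy_critique, toy_revise))
```

Here the draft passes the honesty rule untouched, fails the privacy rule, and comes back with the email address redacted.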

Claude AI vs. ChatGPT vs. Gemini

Claude, ChatGPT, and Gemini are all large language models made to understand human input and respond to it.

The core concept behind them all is also the same, i.e., predicting the next most likely word in a sentence guided by massive training data.
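To see what “predicting the next most likely word” means in practice, here is a deliberately tiny sketch that counts which word follows which in a sample sentence. It is a bigram counter, not a transformer, and the corpus and function names are made up for illustration. Real LLMs learn far richer patterns, but the prediction goal is the same.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat slept"
    model = train_bigrams(corpus)
    print(predict_next(model, "the"))    # "cat" follows "the" twice, "mat" once
    print(predict_next(model, "zebra"))  # never seen in the corpus
```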

Each system, however, was designed in a different way.

Claude, as we just discussed, uses constitutional AI to refine its responses based on a set of pre-determined rules.

You’ll see it pausing to explain its reasoning or outline its limitations. So, it’s less prone to overconfidence.

Plus, Claude’s current models, which include Haiku, Sonnet, and Opus, have a context window of up to 200,000 tokens.

That is big enough to analyze an entire book without losing the thread. Long context is not unique to Claude, though: Gemini 1.5 introduced a context window of up to one million tokens, as noted later in this section.
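For a rough feel of what 200,000 tokens means, the sketch below estimates whether a piece of text would fit. It assumes about four characters per token, which is only a common rule of thumb for English text and not how any real tokenizer counts, so treat the result as a ballpark figure.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # context window figure quoted in the text
CHARS_PER_TOKEN = 4              # assumption: rough rule of thumb, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(
    text: str,
    window: int = CONTEXT_WINDOW_TOKENS,
    reserved_for_output: int = 0,
) -> bool:
    """Return True if the estimated tokens plus the reserved output budget fit."""
    return estimate_tokens(text) + reserved_for_output <= window


if __name__ == "__main__":
    # Hypothetical book of about 500,000 characters.
    book = "x" * 500_000
    print(estimate_tokens(book), fits_in_context(book))
```

Under this assumption, a 500,000-character book comes to roughly 125,000 tokens, which leaves room for the question and the answer inside a 200,000-token window.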

ChatGPT is the most widely used AI chatbot to date and includes a live web-browsing feature. Its models (GPT-3.5, GPT-4, GPT-4 Turbo, and GPT-5, the current high-end version) are trained using reinforcement learning from human feedback (RLHF).

It basically learns from thousands of examples of what people like or dislike in responses. Naturally, ChatGPT tends to produce responses that people find agreeable.

Sometimes, they can be inaccurate (i.e., it occasionally “hallucinates” details), but it is arguably the strongest conversationalist of the three.

The current versions of ChatGPT also include DALL·E 3 (for images), Code Interpreter/Advanced Data Analysis, and custom GPTs.

And Gemini, previously called Bard, combines Google Search and AI modeling in one place. Its core strength is access to real-time information drawn from Google Search.

As a Google product, it is also integrated across Google Workspace (Docs, Sheets, Gmail, Slides).

In terms of architecture, Gemini 1.5 introduced long-context reasoning of up to one million tokens in the research version. Gemini tends to sound a bit more fact-based than ChatGPT.

Here are some detailed benchmarks to compare the performance of Claude AI vs. ChatGPT vs. Gemini.

| Benchmark / Task | Claude Sonnet 4.5 | Claude Haiku 4.5 | Claude Sonnet 4 | GPT-5 | Gemini 2.5 Pro |
| --- | --- | --- | --- | --- | --- |
| Agentic Coding (SWE-bench Verified) | 77.2% | 73.3% | 72.7% | 72.8% (GPT-5 high) / 74.5% (Codex) | 67.2% |
| Terminal Coding (Terminal-Bench) | 50.0% | 41.0% | 36.4% | 43.8% | 25.3% |
| Tool Use Performance (τ²-bench) | Retail 86.2%, Airline 70%, Telecom 98% | Retail 83.2%, Airline 63.6%, Telecom 83% | Retail 83.8%, Airline 63%, Telecom 49.6% | Retail 81.1%, Airline 62.6%, Telecom 96.7% | n/a |
| Computer Use (OSWorld) | 61.4% | 50.7% | 42.2% | n/a | n/a |
| Math Competition (AIME 2025) | 100% (Python) / 87.0% (no tools) | 96.3% (Python) / 80.7% (no tools) | 70.5% (no tools) | 99.6% (Python) / 94.6% (no tools) | 88% |
| Graduate-Level Reasoning (GPQA Diamond) | 83.4% | 73% | 76.1% | 85.7% | 86.4% |
| Multilingual Q&A (MMMLU) | 89.1% | 83% | 86.5% | 89.4% | n/a |
| Visual Reasoning (MMMU Validation) | 77.8% | 73.2% | 74.4% | 84.2% | 82% |

Use the AI Checker to assess whether Claude’s responses contain AI-generated patterns.

Paste the output into the tool, and it instantly analyzes phrasing, tone, and structure to show how human or machine-written the text sounds.

It’s a quick way to verify content quality before sharing or publishing Claude’s responses.

Common Misconceptions About Claude AI

Most of us didn’t grow up with AI as part of our everyday lives, so it’s only natural that some of its concepts still feel abstract.

Here are some common misconceptions about Claude AI, and the truth behind them.

- Claude AI can surpass human intellect

A lot of people online like to say Claude, or any big AI model, really, can surpass human intelligence. The concept sounds really fun as a sci-fi movie plot, but it’s not true.

All AI models produce their responses based on math. For every input, they look at patterns in the data they were trained on to predict what text should come next. No self-awareness, understanding, or actual reasoning in the human sense is involved in the process.

But humans rely on gut-level decision-making all the time, which psychologists call heuristics. Those instincts are built on lived experiences.

Claude can definitely outpace humans in speed, but that’s not the same thing as being smarter.

- Claude can learn on its own

The idea that Claude can “learn on its own” sounds almost believable because it is built on constitutional AI.

But evaluating its own responses on ethical grounds is not the same as real learning.

Claude doesn’t teach itself new information or upgrade its abilities over time. Every improvement you’ve ever seen in a new Claude release, from Claude 1, 2, and 3 to the Claude 4 Opus and Sonnet models, came from the efforts of human engineers.

Constitutional AI is simply a controlled training mechanism that engineers created to make Claude apply consistent ethical reasoning. Once training is done, the model is static. It can’t acquire new knowledge on its own.

- Claude is more ethical so it won’t lie or hallucinate

A lot of people also assume that because Claude has a reputation for being “ethical,” it won’t make things up.

The thing is, Claude is still an AI model. And Anthropic never claimed it was infallible. In fact, they’ve been refreshingly transparent about it.

The company has openly said that Claude can still “hallucinate,” because it generates whatever text it predicts is statistically most likely to come next.

Claude Docs gives users a practical tip: explicitly tell the system that it’s okay to say “I don’t know.” According to the docs, this instruction can reduce the chances of hallucination.
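In practice, that tip usually means adding one sentence to the instructions you send along with your question. The sketch below only builds the request as a plain dictionary and sends nothing. The field layout follows the general shape of a chat request, and the model name, token limit, and exact wording of the instruction are assumptions you should check against the current API reference.

```python
import json

# Assumption: wording is illustrative, not an official recommended phrase.
UNCERTAINTY_INSTRUCTION = (
    "If you are not sure about a fact or cannot find support for it, "
    "say \"I don't know\" instead of guessing."
)


def build_request(question: str, model: str = "claude-sonnet-4-5") -> dict:
    """Build a chat request that explicitly permits the model to admit uncertainty."""
    return {
        "model": model,        # assumption: placeholder model name
        "max_tokens": 512,     # assumption: arbitrary output limit for the example
        "system": UNCERTAINTY_INSTRUCTION,
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    request = build_request("Who invested in Anthropic in 2023?")
    print(json.dumps(request, indent=2))
```

The same sentence works just as well when typed directly into the chat box before your question.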

Conclusion

Claude AI is definitely a benchmark for how AI should be developed responsibly. The tool reflects Anthropic’s commitment to building AI that respects and aligns with human values.

That said, the basic, free version of Claude comes with usage limits that reset every five hours, which is a little bit of a problem if you need continuous access.

If you’re looking for a similarly trustworthy, security-conscious AI platform that’s also free to use, check out Undetectable AI.

Besides a simple chatbot, Undetectable AI also has really cool specialized tools like the AI Humanizer, AI Paraphraser, Grammar Checker, AI SEO Writer, etc. All of the products follow the same high bar for privacy as Claude!

Give Undetectable AI a try today!