With the right AI prompt, you can do basically anything. That’s how powerful AI tools already are.

AI tools have reshaped how businesses operate, allowing them to streamline processes and drive efficiency like never before.

One area where AI truly excels is content creation. We’re not just talking about drafting outlines or generating social media posts.

AI can even help you write books and complete complex research papers.

But with great power comes great responsibility, and AI can’t always be trusted to do the right thing.

Sometimes, AI produces what are known as “hallucinations.” These are incorrect or bizarre outputs that can mislead users.

Nearly half of organizations report data quality challenges that jeopardize AI initiatives.

It’s not a silver bullet, but “grounding” aims to ensure that AI outputs are based on reliable information.

While it might sound complex, understanding what grounding and hallucinations are in AI is crucial for anyone using these powerful tools.

Let’s get into the details of AI hallucination so you can confidently maximize your output with AI while making sure your information stays accurate.

Key Takeaways

- AI hallucinations occur when models generate false or misleading information due to data gaps or overfitting.

- Grounding anchors AI outputs to verified, real-world data to reduce hallucinations and improve reliability.

- Hallucinations in content creation can harm credibility, spread misinformation, and cause legal issues.

- Prevent issues by using reliable tools, verifying content, and providing clear, detailed prompts.

- Combining AI detection and human oversight, as with Undetectable AI, helps ensure accuracy and authenticity.

What Are Grounding and Hallucinations in AI?

Knowing what an AI hallucination is, and how it can occur, is especially helpful for organizations that need their information to be as accurate as possible.

By learning about grounding and artificial intelligence hallucinations, you’ll better understand how AI tools work and be able to keep your AI-generated content credible.

What Are Hallucinations in AI?

Imagine you asked an AI chatbot for information about a person.

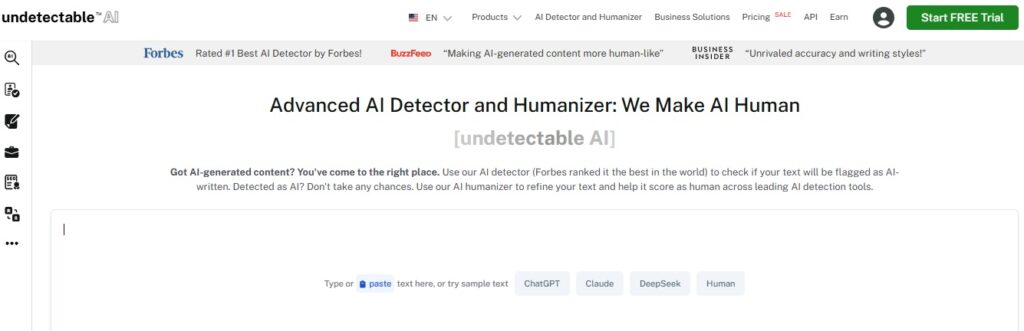

The model accurately provides details frequently mentioned in different online sources.

But then, as you cross-check the information provided, you notice that the AI also incorrectly guesses lesser-known information like their age and educational background.

These wrong details are what’s known as “hallucinations.”

Hallucination rates can vary between models. ChatGPT’s rate was previously reported at around 3%, while Google’s systems were estimated at up to 27%.

These chatbots are driven by a large language model that learns from analyzing massive amounts of text.

Hallucinations happen because these models are trained to predict words based on patterns in the data they’ve analyzed, without understanding what’s true or not.
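To see how pattern-based prediction can produce a confident but wrong answer, here is a minimal sketch of a toy next-word predictor. The tiny corpus and the function names `predict_next` and `complete` are made up for illustration. Real language models are far more sophisticated, but the failure mode is similar in spirit: the most common pattern wins, whether or not it is true.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration); real models see vastly more text.
corpus = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "the capital of spain is madrid . "
    "the capital of france is paris ."
).split()

# Count which word follows each word in the corpus.
next_word_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_word_counts[current][following] += 1


def predict_next(word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = next_word_counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


def complete(prompt, max_words=5):
    """Extend the prompt one word at a time, looking only at the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


print(complete("the capital of italy is"))  # prints "the capital of italy is paris"
```

The predictor answers “paris” because “paris” follows “is” more often than any other word in its data. It has no notion of which country was asked about, so the sentence reads fluently and is still false.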

Hallucinations mostly come from incomplete training data.

Remember that the model can pick up and reproduce errors depending on what it gets fed.

There’s also “overfitting,” where the model fits its training data too closely and picks up false patterns or connections that don’t hold for new inputs.
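A small sketch can make overfitting more concrete. It assumes a made-up dataset where the true rule is y = 2x and every training label is off by +1 or -1. The `memorizer` model copies the training labels, errors included, while a simple straight-line fit smooths them out. All names and numbers here are illustrative only.

```python
# Hypothetical data: the true rule is y = 2x, but each training label is off by +1 or -1.
train_x = list(range(20))
train_y = [2 * x + (1 if x % 2 == 0 else -1) for x in train_x]


def memorizer(x):
    """Overfit model: copy the label of the closest training point, noise included."""
    nearest = min(train_x, key=lambda t: abs(t - x))
    return train_y[nearest]  # works because train_x values double as list indices


def fit_line(xs, ys):
    """Ordinary least squares for a straight line y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(train_x, train_y)


def line(x):
    """Simple model: a straight line fitted to the training data."""
    return slope * x + intercept


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# New inputs neither model has seen, scored against the true rule.
test_x = [x + 0.25 for x in range(19)]
test_y = [2 * x for x in test_x]

train_error_memorizer = mean_squared_error(memorizer, train_x, train_y)
test_error_memorizer = mean_squared_error(memorizer, test_x, test_y)
test_error_line = mean_squared_error(line, test_x, test_y)
print(train_error_memorizer, test_error_memorizer, test_error_line)
```

The memorizer scores perfectly on the data it has seen and does noticeably worse than the plain line on new inputs. It treated the noise as if it were a real pattern.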

Solving AI hallucinations is challenging because these models operate on probabilities and patterns, so they will sometimes produce false results.

This can mislead users who might trust the AI content as it is. That’s why it’s important to be aware of this limitation when using AI for content creation and fact-checking.

What Is Grounding in AI?

Grounding AI is an effective method to reduce hallucinations. It works by anchoring the AI’s responses to real-world data.

For example, a grounded system would use a reliable database from the get-go to provide factual answers, reducing the likelihood of the AI making stuff up.

Instead of relying only on predicting the next word based on patterns, a grounded system first retrieves information from a credible source.

A grounded system is also more trustworthy because it can admit when the available context is insufficient and it cannot provide everything you need, rather than just guessing.
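The retrieve-first idea can be sketched in a few lines. This is a simplified illustration, not how any particular product works: `TRUSTED_FACTS` stands in for a vetted database, the word-overlap scoring is a deliberately naive assumption, and a real system would pass the retrieved text to a language model instead of returning it directly.

```python
import re

# Hypothetical trusted source; in practice this could be a vetted database or document store.
TRUSTED_FACTS = [
    "Paris is the capital of France.",
    "Rome is the capital of Italy.",
    "Madrid is the capital of Spain.",
]

# Common words that carry little meaning for matching.
STOPWORDS = {"the", "is", "of", "what", "a", "an"}


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, min_overlap=2):
    """Return the fact sharing the most meaningful words with the question, if any."""
    question_words = tokenize(question) - STOPWORDS
    best_fact, best_score = None, 0
    for fact in TRUSTED_FACTS:
        score = len(question_words & tokenize(fact))
        if score > best_score:
            best_fact, best_score = fact, score
    return best_fact if best_score >= min_overlap else None


def grounded_answer(question):
    """Answer only from retrieved facts; otherwise admit the context is insufficient."""
    fact = retrieve(question)
    if fact is None:
        return "I don't have enough reliable context to answer that."
    return f"According to the trusted source: {fact}"


print(grounded_answer("What is the capital of Italy?"))
print(grounded_answer("What is the capital of Peru?"))
```

The first question matches a stored fact, so the answer comes straight from the source. The second has no good match, so the system says it does not know instead of guessing.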

To maximize the value of AI while minimizing inaccuracies, organizations should invest time upfront gathering accurate training data, guidelines, and other necessary background info.

This preparation helps AI outputs stay grounded in reality so that users can trust them.

So, while grounding is not a foolproof solution, it can significantly improve the quality of AI outputs.

What Is the AI Hallucination Problem in Content Creation?

In content creation, AI hallucinations can take different forms.

When creating a blog post or article, the AI might include false facts, incorrect statistics, or made-up quotes.

AI hallucination isn’t intentional deception, either. The model can’t lie since it has no motivation to deceive, nor is it aware that what it is saying is false.

It simply lacks a sense of how reliable its results are.

The consequences of AI hallucinations in content creation can be severe.

- Spread of Misinformation: More than one in five people admit they might have shared fake news without checking.

- Damage to Credibility: If an individual or company publishes content with misleading information, their reputation can suffer. Trust is crucial for users, and once it’s lost, it can be challenging to regain.

- Legal and Financial Repercussions: Publishing false information can lead to potential headaches, even lawsuits. It’s a costly battle you won’t want to encounter.

- Negative Impact on Business Decisions: Companies that rely on AI-generated reports and insights risk making poor choices based on incorrect data.

False information harms both the audience and the company or individual using AI for content creation. AI hallucinations can make this problem worse by generating content that looks credible but is flawed.

How to Prevent Problems Caused by AI Hallucinations

We know how valuable AI tools are in content creation. Using AI is even considered one of the best ways to make money today and to stay competitive in the industry.

It’s also why tackling the AI hallucination problem is crucial to ensure that the content you create with AI is accurate.

Understand AI Limitations

While we already know how powerful AI is, it has its limitations.

When it comes to content creation, AI excels at recognizing patterns and generating text based on huge datasets, but it still lacks any sense of reality.

Keep these limitations in mind when using AI:

- Struggles to grasp context or understand subtle cultural distinctions

- Heavily relies on the quality and quantity of information that’s available

- Tendency to reproduce biases present in the training data

- Lacks human-like creativity and originality

- Can generate content that is morally questionable

- Has trouble solving problems outside of what the system knows

- Difficulty in adapting to changing situations or providing real-time input

- Vulnerable to attacks and can compromise data privacy

Knowing these limits also helps you guard against AI hallucinations. Boundaries help set realistic expectations and make it easier to catch inaccuracies before they go public.

Use Reliable Models and Tools

The best AI tools for content creation make things easy. These tools aren’t perfect, but what you use should definitely get the job done.

For AI chatbots, ChatGPT is still one of the strongest options. GPT-4 has been reported to reach an accuracy rate of about 97%, the lowest hallucination rate among ChatGPT models.

Just be sure you give clear prompts and check the results against reliable sources.

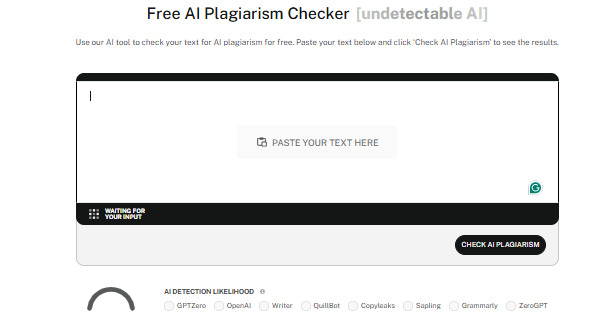

You should also use trusted AI detection tools like Undetectable AI.

Detectors analyze content effectively to spot even the most subtle AI elements, maintaining authenticity in any content you produce and keeping you credible.

Undetectable also has an AI Humanizer that further refines text to sound natural.

With it, you don’t just pass AI detection, you also help maintain your content’s quality.

Verify Content

No matter how you use AI in the content creation process, it’s always important to verify the content you’ve produced.

These are some common ways to verify AI content:

- Cross-verify by comparing information across multiple reliable sources

- Use fact-checking tools to streamline debunking misinformation

- Seek insights from subject matter experts or professionals in the field

- Have content reviewed by peers or colleagues to help identify potential errors, biases, or inaccuracies

- Use AI detection and plagiarism-checking software to confirm originality and avoid unintentional plagiarism

- Consider historical context when referencing events or data for accuracy
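The first check in the list, comparing a claim across several sources, can be sketched as a simple agreement count. The function name `cross_verify`, the source labels, and the two-source threshold are all assumptions chosen for illustration. In practice a person would still decide which sources count as reliable.

```python
from collections import Counter


def cross_verify(claimed_value, source_values, min_agreeing=2):
    """Label a claim by how many independent sources report the same value."""
    counts = Counter(source_values.values())
    if counts[claimed_value] >= min_agreeing:
        return "supported"
    top_value, top_count = counts.most_common(1)[0] if counts else (None, 0)
    if top_count >= min_agreeing and top_value != claimed_value:
        return "contradicted"
    # Too little agreement either way: hand the claim to a person.
    return "needs human review"


# Hypothetical lookups for "the year the Eiffel Tower was completed".
sources = {"encyclopedia": 1889, "official_site": 1889, "travel_blog": 1887}

print(cross_verify(1889, sources))  # supported
print(cross_verify(1887, sources))  # contradicted
print(cross_verify(1900, {"encyclopedia": 1889, "travel_blog": 1887}))  # needs human review
```

Anything that is not clearly supported goes back to a person, which keeps the tool as a filter and leaves the final call to you.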

Taking precautions pays off. Verifying AI content gives you much-needed confidence that what you produce is trustworthy.

Provide Clear Instructions

One of the main causes of AI hallucination is unclear instructions.

If you ask AI questions that are too vague with no additional context, it’s more likely to generate answers that don’t make much sense.

Clear, specific, and detailed prompts are what keep AI on track.

Instead of asking overly broad questions, like “What’s the best Asian restaurant?” try something more focused, such as “What are the highest-rated ramen shops in Kyoto?”

Specificity helps AI stay on track and produce accurate results that meet your expectations.
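If you write prompts often, a small helper can keep them specific. The sketch below is only one possible layout: `build_prompt`, its parameters, and the sample context are hypothetical, and the closing “say so instead of guessing” line is a common habit, not a guarantee against hallucinations.

```python
def build_prompt(question, context=None, constraints=()):
    """Assemble a prompt that states the question, the background, and the limits."""
    parts = [f"Question: {question.strip()}"]
    if context:
        parts.append(f"Context: {context.strip()}")
    for rule in constraints:
        parts.append(f"Constraint: {rule}")
    # Giving the model an explicit way out is a common way to discourage guessing.
    parts.append("If you are not sure, say so instead of guessing.")
    return "\n".join(parts)


# The vague version carries no context or limits.
vague = build_prompt("What's the best Asian restaurant?")

# The focused version names the dish, the city, and what a good answer looks like.
specific = build_prompt(
    "What are the highest-rated ramen shops in Kyoto?",
    context="I am planning a trip and want places open for dinner.",  # made-up context
    constraints=["List at most five shops", "Say where each rating comes from"],
)
print(specific)
```

The focused prompt gives the AI the subject, the location, and the shape of the answer you expect, which leaves less room for it to fill gaps on its own.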

Stay Updated

Technology continues to advance at a rapid pace, so be sure to keep yourself up to date.

AI essentially learns from the data it’s trained on, but information can quickly become outdated.

This is especially true in fast-moving fields like scientific research or healthcare.

You can stay informed by following trusted sources on social media, taking courses related to your industry, and attending industry events.

This way, you’ll be able to judge whether the content provided by AI remains credible.

AI tools are also updated quite often, so stay on top of the latest developments to make sure you’re using the best ones.

Human Expertise

Humans and AI must work together to ensure quality, accuracy, and authenticity in content creation.

After all, we’re ultimately the ones who bring critical thinking, creativity, and ethical judgment to the table.

By combining AI’s capabilities with human oversight, you can verify that content is not only accurate but also contextually relevant and engaging.

Human experts can spot biases, double-check information, and make decisions that AI alone might not be able to grasp.

Humans and AI, together, can create content that’s reliable, insightful, and impactful.

Conclusion

Understanding grounding and hallucinations in AI is essential for anyone using AI tools in their work.

Grounding makes AI more accurate and maintains your content’s integrity by using real, verified data.

Being aware of AI hallucinations also helps you know the right ways to avoid them and steer clear of potential pitfalls.

Keeping AI content accurate is only one side of the process. Ensure that your content stays authentic and high-quality by using Undetectable AI.

This tool acts as both an AI detector and humanizer, helping AI-generated content blend seamlessly with human writing and pass AI detection.

Choose the best AI content verification options for your needs, and let Undetectable AI consistently keep your content reliable, original, and human. Start using it today.