AI copyright lawsuits are intensifying in 2025, reshaping how copyright law applies to generative AI technologies.

At the center is The New York Times’s landmark case against OpenAI and Microsoft, alleging unauthorized use of its articles to train advanced models like ChatGPT.

At the same time, a wave of consolidated lawsuits from bestselling authors, publishers, and media companies is challenging the core legality of AI training practices.

The outcomes of these high-stakes cases could redefine the future of AI, journalism, and creative industries worldwide.

What does this mean for the future of AI, content ownership, and legal responsibility?

Here, we break down the key developments, the major players involved, and what creators, businesses, and technologists need to understand moving forward.

Key Takeaways

- The New York Times’ lawsuit against OpenAI and Microsoft is moving forward in court, with major implications for copyright law, fair use, and AI training practices.

- Authors like George R.R. Martin and John Grisham are part of a consolidated class-action lawsuit claiming their books were used without permission to train large language models.

- Courts are scrutinizing AI’s use of copyrighted content, especially when copyright management information (CMI) is stripped or works are scraped in bulk without consent.

- OpenAI has been ordered to preserve user chat data, raising major privacy and legal compliance questions that could influence future platform policies.

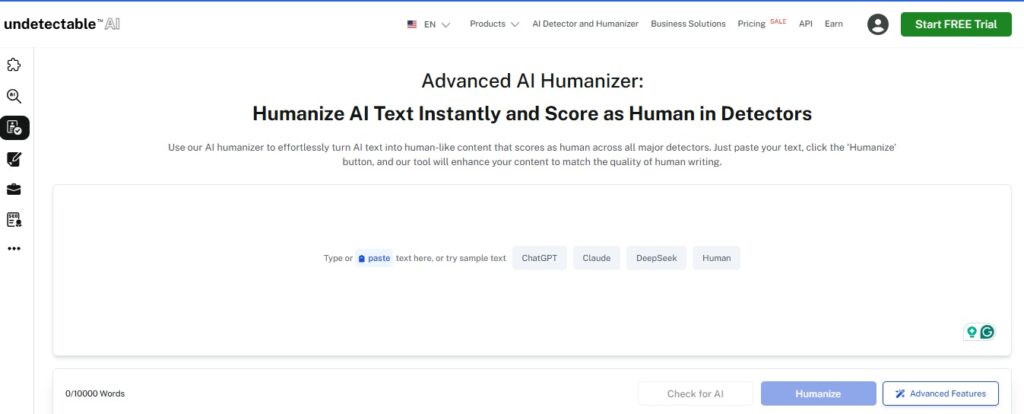

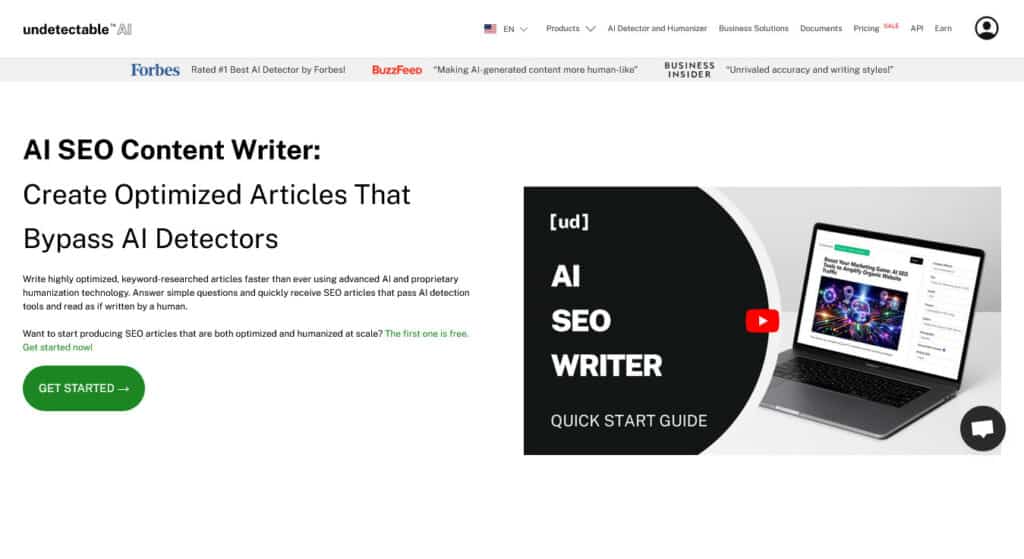

- Undetectable AI tools like the AI Humanizer and AI SEO Content Writer offer creators a safe and legal path to leveraging AI while avoiding copyright and originality risks.

The NYT vs. OpenAI and Microsoft: Signals from the Courtroom

Legal Journey and Key Rulings (2025)

The New York Times’ case, initiated in December 2023, remains one of the most consequential AI copyright disputes.

In March 2025, Judge Sidney Stein refused to dismiss the lawsuit, allowing most copyright and DMCA claims to proceed—excluding some peripheral claims like certain unfair competition arguments.

In July, the case was consolidated with related lawsuits filed by authors like John Grisham and Jonathan Franzen, with the combined proceedings routed to the Southern District of New York for streamlined handling.

Privacy Meets Litigation: User Chat Preservation

A critical privacy flashpoint emerged when the court ordered OpenAI to indefinitely preserve user chats—even deleted ones—for discovery.

OpenAI, led by Sam Altman, strongly opposed the order, citing user trust and data protection norms, and has lodged an appeal.

Discovery Disputes and Data Access

The Times pushed for access to as many as 120 million ChatGPT logs, while OpenAI offered a compromise of 20 million.

DMCA Implications: Copyright Management Info (CMI)

Plaintiffs argue OpenAI stripped CMI (copyright management information, such as author names, titles, and copyright notices) from downloaded articles before training its models.

Cases like Raw Story v. OpenAI and others suggest DMCA claims may survive if plaintiffs show deliberate removal of CMI.

What This Means for AI, Creators, and the Future of Content

Fair Use Under Scrutiny: Courts are no longer broadly deferring to fair use. How the data was obtained, whether CMI was stripped, and whether the use is truly transformative are all under close legal scrutiny.

Consolidation Expedites Litigation: With 12-plus related lawsuits aggregated under Judge Stein in New York, the cases can be coordinated more efficiently, potentially producing a benchmark verdict that influences similar disputes.

Impacts on AI Business Models: AI firms may increasingly turn toward content licensing, stricter data filtering, and enhanced transparency—especially in light of high-profile court actions and public backlash.

Policy & Regulatory Pressures Mount: With Congress and publishers pressing for transparency, and separate cases involving ANI in India and media companies in Canada moving forward, regulatory scrutiny of AI training and content use is tightening globally.

Looking Ahead: The Future of AI and Copyright Law

As lawsuits escalate in 2025, one thing is clear: the future of generative AI will be shaped by legal precedent.

Lawmakers are weighing new legislation like the TRAIN Act to enforce transparency and licensing for AI training data.

Meanwhile, AI companies are beginning to pivot toward licensed content and ethical data use.

Tools like AI Humanizer and AI SEO Content Writer offer a safer path forward—helping users create compliant, high-quality content without risking copyright violations.

Globally, other countries are following suit, signaling a new era where AI development must respect the rights of human creators.

The coming years will likely define whether AI thrives as a collaborative tool—or faces tighter legal constraints.

How Undetectable AI Can Help Creators and Brands Navigate This Terrain

As the copyright and AI landscape evolves, Undetectable AI equips creators with tools that align with legal safety and originality:

- AI Humanizer – Ensures AI-generated content is contextually refined and distinctly human—helping avoid potential over-reliance on AI outputs that could trigger infringement concerns.

- AI SEO Content Writer – Generates optimized, original content rooted in licensed or safe data—supporting compelling storytelling that’s protected from copyright claims.

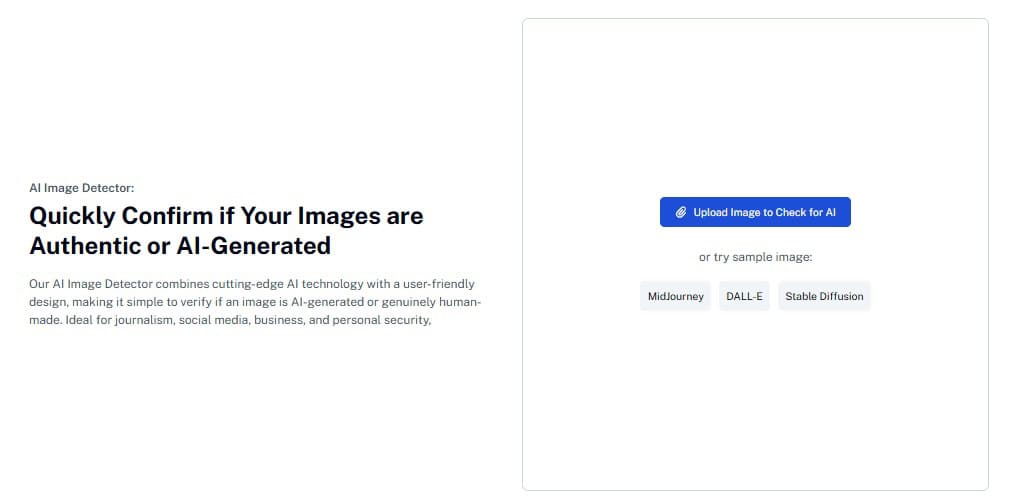

- AI Image Detector – Scans AI-generated visuals for detectability, ensuring transparency and adherence to ethical and legal standards.

- Internal Insights & Blog Resources – Explore related deep dives like “How AI Training Must Evolve After NYT vs OpenAI” or “Fair Use vs Fair Theft: Navigating AI Content” to stay informed and compliant.

How TruthScan’s AI Image Detector Supports Copyright Integrity

In a time when AI-generated visuals are flooding the internet, maintaining image authenticity is just as critical as protecting written content.

TruthScan’s AI Image Detector helps creators, media teams, and legal professionals verify whether an image has been generated, altered, or scraped without authorization.

It examines pixel data, metadata, and hidden generative patterns to flag synthetic visuals or unauthorized manipulations.

For publishers and brands, this ensures every image used—whether for marketing, journalism, or product design—meets ethical and copyright standards.

Together, Undetectable AI and TruthScan give creators and brands the tools to protect their work and uphold integrity in today’s fast-changing digital world.

FAQs

Is it still safe to train AI on web content at scale?

Not without caution. You must consider licensing, CMI retention, and whether the use is truly transformative.

As courts have signaled, fair use does not excuse intentional removal of CMI or bulk copying of copyrighted works.

Will these lawsuits set legal precedent?

Possibly—but with caveats. The Anthropic settlement avoids a ruling. The NYT case may carry substantial weight—but outcomes may vary based on jurisdiction, data practices, and proof of harm.

How can creators protect their work?

Leverage tools like Undetectable AI to generate human-sounding content and monitor for potential infringement. Register copyrights where needed and consider opt-out or licensing options.

What role does privacy play in these cases?

Huge. OpenAI is appealing orders requiring indefinite data retention, citing privacy norms, GDPR, and user trust. This suggests future cases may balance IP law with privacy protections.

Is legislation coming?

Pressure is mounting—from authors, publishers, and lawmakers—to legislate AI transparency and fair compensation. But as of now, significant new laws haven’t passed.

Conclusion

The AI–copyright firefight is no longer hypothetical—it’s here, live in the courts.

Outcomes from the NYT and consolidated author suits, along with Anthropic’s settlement, are redefining how generative AI must acquire and respect copyrighted content.

For creators, publishers, and AI developers, understanding evolving rules—and choosing tools that respect them—is now essential.

That’s where Undetectable AI comes in.

Whether you’re a writer, educator, business, or legal team, tools like the AI Humanizer, AI SEO Content Writer, and AI Image Detector empower you to create compliant, human-sounding, copyright-safe content that’s ready for 2025 and beyond.

Stay protected. Stay original. Explore Undetectable AI today and build confidently in the age of ethical, transparent AI.